The best way to train your dog is by using a reward system. You give the dog a treat when it behaves well, and you chastise it when it does something wrong. This same policy can be applied to machine learning models too! This type of machine learning method, where we use a reward system to train our model, is called Reinforcement Learning.

In this article titled ‘What is Reinforcement Learning? The best guide to Reinforcement Learning’, we will talk about reinforcement learning and how to implement it in python.

Need for Reinforcement Learning

A major drawback of machine learning is that a tremendous amount of data is needed to train models. The more complex a model, the more data it may require. But this data may not be available to us. It may not exist or we simply may not have access to it. Further, the data collected might not be reliable. It may have false or missing values or it might be outdated.

Also, learning from a small subset of actions will not help expand the vast realm of solutions that may work for a particular problem. This is going to slow the growth that technology is capable of. Machines need to learn to perform actions by themselves and not just learn from humans.

All of these problems are overcome by reinforcement learning. In reinforcement learning, we introduce our model to a controlled environment which is modeled after the problem statement to be solved instead of using actual data to solve it.

Your AI/ML Career is Just Around The Corner!

AI Engineer Master’s ProgramExplore Program

What is Reinforcement Learning?

Reinforcement learning is a sub-branch of Machine Learning that trains a model to return an optimum solution for a problem by taking a sequence of decisions by itself.

We model an environment after the problem statement. The model interacts with this environment and comes up with solutions all on its own, without human interference. To push it in the right direction, we simply give it a positive reward if it performs an action that brings it closer to its goal or a negative reward if it goes away from its goal.

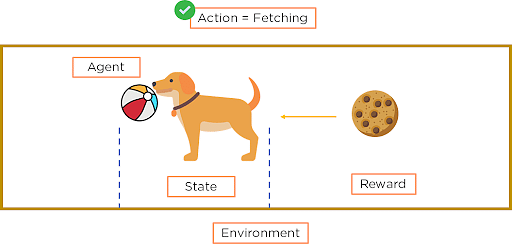

To understand reinforcement learning better, consider a dog that we have to house train. Here, the dog is the agent and the house, the environment.

Figure 1: Agent and Environment

Figure 1: Agent and Environment

We can get the dog to perform various actions by offering incentives such as dog biscuits as a reward.

Figure 2: Performing an Action and getting Reward

The dog will follow a policy to maximize its reward and hence will follow every command and might even learn a new action, like begging, all by itself.

Figure 3: Learning new actions

The dog will also want to run around and play and explore its environment. This quality of a model is called Exploration. The tendency of the dog to maximize rewards is called Exploitation. There is always a tradeoff between exploration and exploitation, as exploration actions may lead to lesser rewards.

Figure 4: Exploration vs Exploitation

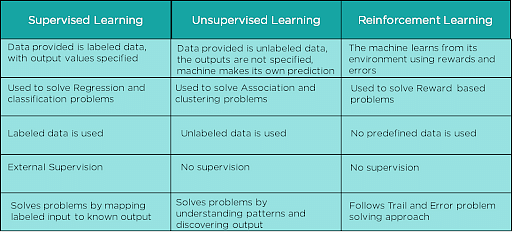

Supervised vs Unsupervised vs Reinforcement Learning

The below table shows the differences between the three main sub-branches of machine learning.

Table 1: Differences between Supervised, Unsupervised, and Reinforcement Learning

Important Terms in Reinforcement Learning

- Agent: Agent is the model that is being trained via reinforcement learning

- Environment: The training situation that the model must optimize to is called its environment

- Action: All possible steps that can be taken by the model

- State: The current position/ condition returned by the model

- Reward: To help the model move in the right direction, it is rewarded/points are given to it to appraise some action

- Policy: Policy determines how an agent will behave at any time. It acts as a mapping between Action and present State

Figure 5: Important terms in Reinforcement Learning

Your AI/ML Career is Just Around The Corner!

AI Engineer Master’s ProgramExplore Program![]()

What is Markov’s Decision Process?

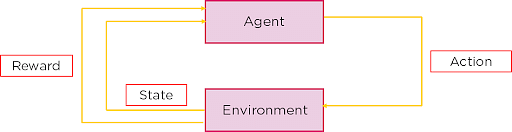

Markov’s Decision Process is a Reinforcement Learning policy used to map a current state to an action where the agent continuously interacts with the environment to produce new solutions and receive rewards

Figure 6: Markov’s Decision Process

First, let’s understand Markov’s Process. Markov’s Process states that the future is independent of the past, given the present. This means that, given the present state, the next state can be predicted easily, without the need for the previous state.

This theory is used by Markov’s Decision Process to get the next action in our machine learning model. Markov’s Decision Process (MDP) uses:

- A set of States (S)

- A set of Models

- A set of all possible actions (A)

- A reward function that depends on the state and action R( S, A )

- A policy which is the solution of MDP

The policy of Markov’s Decision Process aims to maximize the reward at each state. The Agent interacts with the Environment and takes Action while it is at one State to reach the next future State. We base the action on the maximum Reward returned.

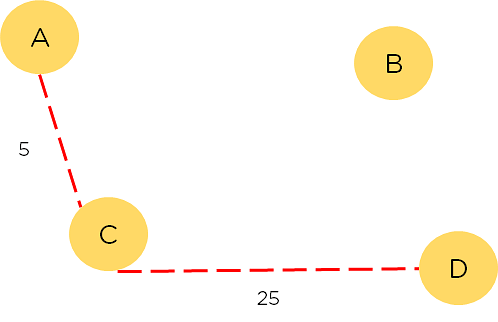

In the diagram shown, we need to find the shortest path between node A and D. Each path has a reward associated with it, and the path with maximum reward is what we want to choose. The nodes; A, B, C, D; denote the nodes. To travel from node to node (A to B) is an action. The reward is the cost at each path, and policy is each path taken.

Figure 7: Nodes to traverse

The process will maximize the output based on the reward at each step and will traverse the path with the highest reward. This process does not explore but maximizes reward.

Figure 8: Path taken by MDP

Reinforcement Learning in Python

Let us see how we can use reinforcement learning in a real-life situation.

Let’s make a game of Tic-Tac-Toe using reinforcement learning. As we know, we don’t require any data for reinforcement learning.

Figure 9: Tic Tac Toe

Let’s start by importing the necessary modules :

Figure 10: Importing modules

Define the tic-tac-toe board :

![]()

Figure 11: Defining rows and columns

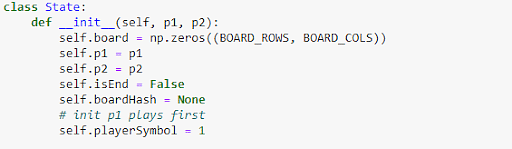

Now, let’s define a function for the different states that we can take.

Figure 12: Defining states

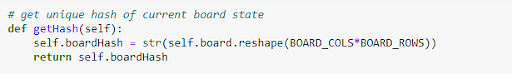

The actions taken on the board will have to be stored as a hash function

Figure 13: storing action

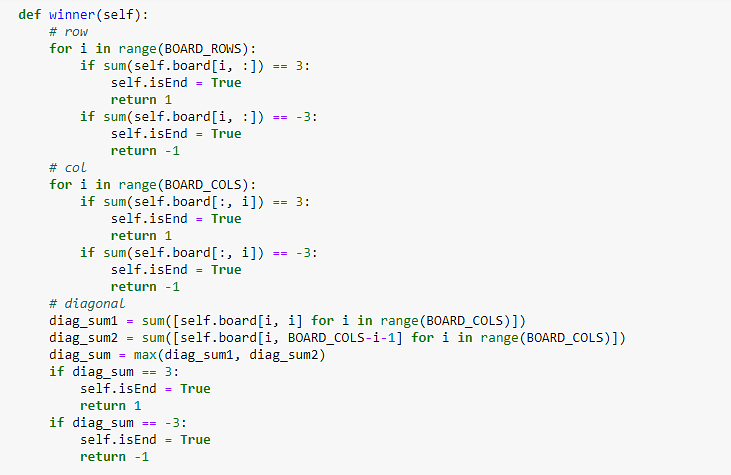

Let us define a function to find the winner of the game:

Figure 14: Finding a winner

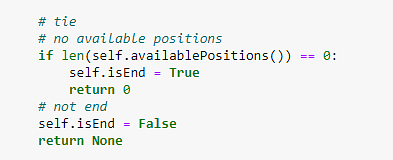

Apart from the winner, the game may also end in a tie:

Figure 15: Finding tie

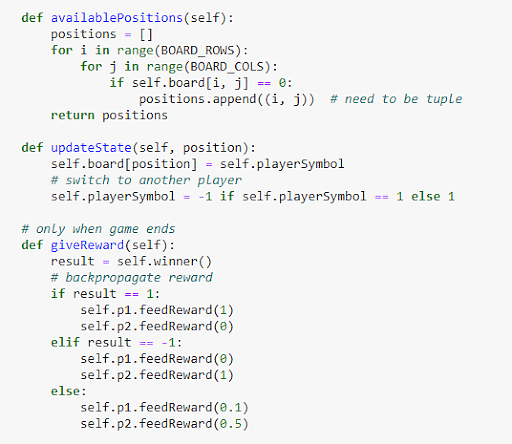

Let’s define a function to keep track of the available positions on the board. We will also define a function to update the state and a reward function:

Figure 16: Finding available positions. Updating state and defining reward

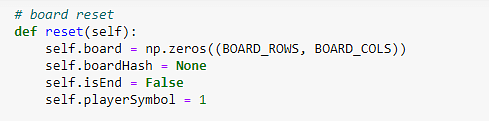

After the game is over, we need to reset the board:

Figure 17: Resetting the board

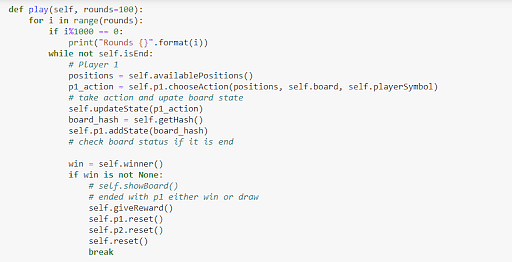

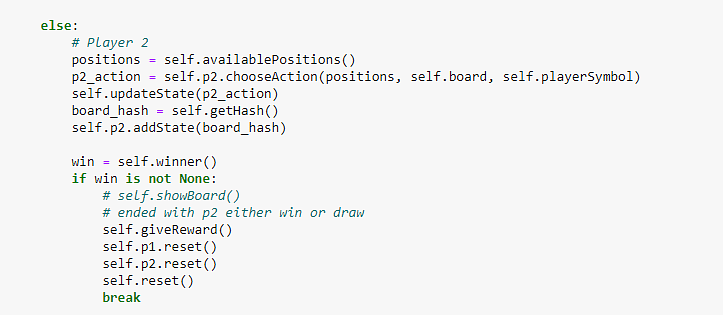

Let us define the main play function between two opponents we will use this to train the model:

Figure 18: Training function

Figure 19: Training function continued

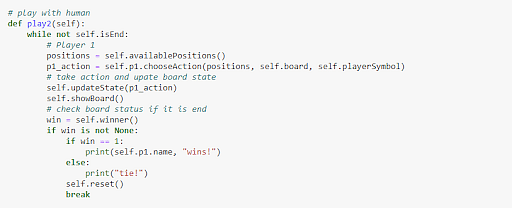

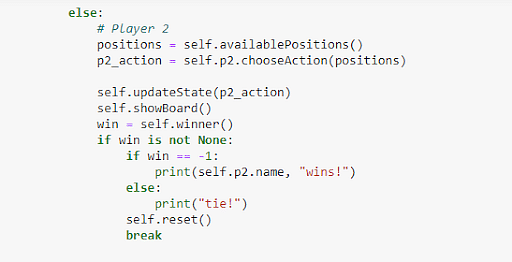

We now define a function to play the actual game:

Figure 20: Playing Function

Figure 21: Playing Function continued

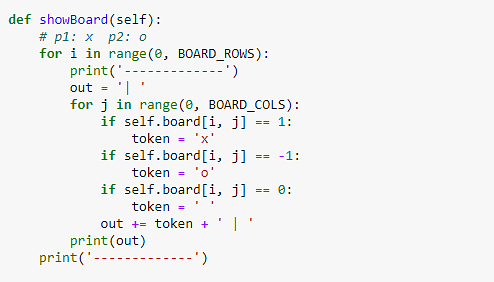

The below function will draw a board on to the terminal.

Figure 22: Drawing the playing board

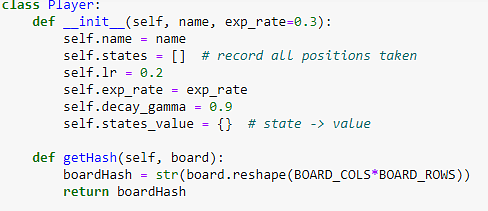

Let’s define a player class to instantiate players and define the policy. This will be used to train the model.

Figure 23: Defining the player

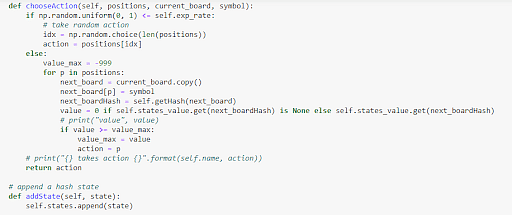

Choosing actions for the player function and defining state :

Figure 24: Choosing action for the player and defining the state

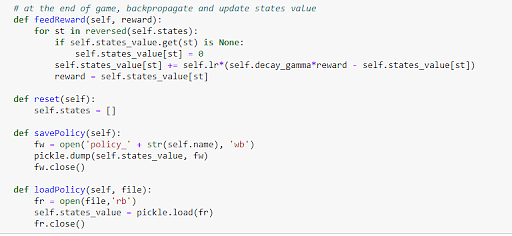

We also define the reward function and save the policy.

Figure 25: defining reward and saving policy

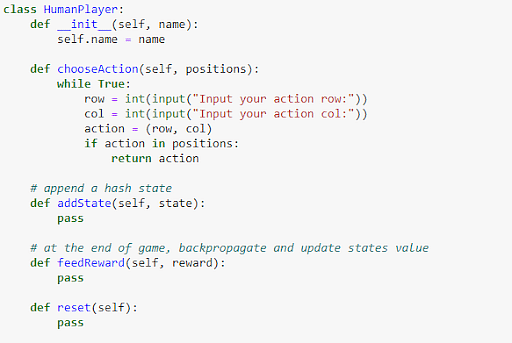

Now let’s define a class that will be called when the player has to take an action.

Figure 26: Function for a human player to play

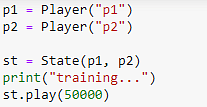

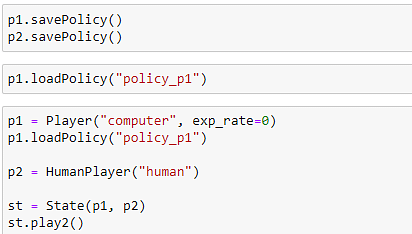

Let us define our machine players and train the model using the policy we made.

Figure 27: Training our model

Let’s save the policy.

Figure 28: Saving the policy

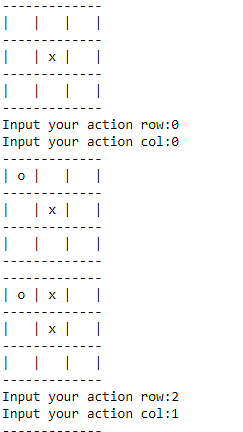

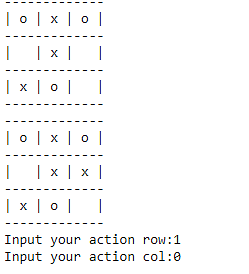

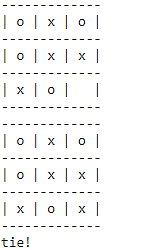

Now, let’s play Tic Tac Toe! The below figure shows a game that ended in a tie:

Figure 29: Playing Tic- Tac- Toe against the computer

The game can have three results, machine winning, human winning, or a tie. As you can see, no data was used to train the model instead the model was trained using the policy created by us. Games like online chess and even self-driving cars etc are trained in this way.

Your AI/ML Career is Just Around The Corner!

AI Engineer Master’s ProgramExplore Program![]()

Conclusion

In this article titled ‘What is Reinforcement Learning? The best guide to Reinforcement Learning’, we first answered the question of why do we need Reinforcement learning and ‘What is Reinforcement Learning?’. We also looked at the differences between the sub-branches of machine learning. We then looked at some common terms associated with reinforcement learning. We then moved on to Markov’s Decision Process, a policy of reinforcement learning, and finally implemented a Tic-Tac-Toe game which we trained using reinforcement learning in python.

We hope this article answered any question which was burning in the back of your mind. Do you have any doubts or questions for us? Mention them in this article’s comments section, and we’ll have our experts answer them for you as the earliest!

Looking forward to becoming a Machine Learning Engineer? Check out Simplilearn’s Caltech Post Graduate Program in AI & ML and get certified today.