Microsoft Corp. today announced the release of new artificial intelligence-powered capabilities for developers to build, customize and deploy autonomous AI agents and apps.

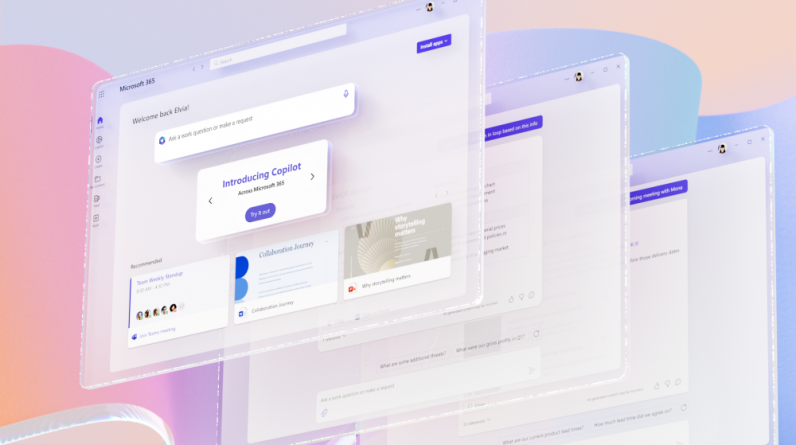

Unveiled at Ignite 2024, Microsoft’s annual conference for IT professionals, Copilot Studio autonomous agents are now in public preview, enabling developers to build AI agents that perform advanced business logic tasks based on conversational workflows. Microsoft also introduced a number of major Power Platform updates aimed at improving the developer experience and strengthening security and governance across the platform, including for AI agents.

Microsoft first debuted Copilot Studio in early access at Ignite 2023, and since then, according to the company, it has become a mainstay for organizations building AI-powered applications.

“Over the past year, we’ve witnessed how reimagining business processes with Copilot and agents has revolutionized what we build, including intelligent apps and AI agents,” said Charles Lamanna, corporate vice president of business and industry copilot at Microsoft. “Additionally, enhancing efficiency through automation and AI has fundamentally changed how we build, unlocking even more rapid low-code development.”

Agents built with Copilot Studio can operate without human oversight to dynamically plan, learn from processes, understand business logic, adapt to changing contexts and execute multi-step processes. Developers can quickly build AI agents by writing simple English-language prompts that describe the agents’ intents, goals and processes, and by giving the agents the tools, third-party application interfaces and data they need to get the work done.

AI agents built by anyone and everyone

AI agents can be triggered through conversational chats, by asking them to take action, or by changes in data and other external events, such as a change in inventory or an incoming email. For example, a developer could customize an AI agent to produce weekly reports that summarize team meetings, track code participation and chart productivity, while also contacting individual team members to check in on their own activity. Another agent could help a salesperson follow up on leads arriving by email by checking internal databases to surface networking opportunities and helping write replies quickly.

Microsoft makes this simpler with what it calls the agent library, also in public preview, where users can get a head start by choosing prebuilt agents based on common scenarios and triggers. These agents can respond to signals across the business to initiate tasks like those described above.
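The trigger model described above, where agents wake up on a chat request, a data change or an incoming email, is essentially event dispatch. The sketch below is purely illustrative and assumes nothing about how Copilot Studio implements triggers: the `Event` and `AgentRegistry` types, the event names and the two example agents are all hypothetical, and the restock threshold of 10 is an arbitrary placeholder.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    # Hypothetical event kinds, e.g. "chat", "inventory_change", "email_received".
    kind: str
    payload: dict


Handler = Callable[[Event], str]


@dataclass
class AgentRegistry:
    """Hypothetical registry mapping event kinds to the agents that react to them."""
    handlers: Dict[str, List[Handler]] = field(default_factory=dict)

    def on(self, kind: str, handler: Handler) -> None:
        # Subscribe an agent to one kind of event.
        self.handlers.setdefault(kind, []).append(handler)

    def dispatch(self, event: Event) -> List[str]:
        # Run every agent subscribed to this event kind; unknown kinds are ignored.
        return [handler(event) for handler in self.handlers.get(event.kind, [])]


def lead_followup_agent(event: Event) -> str:
    # Hypothetical agent: reacts to an incoming sales-lead email.
    sender = event.payload.get("from", "unknown")
    return f"drafted reply to lead {sender}"


def restock_agent(event: Event) -> str:
    # Hypothetical agent: reacts to an inventory change (threshold is arbitrary).
    item = event.payload["item"]
    quantity = event.payload["quantity"]
    return f"restock {item}" if quantity < 10 else f"{item} stock ok"


registry = AgentRegistry()
registry.on("email_received", lead_followup_agent)
registry.on("inventory_change", restock_agent)

if __name__ == "__main__":
    print(registry.dispatch(Event("email_received", {"from": "alice@example.com"})))
    # prints ['drafted reply to lead alice@example.com']
    print(registry.dispatch(Event("inventory_change", {"item": "widgets", "quantity": 3})))
    # prints ['restock widgets']
```

Keeping the trigger-to-agent mapping in one registry mirrors the idea of choosing prebuilt agents by trigger: adding a new scenario means registering another handler rather than changing the dispatch logic.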

To make this even easier, the company has brought agent building into Power Apps, Microsoft’s suite of cloud-based tools for building business apps. Users with little or no coding experience can already use Power Apps to build applications with drag-and-drop features and prebuilt templates, and now they’ll have the full power of autonomous agents at their fingertips as well.

Under the hood, Power Apps uses an AI-enabled capability called plan designer, now in public preview, which allows users to describe their business processes in plain English. They can also provide diagrams, screenshots, other images and documents. Plan designer then helps them develop applications, AI agent automations and more without having to start from a blank slate.

Using the information the user provides, Copilot will design user roles and requirements, offer real-time suggestions and automations through the conversational interface, and let the user guide the creation of the application at their own pace. That means users can start from their own business context and logic and approach the problem as they would if they were working with a consultant to develop an application.

Through the Power Platform Admin Center, developers now have access to Managed Security and Managed Operations, features that provide greater control over governance and security. These new capabilities bring advanced threat protection, proactive alerts and disaster recovery to Power Apps, Power Automate, Copilot Studio and Dynamics 365, and they extend to the security and control of autonomous AI agents as well.

Generative AI model evaluation and benchmarking

Microsoft also announced enhanced evaluation capabilities for generative AI models in Azure AI Foundry, a new unified AI development studio for tools and services that was also announced today. The enhancements are meant to help developers understand the models their AI-driven applications run on and make sure those models remain accurate and trustworthy.

Evaluating and benchmarking AI models is essential to making sure they remain performant, accurate and secure. That’s because generative AI applications can be error-prone, drift out of alignment with verifiable data, become incoherent or hallucinate. Any number of things can go wrong with large language models, so it’s necessary to evaluate potential risks proactively during development to keep issues from causing trouble down the line.

Evaluations have been expanded to allow AI engineers and developers to evaluate and compare models using business data. It’s easy to use public data for comparisons and benchmarks, but that still leaves many engineers wondering how models will perform in their specific use case.

Now, enterprise customers can use their own business context datasets to see how a model will perform in circumstances typical of their everyday use. That will allow them to compare different model customizations and evaluate how changes made during fine-tuning and development affect quality, safety and accuracy.

Coming soon to public preview, Azure AI Foundry will provide risk and safety evaluations for images and other multimodal content produced by AI models. That way, enterprise customers can better understand the frequency and potential severity of harmful content in human- and AI-generated outputs and devise guardrails to handle it. For example, they can assess how well a model avoids producing content it shouldn’t, such as violent images generated from text prompts or violent text captions generated from images.

Azure AI Foundry provides AI-assisted evaluators to run these evaluations at large scale, allowing organizations to grade and assess many models at once against target metrics. Metrics could include whether generated outputs contain hateful, unfair, violent, sexual or self-harm-related content. They could also cover protected materials whose reproduction represents a security leak or infringement risk.

Knowing how a model will behave before fine-tuning it or moving it further along the development pipeline lets AI engineers and developers take steps such as applying content filters to block harmful content or adding other guardrails. After making these changes, they can rerun the evaluations and compare scores to see which models performed better and decide which ones to use.
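The evaluate, add guardrails, re-evaluate and choose loop described above can be sketched in a few lines. This is not the Azure AI Foundry API: the keyword blocklist is a crude, hypothetical stand-in for AI-assisted evaluators, and `model_a`, `model_b`, the prompts and the `[blocked]` filter are invented for illustration. The score here is a simple defect rate, the fraction of outputs flagged in any harm category.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for AI-assisted evaluators: a tiny per-category blocklist.
# Real evaluators are far more capable; this only illustrates the scoring loop.
HARM_TERMS = {
    "violence": {"attack", "kill"},
    "hate": {"slur"},
}


def evaluate(output: str) -> Dict[str, bool]:
    # Naive whitespace tokenization; returns a flag per harm category.
    words = set(output.lower().split())
    return {category: bool(words & terms) for category, terms in HARM_TERMS.items()}


def defect_rate(outputs: List[str]) -> float:
    # Fraction of outputs flagged in at least one category (lower is better).
    if not outputs:
        return 0.0
    flagged = sum(any(evaluate(o).values()) for o in outputs)
    return flagged / len(outputs)


def identity(output: str) -> str:
    # No guardrail applied.
    return output


def content_filter(output: str) -> str:
    # Hypothetical guardrail: replace any flagged output with a placeholder.
    return "[blocked]" if any(evaluate(output).values()) else output


def compare(models: Dict[str, Callable[[str], str]], prompts: List[str],
            guardrail: Callable[[str], str] = identity) -> Dict[str, float]:
    # Run every model over the same business prompts and score each one.
    return {name: defect_rate([guardrail(model(p)) for p in prompts])
            for name, model in models.items()}


# Hypothetical models and a hypothetical "business context" dataset.
def model_a(prompt: str) -> str:
    return "we will attack their market share" if "competitor" in prompt else f"ok: {prompt}"


def model_b(prompt: str) -> str:
    return f"ok: {prompt}"


prompts = ["summarize q3 sales", "draft reply to lead", "describe the competitor"]
models = {"model_a": model_a, "model_b": model_b}

before = compare(models, prompts)                         # model_a ~0.33, model_b 0.0
after = compare(models, prompts, guardrail=content_filter)  # both 0.0 once filtered
best = min(before, key=before.get)                        # "model_b"
```

Scoring every model on the same prompts, then rerunning with the guardrail in place, shows both how risky each model is on its own and whether the filter closes the gap, which is the kind of comparison the evaluations are meant to support.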

Images: Microsoft
