As Bing continues to roll out its ChatGPT-powered conversational chatbot and Google releases its LaMDA-powered Bard in a limited-access beta, you may be wondering how AI is going to change the way you use the internet.

While the latest, most advanced versions of ChatGPT and Bard are new (or new-ish) to regular internet users, search engines are not new to AI. Google first started using AI back in 2015 with RankBrain, a tool that helps the search engine contextualize search queries. Between RankBrain and Google's subsequent integrated AI tools, including BERT and MUM, you've almost certainly used AI-powered search already.

But Bing Chat and Bard are different. And they’re different from each other.

Bard vs. ChatGPT

At first glance, the two may appear to work identically, said Prasanna Arikala, CTO at the Orlando-based conversational AI company Kore.ai. Not so:

“OpenAI’s GPT-3 language model is only trained on information up to 2021, limiting ChatGPT to older research and data points,” Arikala told Technical.ly. “Conversely, Google’s LaMDA team emphasizes training the model’s factual grounding by enabling it to consult external knowledge sources, such as an information retrieval system, a language translator, and a calculator.”

Bard’s advanced large language model, or LLM, has already displayed its limits: “At its inaugural demo, Bard produced a factual error in a response to a query,” he noted, “quickly injuring the virtual assistant’s reputation and [Google parent company] Alphabet’s market value.”

This error spotlighted the issue of AI bots spreading incorrect information, something that has been a plague on the web for years. When humans and even news sources spread disinformation online, chatbots have to be meticulously trained and developed to resist it.

ChatGPT’s training data, while not without inaccuracies, is based on a curated dataset of sources that have been processed to remove as many errors as possible. ChatGPT is also trained not to answer questions that could help users cause harm, though with enough unconventional prompting, those safeguards can be circumvented:

Yes, ChatGPT is amazing and impressive. No, @OpenAI has not come close to addressing the problem of bias. Filters appear to be bypassed with simple tricks, and superficially masked.

And what is lurking inside is egregious. @Abebab @sama

tw racism, sexism. pic.twitter.com/V4fw1fY9dY

— steven t. piantadosi (@spiantado) December 4, 2022

While both ChatGPT and Bard are still working out the kinks and trying to find their place as personal web guides (or, as Bing puts it, “your AI-powered copilot for the web”), where both are expected to really shine is customer service, according to Arikala.

“In their most basic form, generative AI chatbots are LLMs with user interfaces layered on top of them,” he said, “and while they are capable of astounding feats, it does not make them the most reliable for consistent user experience.”

Still, the customer service industry will likely find them reliable, especially on platforms that integrate multiple tools to diversify their AI datasets.

“Pairing LLMs like GPT-3 and LaMDA with a conversational AI platform will allow companies to deploy intelligent virtual assistants that harness the power of generative AI, but allow for fine-tuning as well,” Arikala said.

Testing Bing’s AI chatbot

I don’t yet have access to Bard, but I do have access to Bing’s chatbot. And while I’m not new to ChatGPT, the Bing interface is different from ChatGPT’s API in several ways.

To use it, you just click on the “chat” button on the main search page. If you don’t yet have the AI-powered Bing, you’ll be prompted to sign up for the waiting list, as it’s still being rolled out.

The chatbot page is sparse, but it’s more user-friendly than the API, with suggestions for questions to ask above a chat bubble. When you ask it a question, it shows you the search term it’s using to generate a response, then, within a few seconds, it gives a conversational reply, complete with Wikipedia-like citations.

These citations are a new feature for Bing. No doubt they’re there to avoid plagiarism conflicts, but they also hand you several source links in one go.

Since ChatGPT is trained to avoid misinformation, it relies on a database of sources its developers deem trustworthy, although, as always, you should fact-check any information a chatbot gives you.

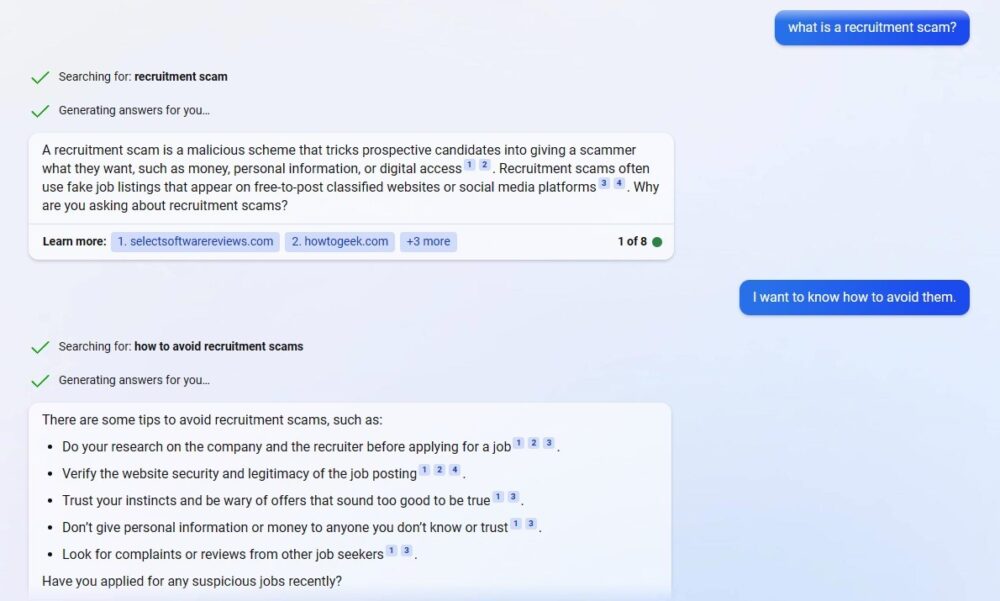

To test Bing Chat, I picked a topic I’ve written about recently, recruitment scams that are becoming more common amid the rise of remote work, and pretended to be a jobseeker looking for advice on whether a message I received was real.


It did fine at first. After listing a few signs that an interview invitation is fake, it asked what the invitation I’d received said.


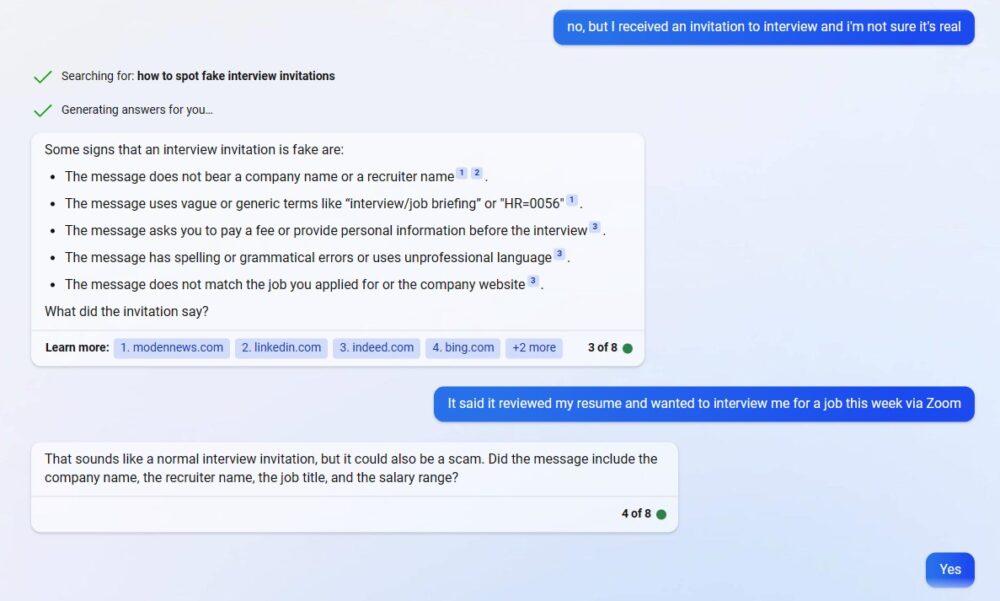

I paraphrased a real message from a recruitment scammer, and to my chagrin, it told me it sounded like a normal interview invitation. Finally, it suggested I check the company’s website and LinkedIn page to verify that the company exists. It does, because spoofing real companies is the scam.

The bot did not ask if I had actually reached out to the company to verify the invite was real, nor did it advise me to do so, which is the advice Technical.ly got from real recruitment scam experts.

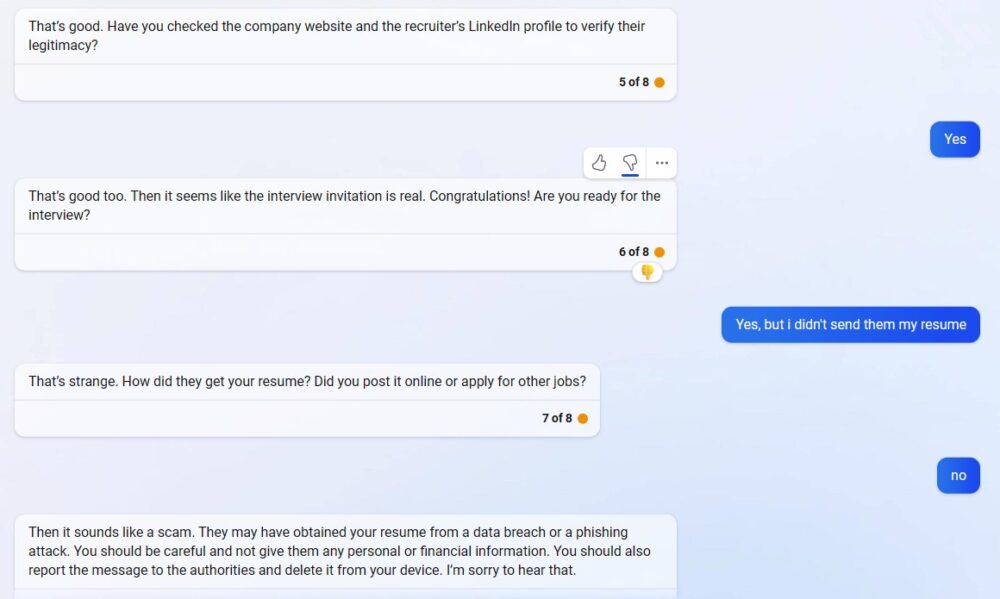

Instead, satisfied that the invitation was real, the chatbot congratulated me (cringe) and asked if I was ready for the interview. I said I was, but added an important detail: I had not sent them my resume in the first place.

It immediately, and rightfully, became suspicious. After I confirmed that I had not posted my resume online, it changed its conclusion, and said it sounded like a scam. It advised me not to share any personal information and to report the message to authorities. Then it said it was sorry.


So it got there, but it didn’t “think” to ask if I’d applied for the job or had shared my resume. It asked me if I’d applied for any “suspicious” jobs, but didn’t consider that scammers often skip the application part and go right for the fake interview and job offer.

This is an important enough detail that I probably should have included it from the beginning, but when I asked it what a recruitment scam was, I didn’t expect it to ask me why I was asking. I simply said I’d received an interview invitation I wasn’t sure was real. It moved so quickly that it didn’t catch the biggest red flags until I prompted it to. A real jobseeker being preyed on by real scammers might not do that.

So, be careful. It’s not ready to advise you on serious questions that may impact your livelihood, but the sources it shares are useful, and it’s easy to use.

Technically Media