In a world where a casual prompt like “a cat surfing on a rainbow” can produce a jaw-dropping video clip, artificial intelligence is redefining creativity. From Hollywood visual effects to viral social media clips, AI-generated videos are everywhere, blending realism with imagination. Tools like OpenAI’s Sora, Google DeepMind’s Veo 3, and Runway’s Gen-4 have made video generation accessible to everyone, from professional filmmakers to hobbyists. But how do these models transform a simple text description or an image into a moving, lifelike video? The answer lies in a fascinating blend of neural networks, massive datasets, and cutting-edge computation. Let’s dive into the tech powering this creative revolution.

Survey

Survey

✅ Thank you for completing the survey!

Also read: The Era of Effortless Vision: Google Veo and the Death of Boundaries

AI videos: Diffusion models

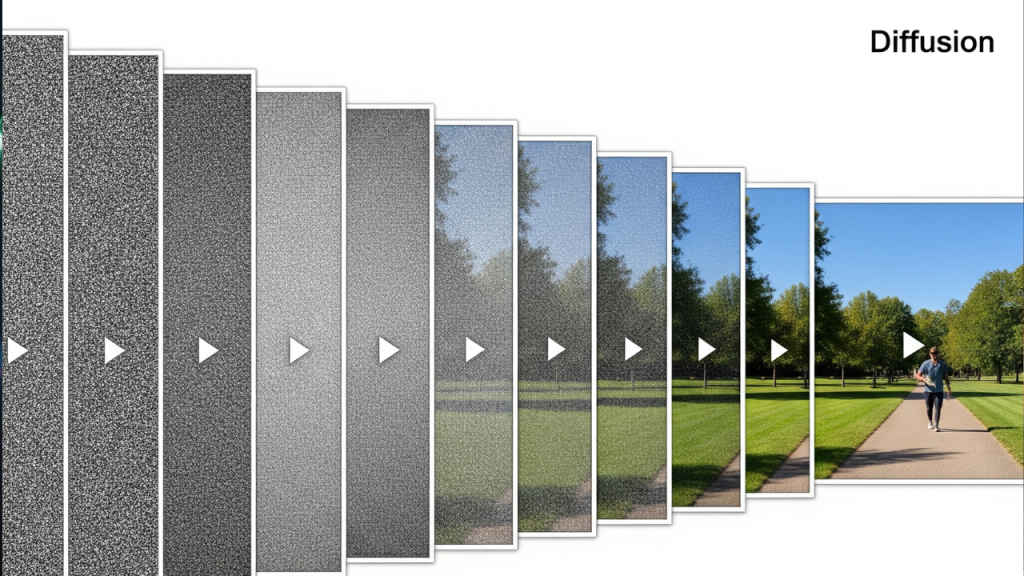

At the heart of AI video generation is the diffusion model, a neural network that works like a digital artist with a knack for cleaning up messes. Imagine taking a clear image and splattering it with random pixels, over and over, until it’s nothing but static, like snow on an old TV screen. A diffusion model is trained to reverse this process, starting with pure noise and gradually sculpting it into a recognizable image. It learns this trick by studying millions of images at various stages of pixelation, mastering how to peel away the noise to reveal something coherent.

For videos, the challenge scales up. Instead of one image, the model handles a sequence of images, video frames, that must flow seamlessly. Diffusion models generate these frames by iteratively refining noisy data, ensuring each frame aligns with the next to create smooth motion.

Processing millions of pixels across multiple frames is computationally expensive, guzzling energy like a fleet of supercomputers. To make this feasible, most video generation models use latent diffusion. Here, raw video data (and text prompts) is compressed into a latent space, a mathematical representation that captures only the essential features of the data, discarding the rest. Think of it like streaming a movie online: the video is sent in a compressed format to save bandwidth, then decompressed for viewing.

In latent diffusion, the model works its magic on these compressed representations, refining them step by step into a sequence of frames that match the user’s prompt. Once complete, the compressed video is decompressed into a watchable clip. This approach drastically reduces the computational load, making video generation more efficient, though it still demands far more energy than generating text or static images.

AI videos:Transformers

Creating a video isn’t just about generating individual frames; those frames need to tell a consistent story. Objects must persist, lighting must stay steady, and motion must feel natural. This is where transformers come in, the same tech that powers large language models like GPT-5. Transformers excel at handling sequences, whether they’re words in a sentence or frames in a video.

To make this work, AI models like Sora chop videos into chunks across both space and time, treating them like pieces of a puzzle. As Tim Brooks, a lead researcher on Sora, explains, it’s “like if you were to have a stack of all the video frames and you cut little cubes from it.” Transformers ensure these chunks align, maintaining continuity so objects don’t vanish or flicker unnaturally. This also allows models to train on diverse video formats, from TikTok-style vertical clips to widescreen cinematic footage, enabling them to generate videos in any aspect ratio.

So, how does the AI know to create a video of a “unicorn eating spaghetti” instead of, say, a dog chasing its tail? The answer lies in a second model, often a large language model (LLM), that pairs text prompts with visual data. During training, these models are fed billions of text-image or text-video pairs scraped from the internet, learning to associate descriptions with visuals. When you enter a prompt, the LLM guides the diffusion model, nudging it toward frames that match your request.

Also read: Netflix and Disney explore using Runway AI: How it changes film production

This process isn’t flawless. The internet’s data is messy, often skewed by biases or low-quality content, which can creep into the generated videos. Creators have raised concerns about their work being used without consent, and the resulting videos can sometimes reflect online distortions, like stereotypes or unrealistic aesthetics. Still, the ability to translate a text prompt or even a single image into a dynamic video is a leap forward in AI creativity.

AI videos: Sound in the silent era

Until recently, AI-generated videos were silent, like early black-and-white films. Google DeepMind’s Veo 3 changed that in 2025, introducing synchronized audio – think lip-synched dialogue, crashing waves, or ambient city noise. The trick? Compressing audio and video into a single data unit within the diffusion model, so both are generated in lockstep. This ensures the sound of a galloping horse matches its hoofbeats on screen, creating a more immersive experience.

AI video generation is a technological marvel, but it comes with a catch: it’s an energy hog. Even with latent diffusion’s efficiency, the sheer volume of computation required to process video frames dwarfs that of text or image generation. Google recently released data showing just how much energy a single AI prompt can consume, highlighting the environmental challenge.

Yet, the future is bright. Diffusion models are proving versatile beyond video. Google DeepMind is experimenting with using diffusion models to generate text, potentially making large language models more efficient than their transformer-based counterparts. As these models evolve, we might see AI that’s not only more creative but also leaner on resources.

A New Era of Creativity

From Netflix’s AI-enhanced visual effects in The Eternaut to viral clips flooding social media, AI video generation is transforming how we create and consume media. It’s not perfect – prompts can be hit or miss, and the tech sometimes produces “AI slop” that clogs feeds with low-quality fakes. But for every misstep, there’s a stunning clip that pushes the boundaries of what’s possible.

As these tools become more accessible via apps like ChatGPT or Gemini, anyone can become a filmmaker. Whether you’re crafting a sci-fi epic or a quirky meme, AI video models are democratizing creativity. Just don’t be surprised if your next viral video takes a few tries and a lot of energy to get right.

Also read: OpenAI and AI filmmaking: Why Critterz could change animation forever

![]() Follow Us

Follow Us

Vyom Ramani

A journalist with a soft spot for tech, games, and things that go beep. While waiting for a delayed metro or rebooting his brain, you’ll find him solving Rubik’s Cubes, bingeing F1, or hunting for the next great snack. View Full Profile