If someone orders shapewear from Spanx LLC’s website today, a humanoid robot may well handle that package. At a GXO Logistics Inc. distribution center in Georgia, Agility Robotics Inc.’s bipedal machines move merchandise with mechanical precision. And at BMW Group’s Spartanburg plant, Figure 02 robots have delivered what Figure says is a 400% speed improvement in inserting sheet metal parts into chassis assemblies.

These aren’t laboratory demonstrations or venture capital fever dreams. They’re revenue-generating deployments marking a fundamental shift in how machines interact with our physical world.

The era of intelligent robots has arrived. Advanced hardware now connects artificial intelligence models to the real world through video, audio and sensor arrays. This lets AI control everything from autonomous pallet jacks that load and unload cargo to humanoid robots capable of fine motor movements and tool manipulation. The transformation extends far beyond factory floors, encompassing autonomous vehicles navigating city streets, smart buildings that respond to occupants and agricultural robots that intelligently tend crops.

This revolution is driven by the emergence of what’s being called “physical AI”: the integration of artificial intelligence into physical systems so robots and machines can perceive, reason about and adapt to the real world in real time. Unlike traditional automation that follows rigid programming, physical AI systems combine sophisticated algorithms with sensors and actuators to navigate unpredictable environments and handle variable tasks.

“We’re getting really good at building the body,” said Tye Brady, chief technologist for Amazon Robotics. “Now we’re bringing the mind to the body through generative AI.”

To understand how physical AI is reshaping entire industries, and to gauge when the next leap could arrive at scale in factories, businesses and homes, SiliconANGLE interviewed leading experts and executives. The conversations traced the technology’s transition from experimental curiosity to commercial necessity.

“Physical AI has reached a critical inflection point where technical readiness aligns with market demand,” said James Davidson, chief artificial intelligence officer at Teradyne Robotics, a leader in advanced robotics solutions. “The market dynamics have shifted from skepticism to proof. Early adopters are reporting tangible efficiency and revenue gains, and we’ve entered what I’d characterize as the early-majority phase of adoption, where investment scales dramatically.”

That dramatic scaling is reflected in venture capital flows. According to Mind The Bridge’s latest analysis, Silicon Valley has redirected its investment thesis almost entirely toward artificial intelligence, with 93% of its venture capital now flowing into AI-related startups. Physical AI companies attracted more than $7.5 billion in 2024 alone, and mega-rounds became the norm. Jeff Bezos-backed Physical Intelligence raised $400 million at a $2.4 billion valuation, Figure AI Inc. secured $675 million and Skild AI closed a $300 million round.

The momentum only accelerated in 2025. Figure AI raised an additional $1 billion in September, and Physical Intelligence returned to raise $600 million more. In October, General Intuition PBC, a startup developing artificial intelligence models that can navigate three-dimensional environments, raised $133.7 million. Project Prometheus, a startup co-led by Bezos and serial entrepreneur Vik Bajaj and formed to develop “AI for the physical economy,” raised a colossal $6.2 billion.

According to financial research firm Crunchbase, more than $6 billion in capital has already flowed into robotics companies and startups in the first seven months of 2025. At this pace, Crunchbase predicts this year’s funding will eclipse 2024’s levels.

Photo: Agility Robotics

Virtual worlds, physical impact: The rise of foundation models

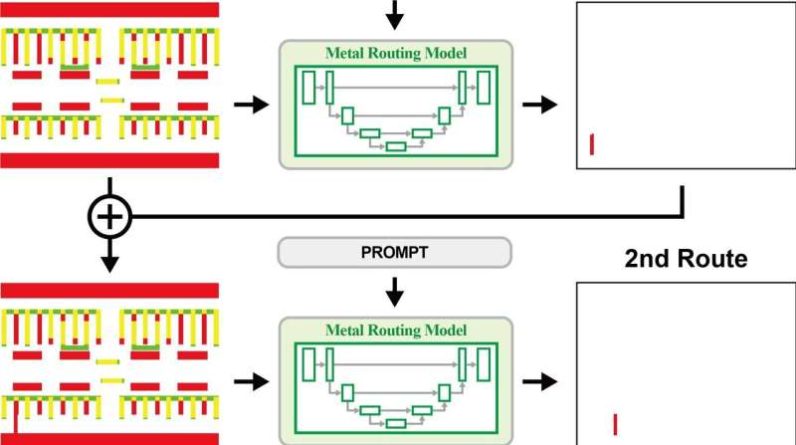

The rapid evolution of physical AI has been spurred by the development of robotics foundation models, or RFMs. These are AI software “brains” that take in information and use reasoning to inform robot actions executed in the real world.

These models are often built atop vision-language models: pretrained transformer models with multimodal capability that perceive the world and allow robots to recognize objects and understand physics. RFMs come in a wide variety, from Physical Intelligence’s general-purpose π0 model for diverse robots to Nvidia Corp.’s GR00T, a universal model for humanoids.

The technical breakthrough behind these models was the vision-language-action model, or VLA. Google DeepMind’s Robotics Transformer 2, or RT-2, developed in 2023, set the paradigm for intelligent robotics by extending two state-of-the-art foundation models, PaLI-X and PaLM-E. VLAs are trained on vast datasets of robotic actions alongside web-scale vision and language data, which allows them to generalize to new tasks.

VLAs enable robots to translate natural-language prompts, such as “Please pick up the trash and throw it away,” into actions. A model’s training data provides two things: a corpus of knowledge about what things look like, and a set of robotic trajectories for carrying out actions. Even if a robot wasn’t specifically trained to discard trash, it could still use its cameras to identify items as “trash.” It could then grasp them, locate a suitable bin and complete the disposal.
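The RT-2 paper describes how a language model can emit motion at all. It discretizes each continuous action dimension into 256 bins, so actions become integer tokens the transformer can output just as it outputs words. The sketch below shows only that encoding and decoding step, under stated assumptions:

- The normalized range of [-1, 1] is hypothetical.
- The 7-value action layout (three position deltas, three rotation deltas, gripper) is hypothetical.
- The function names are illustrative, not any library’s API.

```python
"""Sketch of VLA-style action tokenization (ranges and layout are assumptions)."""
from typing import List

NUM_BINS = 256          # RT-2 reported discretizing each action dimension into 256 bins
LOW, HIGH = -1.0, 1.0   # hypothetical normalized range for every action dimension


def action_to_tokens(action: List[float]) -> List[int]:
    """Map each continuous action value to the index of its nearest bin."""
    tokens = []
    for value in action:
        clipped = min(max(value, LOW), HIGH)        # out-of-range values are clamped
        frac = (clipped - LOW) / (HIGH - LOW)       # position within the range, 0..1
        tokens.append(round(frac * (NUM_BINS - 1)))  # bin index 0..255
    return tokens


def tokens_to_action(tokens: List[int]) -> List[float]:
    """Invert the mapping: turn bin indices back into continuous commands."""
    return [LOW + (t / (NUM_BINS - 1)) * (HIGH - LOW) for t in tokens]


if __name__ == "__main__":
    # Hypothetical command: dx, dy, dz, droll, dpitch, dyaw, gripper
    action = [0.1, -0.25, 0.0, 0.5, -1.0, 1.0, 0.8]
    tokens = action_to_tokens(action)
    print(tokens)
    print(tokens_to_action(tokens))
```

The round trip is lossy by at most half a bin, about 0.004 in normalized units here. That loss is the trade-off for letting a single model output language and motion tokens through the same interface.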

This development marked a fundamental shift from rules-based guidance, in which robotic limbs triggered preprogrammed movements, to machine reasoning that can determine and execute actions on its own.

A new, specialized class of AI model has emerged to train and validate these systems: world foundation models, or WFMs. WFMs serve two primary functions for robotics AI. First, they let engineers rapidly generate vast synthetic datasets to train robots on actions they haven’t yet seen. Second, they let engineers test those robots in virtual environments before real-world deployment.

WFMs allow developers to create virtual training grounds that mimic reality through “digital twins” of environments. Within these simulated scenes, robots learn to navigate real-world challenges safely and far faster than real-world practice would allow.

Examples include:

- Nvidia’s Cosmos platform, which provides WFMs for robots and autonomous vehicles.
- Waabi Innovation Inc.’s Waabi World, a WFM designed to teach autonomous truck AI models how to navigate diverse road conditions safely.
- World Labs Technologies’ Marble, a recently launched, full-fledged virtual world generator.
- Google DeepMind’s Genie 3, an AI model capable of producing interactive virtual worlds.

“Cosmos bridges this gap by exponentially expanding existing data and addressing the critical sim-to-real challenge — the subtle but significant differences between virtual training and the complexity of the physical world,” said Spencer Huang, product manager of robotics at Nvidia.

AI pioneer Yann LeCun left Meta Platforms Inc. to launch a startup developing world models. He has said he believes such models will advance the state of the AI industry further than Meta’s focus on language models.

The ability to generate vast quantities of physics-accurate training data represents a watershed moment for the industry. Where traditional robotics required painstaking real-world data collection — expensive, dangerous and limited in scope — WFMs can produce millions of training scenarios in the time it once took to gather hundreds.
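One long-standing way simulation pipelines turn a single digital twin into many distinct training scenarios is domain randomization. Each episode samples physical and visual parameters from ranges, so a policy trained across that spread is less brittle when it meets the real world. Examples of such parameters are friction, object mass, lighting and camera pose.

This is a classic simulation technique, not a description of how Cosmos or Waabi World work internally. The parameter names and ranges below are hypothetical.

```python
"""Domain-randomization sketch: many scenario variants from one scene (assumed ranges)."""
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Scenario:
    """One randomized variant of a simulated scene (fields are hypothetical)."""
    friction: float
    object_mass_kg: float
    light_lux: float
    camera_jitter_deg: float


# Hypothetical (low, high) ranges; real pipelines tune these per task and robot.
RANGES: Dict[str, Tuple[float, float]] = {
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.1, 2.5),
    "light_lux": (100.0, 1500.0),
    "camera_jitter_deg": (0.0, 3.0),
}


def sample_scenarios(n: int, seed: int = 0) -> List[Scenario]:
    """Draw n scenarios, sampling every parameter uniformly from its range."""
    rng = random.Random(seed)  # a fixed seed makes each batch reproducible
    return [
        Scenario(**{name: rng.uniform(low, high) for name, (low, high) in RANGES.items()})
        for _ in range(n)
    ]


if __name__ == "__main__":
    batch = sample_scenarios(10_000)
    print(len(batch), batch[0])
```

Each sampled scenario would then be handed to a simulator or world model to render and roll out. Seeding the generator means a failure seen in training can be regenerated exactly for debugging.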

“We build the metaverse for self-driving,” explained Waabi Chief Executive Raquel Urtasun. “With Waabi World being that world model that is building in a way also that is grounded in reality, which is very important for physical AI versus hallucinations and things like this that you don’t want on your simulation system.”

Waabi says its approach has achieved 99.7% simulation realism. If that figure holds up, it suggests virtual training environments can now mirror the physical world with near-perfect fidelity.

WFMs have also expanded the intelligent robotics industry beyond robotic limbs, drones and humanoids. Since a WFM creates a “digital twin” of the real world to simulate and understand real environments, it can train any type of robot, even systems not traditionally considered part of robotics, such as internet of things networks, smart buildings and intelligent cities.

Archetype AI Inc., a company developing foundation models for physical AI, created Newton, a model designed to understand and reason about the physical world in real time. Newton fuses real-time sensor data with natural language to deliver insights about the world. Its applications include:

- Manufacturing, where it monitors employee safety and predicts equipment maintenance needs.
- Analyzing traffic intersections to design safer crosswalks.
- Improving safety on large-scale construction sites.

Image: Nvidia

Growing capability leads to commercial applications

The past two years have produced the first commercial deployments of humanoid robots, including at BMW factories and GXO Logistics warehouses. In June 2024, Agility Robotics’ Digit became what was billed as the first humanoid robot deployed in commercial operations, formally entering the “workforce.”

Despite grabbing a lot of headlines, humanoid robots represent only a small fraction of AI robotics deployments. For now, collaborative robots, robotic arms and autonomous mobile robots are transforming warehouse and factory settings. The leading example is Amazon.com Inc., which uses intelligent robots across its warehouses. Its fleet of more than 750,000 robots includes:

- Vulcan, an AI-driven robotic arm with a sense of touch.
- Cardinal, which stacks packages.
- Proteus, an autonomous mobile robot that moves carts.

“It was possible to do this before… but you would have needed a highly trained computer model,” said Teradyne’s Davidson. “Now you can ask a physical or generative AI model to create that for you with no training whatsoever.”

Morgan Stanley analysts estimated that Amazon’s robotics developments could save the company as much as $10 billion annually by 2030. That’s before counting the value of the data constantly fed back into its physical AI models, a stream that may be unmatched in the industry. A report from market analyst Grand View Research estimated the global artificial intelligence in robotics market at $12.8 billion in 2023. It projected the market would reach $124.8 billion by 2030.

Industrial robots held 68% of the AI in robotics market in 2024, with an installed base of 4.28 million units in factories worldwide. Medical and healthcare robots are the fastest-growing segment, with a predicted growth rate of 26%. Computer vision-assisted surgical systems and autonomous hospital logistics robots are advancing rapidly.

Yet the reality remains mixed. Cedric Vincent, head of the software development lab at Tria Technologies GmbH, offered a sobering assessment: “While you may see videos online of industrial robots moving boxes around… when it comes to humanoid robots replicating human activities, if you watch carefully, you see them fail much of the time.”

“Robots can’t yet pack products” consistently, because that requires judgment robotic systems don’t yet have, said Igor Pedan, head of robotics induction at Amazon’s robotics unit. That’s why single robotic arms, or groups of them, rather than humanoid robots, represent Amazon’s state of the art today.

For powerful AI-capable systems, Vincent added, “the demand by far is for its application with industrial robots, and they are a nice proof of concept. You see that with Nvidia, but there is nothing ‘real’ yet.” Indeed, theCUBE Research, SiliconANGLE’s sister market research firm, notes that because physical AI models don’t yet have the enormous corpus of data that large language models have, they’re probably at least two to three years behind in terms of analogous capabilities.

This gap between demonstration and deployment explains why, as Davidson noted, enterprises should “focus on what’s available today versus what’s still emerging.”

Autonomous vehicles have also benefited from physical AI breakthroughs in models and hardware. Waabi plans to field fully driverless trucks by the end of this year using its next-generation foundation models. Companies such as Aurora Innovation Inc. and Torc Robotics Inc. have also recently launched commercial pilots of driverless trucking services on public roads in the U.S., including fixed routes in Texas. These pilots haul freight for partners such as FedEx and Uber Freight.

The autonomous vehicle market reflects this acceleration, with the sector projected to grow from $68.09 billion in 2024 to $214.32 billion by 2030, according to market analyst Grand View Research Inc.
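Grand View Research’s projections are easier to compare as compound annual growth rates, CAGR = (end / start)^(1 / years) − 1. The sketch below applies that standard formula to the two figures quoted in this article: AI in robotics from $12.8 billion in 2023 to $124.8 billion in 2030, and autonomous vehicles from $68.09 billion in 2024 to $214.32 billion in 2030. The rates it derives are back-of-the-envelope calculations, not numbers published in the reports themselves.

```python
"""Implied compound annual growth rates for the market projections cited above."""


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# (start, end, years) in billions of U.S. dollars, as quoted in the article
projections = {
    "AI in robotics, 2023-2030": (12.8, 124.8, 7),
    "Autonomous vehicles, 2024-2030": (68.09, 214.32, 6),
}

if __name__ == "__main__":
    for name, (start, end, years) in projections.items():
        print(f"{name}: {cagr(start, end, years):.1%} per year")
```

The quoted figures imply roughly 38% annual growth for AI in robotics and roughly 21% for autonomous vehicles. The robotics market’s projected tenfold expansion is therefore the steeper curve, even though the vehicle market is larger in absolute terms.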

Nvidia’s Drive Thor platform, arriving in production vehicles in 2025, has secured adoption from major manufacturers. These include Mercedes-Benz AG, Jaguar Land Rover Automotive PLC, Volvo Car AB and the Chinese EV makers Li Auto Inc., Geely Holding’s Zeekr and Xiaomi Corp. The platform consolidates multiple vehicle functions, from automated driving to AI cockpit capabilities, into a single system-on-chip. That has attracted both established automakers and robotaxi players such as Uber Technologies Inc.

The trucking segment particularly demonstrates commercial viability. McKinsey & Co. projects that autonomous trucks could create a $600 billion market by 2035 through operational cost benefits. PricewaterhouseCoopers and the Manufacturing Institute estimate that manufacturers could save nearly 30% of their total transportation costs through 2040 if autonomous long-haul trucking is aggressively adopted.

“If you look at a human driver today, although they can drive 11 hours on average, they only drive six and a half hours… suddenly [with self-driving] you can utilize your assets 22, 24 hours,” Urtasun said about the economics.
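Urtasun’s figures can be turned into a quick utilization estimate. The sketch makes two simplifying assumptions: the 22 to 24 hours she cites are hours the truck is actually driving, and loading, refueling and maintenance downtime are ignored. The function names are illustrative.

```python
"""Back-of-the-envelope truck utilization from the hours cited by Urtasun."""

HOURS_PER_DAY = 24.0
HUMAN_DRIVING_HOURS = 6.5  # average daily driving time for a human driver, per Urtasun


def utilization(driving_hours: float) -> float:
    """Fraction of the day the truck spends driving."""
    return driving_hours / HOURS_PER_DAY


def asset_multiplier(autonomous_hours: float, human_hours: float = HUMAN_DRIVING_HOURS) -> float:
    """How many times more driving hours the same truck delivers per day."""
    return autonomous_hours / human_hours


if __name__ == "__main__":
    print(f"Human baseline: {utilization(HUMAN_DRIVING_HOURS):.0%} utilization")
    for hours in (22.0, 24.0):
        print(f"{hours:.0f} h/day: {utilization(hours):.0%} utilization, "
              f"{asset_multiplier(hours):.1f}x a human-driven truck")
```

On these assumptions, a human-driven truck is moving about 27% of the day. A driverless one running 22 to 24 hours would deliver roughly 3.4 to 3.7 times as many driving hours from the same asset, which is the economic argument behind Urtasun’s remark.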

Image: Amazon

The coming human-robot handoff

Nvidia CEO Jensen Huang has declared that physical AI is ushering in “a new era of AI,” a bold proclamation now backed by concrete technological breakthroughs. Nvidia’s vision extends beyond isolated automation to a fundamental transformation of how machines operate in the physical world.

“Advances in large language and generative models point toward a new era of AI — systems that can reason about the physical world and operate seamlessly in factories, homes and cities,” added Nvidia’s Spencer Huang. “With a ‘robot brain’ that can be retrained in simulation using real or synthetic data, robots could quickly learn new skills and be repurposed for a wide range of applications.”

This adaptability represents a seismic shift from the narrow, preprogrammed systems that have dominated robotics for decades.

“By 2027, the narrative won’t be about human replacement, but about augmented operations, where AI-powered physical systems work as tools that enhance, rather than replace human expertise,” said Mat Gilbert, director and head of AI and data at Synapse, part of Capgemini Invent.

Gilbert added that human labor would shift from repetitive physical tasks to working alongside and supervising fleets of intelligent systems. Fundamental to this change will be the ability of AI-driven robotic systems to handle variable, unstructured environments, which until recently were the sole domain of human workers.

“The key business differentiator will be who has the skillset to deploy and adapt intelligence, and the businesses that thrive will be those who’ve learned how to quickly teach, manage and trust the robotic components of their workforce,” said Gilbert.

The question of labor displacement casts a shadow over physical AI’s commercial evolution, though early evidence suggests a more nuanced reality than apocalyptic headlines imply. The World Economic Forum’s Future of Jobs Report 2025 projects that AI and automation will create 170 million new roles globally by 2030 while displacing 92 million, a net gain of 78 million jobs. Goldman Sachs estimates 6% to 7% of the U.S. workforce faces displacement risk with AI adoption, though the impact appears “transitory” as new opportunities emerge. A meta-analysis of 52 studies on industrial robots found no consistent evidence of widespread wage depression.

For enterprise leaders, Gilbert framed the shift as one of augmented operations rather than human replacement. China’s $20 billion humanoid investment underscores the stakes. The country’s 123 million manufacturing workers face genuine uncertainty, even as officials promise collaboration over replacement.

History shows these concerns are not without merit. The lesson from previous automation waves — from the loom to factory floors — suggests that technological displacement tends to move slower than technologists predict, but faster than workers can retrain without institutional support.

The path to this transformation is already mapped. Spencer Huang observed that while “most robots remain narrowly preprogrammed,” a new wave of companies is already deploying adaptable systems. Some focus on building generalizable “robot brains” — Nvidia, Field AI and Skild AI — while others develop the physical platforms themselves — Figure, Agility and Universal Robots A/S.

Gilbert said that over the next two to three years, the most immediate value will come from application builders and companies accelerating development in high-value use cases such as warehouse logistics. Meanwhile, hardware and platform providers will close the gap between potential and production. Over the longer term, more than five years out, he expects value to shift to platform providers and to the makers of dominant foundation models for robotic actions, simulation and training.

“Success in physical AI will be determined by four critical factors: ease-of-use, reliability, versatility and performance,” noted Davidson.

Images: SiliconANGLE/Microsoft Designer, Agility Robotics, Nvidia, Amazon

