About a month ago, Google made it clear that AI is the company’s main priority now that ChatGPT is so popular. Google announced plenty of AI features during its I/O 2023 event, opening Google Bard to almost the entire world and introducing SGE (Search Generative Experience), a new generative AI experience for Google Search.

But Google isn’t done with AI novelties. On Wednesday, the company announced brand-new AI features for several apps. Following updates to Google Maps, Google updated Lens, Bard, and SGE with new features that users can try right away.

Google Lens can check moles or rashes on your skin

Google Lens just got a new photo-based search feature that will come in handy, especially during the summer. You’ll be able to take a photo of your skin and see skin conditions that Google’s AI considers visually similar.

This shouldn’t replace a visit to the doctor, but it’s another example of what smarter AI can offer. Here’s how Google describes it:

Describing an odd mole or rash on your skin can be hard to do with words alone. Fortunately, there’s a new way Lens can help, with the ability to search skin conditions that are visually similar to what you see on your skin. Just take a picture or upload a photo through Lens, and you’ll find visual matches to inform your search. This feature also works if you’re not sure how to describe something else on your body, like a bump on your lip, a line on your nails, or hair loss on your head.

Searching for skin conditions with Google Lens. Image source: Google


In its announcement, Google also highlighted other Lens features that you can try right now on Android and iPhone:

- information about things around you (landmarks, plants, animals, and others)

- instant translation of street signs, menus, and any other text

- step-by-step help with homework problems

- shopping for products that catch your eye or discovering different versions of those products

- discovering restaurants around you with the help of dish photos

You can also combine Bard, Google’s generative AI product, with Lens to learn more about the items you’re searching for in a conversational way.

Google’s SGE is the company’s second generative AI product dedicated to online search. It’s an experimental version of Google Search that is available only in the US, to users who opt in to try it.

Google has now added new shopping features to SGE. In its blog announcement, the company offers a simple shopping example that sounds very familiar to me:

Maybe you’re looking for a Bluetooth speaker to take on a beach vacation that’s effective, yet portable enough to pack in a carry-on suitcase.

Shopping online with Google Search SGE. Image source: Google


I recently used ChatGPT to shop for new running shoes, writing prompts with multiple conditions just like the one above. And I did it with a generative AI that isn’t connected to the internet. SGE should do even better, since it displays current results. According to Google, SGE will draw information from more than 35 billion product listings.

Google says SGE will show you product descriptions with reviews and ratings, up-to-date prices, and images. That’s exactly the kind of information I had asked ChatGPT for in my prompts.

Another new SGE feature lets you use “Add to Sheets” to save items you’re considering buying, and you can then share those sheets with others. That should come in handy for Black Friday and Christmas shopping sessions involving multiple family members.

Access to Google Search SGE might be limited, but shopping appears to be a major focus for Google right now, and I can understand why. After my running shoes experiment, I said I’d never again use regular Google Search to shop. The generative AI experience is just too good to ignore.

Also on Wednesday, Google announced new AI features for shopping, including a way to try on clothes virtually.

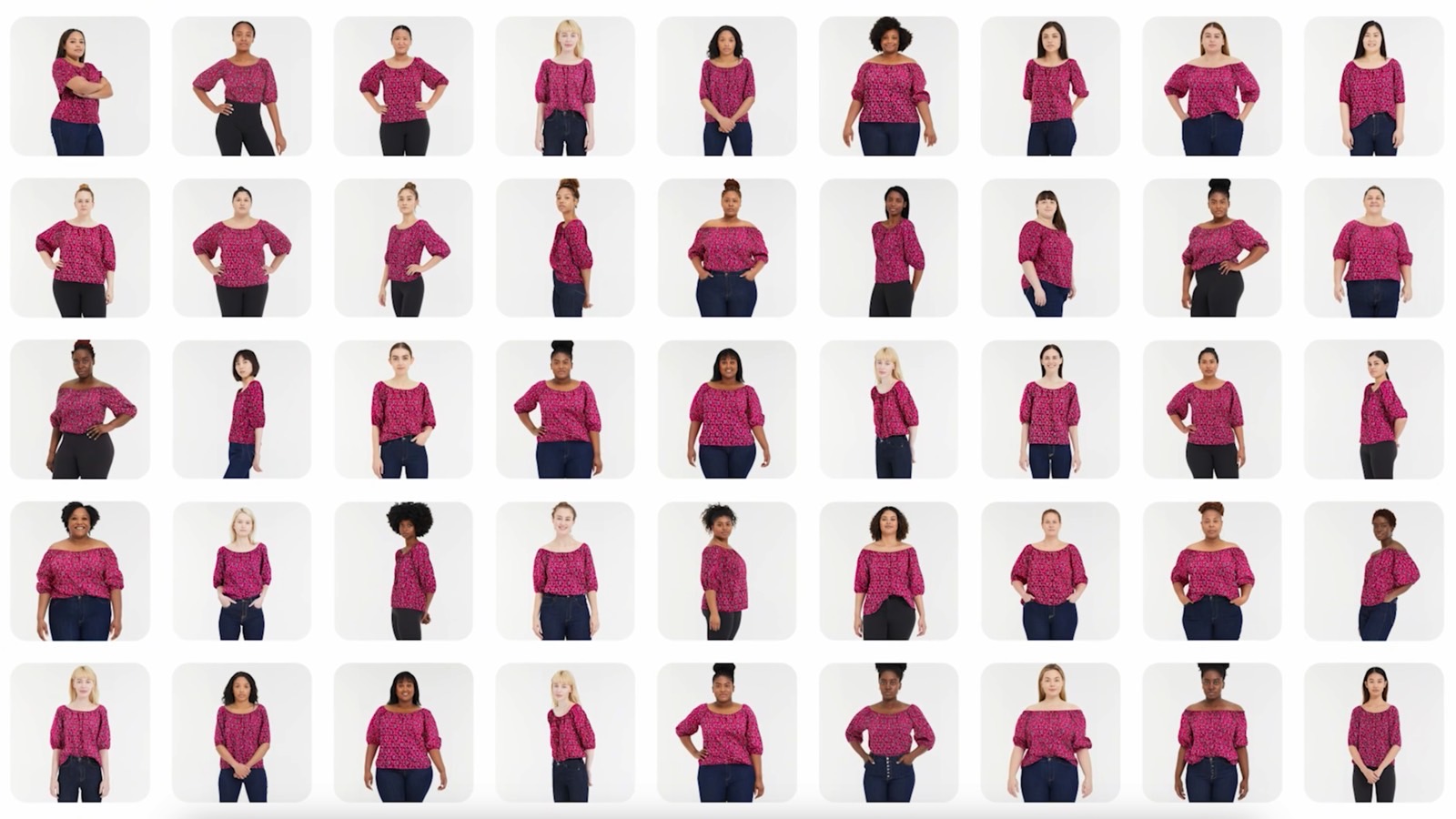

Google’s Virtual Try On lets you see the same clothes on different models. Image source: Google


The Virtual Try On feature will initially be available in the US, with a limited set of brands for now: Anthropologie, Everlane, H&M, and Loft.

The feature will let you see what a piece of clothing would look like on a diverse set of models (image above). Here’s how Google describes it:

Our new generative AI model can take just one clothing image and accurately reflect how it would drape, fold, cling, stretch, and form wrinkles and shadows on a diverse set of real models in various poses. We selected people ranging in sizes XXS-4XL representing different skin tones (using the Monk Skin Tone Scale as a guide), body shapes, ethnicities, and hair types.

Google also introduced a new “guided refinements” feature for shopping. The AI will act like a store associate when you’re buying things online, responding to requests such as finding a more affordable option or a similar product. You’ll be able to ask for different colors, styles, and patterns, and Google’s AI should surface products from various retailers.

Trying Anthropologie clothes virtually with Google AI. Image source: Google


All you need to make the generative AI shopping experience even better is a device that lets you try on clothes at home in augmented reality. If only there were such a device that one could buy soon…