Writers don’t usually worry about AI detection when they start drafting. The doubt appears later, during review, revision, or approval. Someone rereads a paragraph and hesitates. The tone feels smooth but oddly distant. Dechecker enters at that moment, when judgment replaces speed and content needs to stand up to real scrutiny rather than theoretical rules.

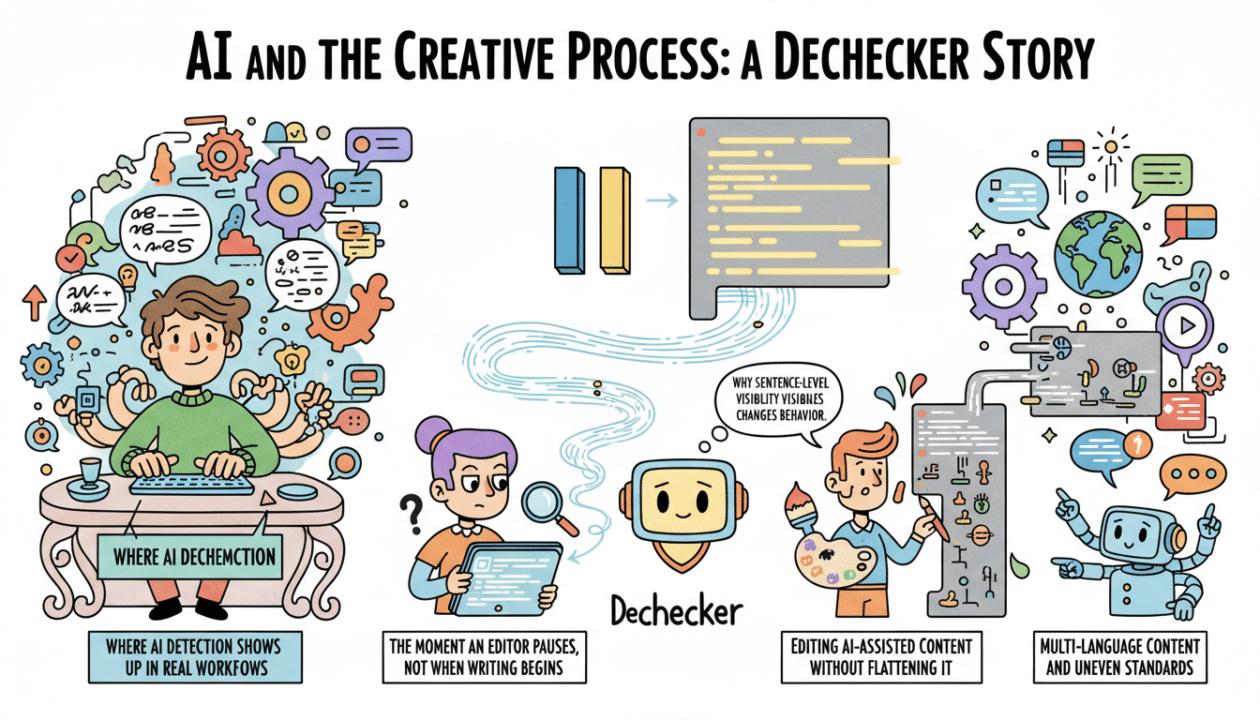

Where AI Detection Shows Up in Real Workflows

The moment an editor pauses, not when writing begins

In practice, AI usage rarely causes problems at the drafting stage. Trouble surfaces when an editor senses something off but can’t articulate why. Running the text through an AI Checker often confirms that instinct. Certain sentences stand out as overly balanced, explanatory without commitment, or detached from lived context. Detection becomes a way to name discomfort rather than create it.

Why sentence-level visibility changes behavior

Editors don’t want another score to interpret. They want to know what to touch. Dechecker’s sentence-level detection aligns with how humans already edit. Instead of debating an entire document, attention narrows to specific lines. Decisions become practical. Rewrite this. Keep that. Remove the rest. The AI Checker fits into existing habits rather than forcing a new mindset.

Editing AI-Assisted Content Without Flattening It

When polishing goes too far

Many drafts lose their edge during revision, not creation. AI-assisted rewrites often smooth transitions, equalize tone, and eliminate hesitation. The result reads clean but interchangeable. Dechecker highlights exactly where this flattening happens. Editors see which sentences stopped sounding owned and started sounding produced.

Humanization as a judgment call

The suggestions Dechecker provides don’t replace editorial thinking. They prompt it. When a sentence is flagged, the question isn’t how to trick detection but whether the phrasing reflects an actual stance. Editors often add context, constraints, or rationale rather than synonyms. The AI Checker becomes a mirror for intention, not a rewriting engine.

Multi-Language Content and Uneven Standards

Global teams, shared expectations

Content teams working across languages often find that detection quality varies from one language to the next. Dechecker’s multi-language support lets editors apply consistent standards without forcing English-centric patterns onto other languages. This matters when academic papers, reports, or blogs circulate internationally under the same credibility expectations.

Translation artifacts versus AI patterns

Translated text can trigger false suspicion because of rigid structure or formal phrasing. Dechecker helps separate those artifacts from genuine AI-generated patterns. Editors learn when to ignore flags and when to intervene. Over time, this reduces unnecessary rewrites and builds confidence in cross-language publishing.

Reports That Support Decisions Beyond Writing

Sharing clarity instead of defending guesses

When content is questioned by a manager, client, or reviewer, vague reassurances rarely help. Dechecker’s reports show what was flagged, why it mattered, and how it was addressed. This shifts conversations from opinion to evidence. The AI Checker becomes part of documentation, not just editing.

Trust through transparency

Teams that share detection reports internally tend to argue less. Writers understand feedback. Reviewers explain changes clearly. The process feels accountable rather than punitive. AI usage stops being a sensitive topic and becomes an operational detail.

Hybrid Inputs and Modern Draft Origins

Spoken ideas turned into written assets

Many drafts start as conversations. Meetings are recorded, interviews captured, and ideas spoken freely before any structure exists. When that material is converted into text using an audio-to-text converter, the first draft carries natural rhythm but uneven clarity. Editors often rely on AI to organize and refine it. Dechecker helps ensure that refinement doesn’t erase the original voice entirely.

Managing layered authorship

Modern documents often pass through multiple hands and tools. A human outlines. AI expands. A human edits. Another AI polishes. The final author still signs their name. Dechecker helps teams track where machine patterns dominate and where human judgment remains visible. The AI Checker doesn’t assign blame. It shows where the balance has shifted so editors can restore it.

How Different Roles Use an AI Checker Differently

Writers use it to learn patterns

Writers quickly recognize habits that trigger detection. Overly neutral framing, excessive qualifiers, or generic transitions appear repeatedly. Seeing these patterns sentence by sentence shortens the learning curve. Writers adjust upstream rather than fixing everything at the end.

Editors use it to prioritize effort

Editors manage time, not theory. Dechecker shows where edits matter most. Instead of reworking entire sections, editors focus on high-impact sentences. The AI Checker helps allocate attention, which is often the scarcest resource.

Managers use it to reduce risk

Managers rarely read every word closely. They need assurance that content won’t create problems later. Detection reports and targeted edits provide that assurance. The AI Checker becomes a safety layer without slowing output.

What AI Detection Cannot Do for You

It won’t invent originality

If a piece lacks a clear angle or insight, no amount of detection will fix it. Dechecker assumes there is something worth protecting. The AI Checker refines expression, not substance. Teams that understand this avoid misusing detection as a shortcut for strategy.

It won’t eliminate false positives

Every experienced user encounters sentences flagged unexpectedly. Teams that benefit most treat these moments as prompts to reconsider phrasing rather than proof of failure. Over time, judgment improves and reliance decreases.

Where Dechecker Fits Long-Term Content Quality

From reactive checks to habitual clarity

Teams that integrate Dechecker early stop treating AI detection as a final hurdle. It becomes part of drafting culture. Writers think more deliberately. Editors intervene less. The AI Checker fades into the background as habits improve.

Preserving credibility in an AI-heavy world

As AI-generated content becomes common, credibility depends less on whether AI was used and more on whether human judgment is visible. Dechecker helps make that judgment visible. It doesn’t promise perfect detection; it supports responsible authorship.

AI writing isn’t a shortcut or a threat by itself. The risk lies in losing track of where human intention ends and automation begins. Dechecker meets writers and editors at that boundary. The AI Checker doesn’t replace decision-making. It sharpens it, sentence by sentence, in the moments where hesitation usually means something important.


This content is brought to you by the FingerLakes1.com Team. Support our mission by visiting www.patreon.com/fl1 or learn how you can send us your local content here.