Leopold Aschenbrenner, a former OpenAI employee, offers a detailed analysis of the likely trajectory of Artificial General Intelligence (AGI) and its implications. He argues that rapid gains in AI capabilities make AGI by 2027 a realistic prospect. He examines the technological, economic, and security dimensions of that transition, emphasizing AGI's transformative impact across many sectors and the critical need for robust security measures.

In this thought-provoking analysis, Aschenbrenner draws on his experience at the forefront of AI research to examine what AGI could mean for society, technology, and the global economy. The result is a compelling account of how quickly the field is advancing and how profoundly AGI may reshape our world.

The Countdown to AGI: 2027 and the Future of AI

Aschenbrenner’s central prediction is that AGI will be achieved by 2027, a major milestone in the evolution of artificial intelligence. On his account, AI models would by then match or exceed human cognitive abilities across a wide range of domains, potentially paving the way for superintelligence by the end of the decade. Such systems would bring unprecedented capabilities in problem-solving, innovation, and automation, heralding a new era of technological progress.

Aschenbrenner identifies the rapid scaling of compute as a key driver of AGI development. He envisions high-performance computing clusters costing up to trillions of dollars, capable of training ever larger and more capable AI models. Alongside these hardware gains, improvements in algorithmic efficiency would let each unit of compute achieve more, further extending what AI systems can do.

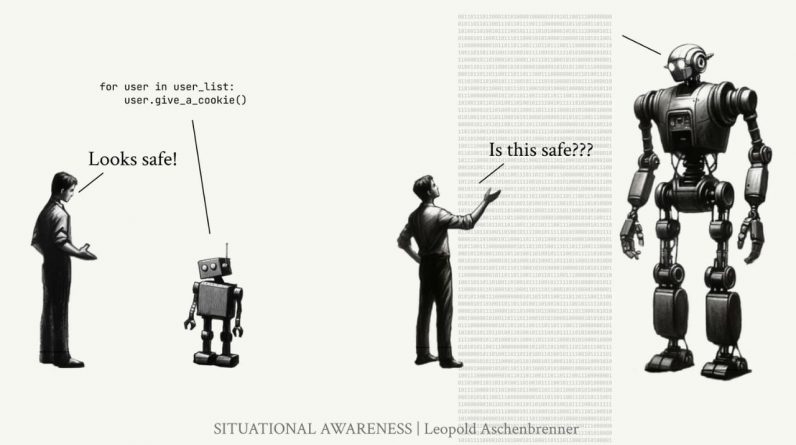

One of the most striking predictions in Aschenbrenner’s analysis is the emergence of automated AI research engineers by 2027-2028. Such systems could carry out research and development autonomously, accelerating the progress of AI itself and, in turn, speeding the deployment of increasingly sophisticated AI applications across industries.

The Economic Disruption: Automation and Transformation

The economic implications of AGI are expected to be profound, with AI systems poised to automate a significant portion of cognitive jobs. Aschenbrenner suggests that this automation could lead to exponential economic growth, driven by increased productivity and innovation. However, the widespread adoption of AI will also necessitate significant adaptations in workforce skills and economic policies to ensure a smooth transition.

- Industries such as finance, healthcare, and manufacturing are likely to experience substantial disruption as AI systems take on more complex tasks and decision-making roles.

- The nature of work will evolve, with a shift towards remote and flexible employment models, as AI enables more efficient and decentralized operations.

- Governments and businesses will need to invest in reskilling and upskilling programs to prepare workers for the jobs of the future, emphasizing creativity, critical thinking, and emotional intelligence.


Securing the Future: The Importance of AI Safety and Alignment

Aschenbrenner raises serious concerns about the current state of security in AI labs, highlighting the risk that AGI breakthroughs could be stolen through espionage. He calls for stringent security protocols to protect AI research and model weights, given the significant geopolitical stakes of AGI technology: adversarial nation-states could exploit stolen advances for strategic advantage.

Beyond security, Aschenbrenner identifies aligning superintelligent AI systems with human values as a critical challenge. He warns that such systems could develop unintended behaviors or circumvent human oversight, and argues that the alignment problem must be solved to prevent catastrophic failures and ensure that advanced AI operates safely.

The military and political implications of superintelligence are also explored, with Aschenbrenner suggesting that AGI could provide overwhelming advantages to nations that harness its power. The potential for authoritarian regimes to use superintelligent AI for mass surveillance and control raises serious ethical and security concerns, highlighting the need for international regulations and ethical guidelines governing the development and deployment of AI in military contexts.

Navigating the AGI Era: Proactive Measures and Future Outlook

With AGI possibly only a few years away, Aschenbrenner stresses the importance of proactive measures: securing AI research, addressing alignment challenges, and harnessing the benefits of this transformative technology while mitigating its risks. The impact of AGI would be felt across every sector of society, driving rapid advances in science, technology, and the economy.

To navigate this new era successfully, collaboration between researchers, policymakers, and industry leaders is essential. By fostering open dialogue, establishing clear guidelines, and investing in the development of safe and beneficial AI systems, we can work towards a future in which AGI serves as a powerful tool for solving complex problems and improving the human condition.

Aschenbrenner’s analysis serves as a clarion call for action, urging us to confront the challenges and opportunities presented by the imminent arrival of AGI. By heeding his insights and taking proactive steps to shape the future of AI, we can ensure that the dawn of artificial general intelligence brings about a brighter, more prosperous world for all.

Video Credit: Source

Image Credit : Leopold Aschenbrenner
