One of the phenomena triggered by the AI boom in recent years is deepfakes. The term is made up of the words deep learning and fake.

Deep learning refers to machine learning methods, while a fake is a forgery, an imitation or a hoax. A deepfake is therefore a forgery created with the help of AI processes.

These can be computer-generated fake photos of well-known people, images or videos in which faces have been swapped for others, or even voice recordings or messages in which a familiar voice says things its owner never actually said.

Such forgeries of images, videos, and sound recordings have always existed. However, thanks to AI technology, these fakes are now near perfect and difficult to distinguish from authentic recordings.

What’s more, the required software is available to everyone and is often even offered as a web service, meaning anyone can create deepfakes nowadays.

High potential for misuse

AI applications for creating deepfakes are often advertised as software that can be used to play tricks on other people.

But in fact, such programs are often misused by criminals, who exploit the possibilities of AI for scams. For example:

- They use AI to perfect the grandchild trick. To do this, they call their victims using the AI-generated voices of relatives, describe an emergency such as an accident and ask for immediate financial support.

- In a video, they put words into the mouth of a politician who never said them, in an attempt to influence public opinion.

- They have a celebrity advertise a product without the celebrity's knowledge or consent. Customers who then order this product are either ripped off with an inflated price or never receive the goods.

Recognizing deepfake images

When the deepfake scams began, consumers could only protect themselves from being ripped off by criminals by looking or listening carefully. This is because the AI often works inaccurately and many details are misrepresented or unrealistic.

However, other AI applications soon appeared on the web that examined images and videos precisely for these errors and also included color patterns and textures in their analyses. A whole range of such programs are now available. Many of them are free of charge.

The user uploads an image or video to a website, and the AI analyzes it and tells the user whether it’s a deepfake or not.

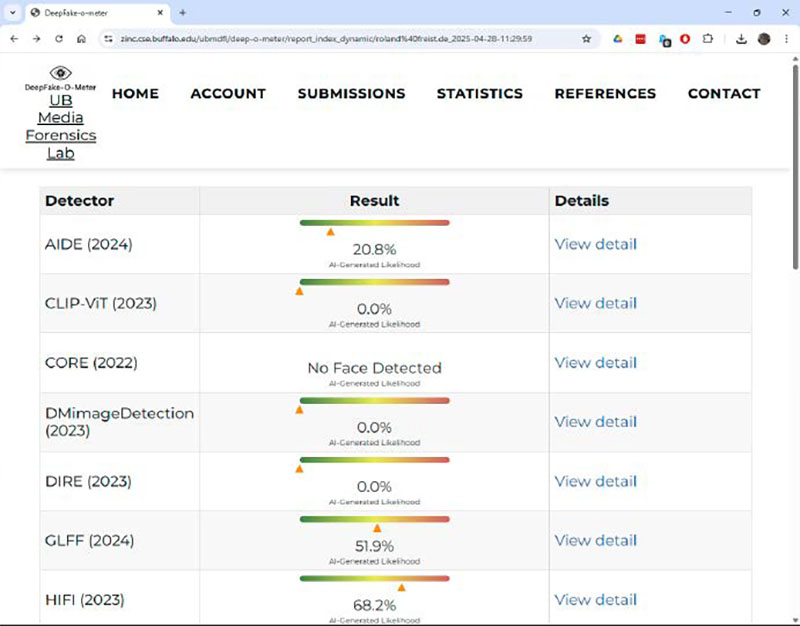

The University at Buffalo’s Deepfake-o-Meter recognizes deepfakes in images, videos and audio files using 16 programs from the open source scene. In the test, however, the performance of the tools was not convincing.

IDG

Probably the most comprehensive deepfake detection tool on the web comes from the University at Buffalo in the US state of New York. The Deepfake-o-Meter project, developed there by a team at the Media Forensic Laboratory, brings together 16 AI recognition programs from the open source scene and feeds them with images, videos, and audio files uploaded by users.

After a few seconds, the tools present their results and state the probability that an uploaded medium is an AI-generated deepfake.

To gain access to the Deepfake-o-Meter, all you need to do is register for free with your e-mail address. This gives the user 30 credits for using the service; a single query costs one credit.

For a small test, we first uploaded what is probably the most famous deepfake image in the world, the photo of the late Pope Francis in a white down jacket created with Midjourney.

However, only two services from the Deepfake-o-Meter repertoire gave a probability of more than 50 percent that the photo was fake.

None of the recognition programs used by Deepfake-o-Meter reliably identified the image of Pope Francis in a white down coat as a deepfake. Other AI images were also not reliably recognized.

IDG

In a second test, we had the Canva.com portrait generator create an image of a woman. This time, seven of the sixteen AI tools recognized the deepfake.

This image of a woman generated with Canva.com was also only recognized as AI-generated by some of the detectors of the Deepfake-o-Meter.

IDG
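The way we read the results above, counting how many of the detectors put the fake probability above 50 percent, can be sketched in a few lines of Python. This is only an illustration: the function and class names are our own, the scores are invented placeholders rather than the values from our test, and it is not how the Deepfake-o-Meter itself combines its tools.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EnsembleVerdict:
    flagged: int        # detectors whose score exceeds the threshold
    total: int          # detectors that returned a score
    mean_score: float   # average fake probability across all detectors

    @property
    def majority_says_fake(self) -> bool:
        # True only if more than half of the detectors flagged the upload.
        return self.flagged * 2 > self.total


def summarize_scores(scores: dict[str, float], threshold: float = 0.5) -> EnsembleVerdict:
    """Count how many detectors rate the upload above `threshold`."""
    if not scores:
        raise ValueError("no detector scores supplied")
    flagged = sum(1 for s in scores.values() if s > threshold)
    mean = sum(scores.values()) / len(scores)
    return EnsembleVerdict(flagged, len(scores), mean)


# Hypothetical scores (assumption): 14 detectors unconvinced, 2 above 50 percent.
example = {f"detector_{i:02d}": 0.2 for i in range(1, 15)}
example["detector_15"] = 0.7
example["detector_16"] = 0.9

verdict = summarize_scores(example)
print(f"{verdict.flagged} of {verdict.total} detectors above 50 percent")
print("majority says fake:", verdict.majority_says_fake)
```

A simple majority count like this treats every detector as equally trustworthy, which is a simplification. It does show why two or even seven hits out of sixteen are not a reliable verdict.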

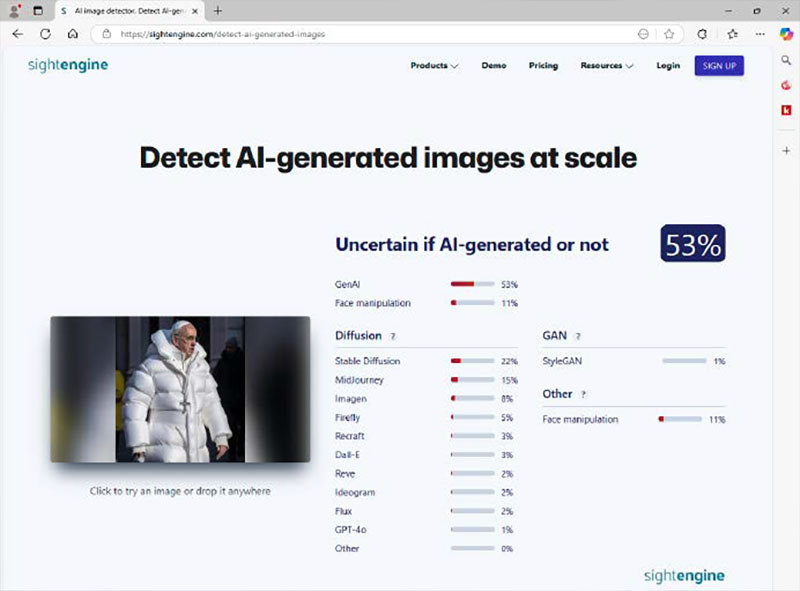

The AI recognition of the French company Sightengine works much faster than the Deepfake-o-Meter tools. In the test, it recognized the photo almost immediately after it was uploaded.

For the portrait of a woman created with Canva.com, it gave a probability of 99 percent that it was an AI-generated image. However, this program also produced an inconclusive result for the picture of the Pope: according to Sightengine, the probability of a deepfake was 53 percent.

In the case of the image of the Pope in a white down jacket, Sightengine’s AI is not sure whether it is a deepfake or not.

IDG

Recognize deepfake images by these details

Deepfake detectors such as Sightengine are important tools for identifying fake photos. In many cases, however, it’s also possible to recognize with the naked eye that an image doesn’t reflect reality—the devil is often in the details.

One of the biggest problems for AI is the representation of human fingers. The programs are shown millions of photos during training, many of which show hands and fingers.

However, the hands are often incomplete. In a photo of a handshake, for example, only three fingers are visible in most cases. In other pictures, some fingers are in a pocket or are completely or partially covered by objects.

This deepfake of Donald Trump was shared by a fan on Facebook during the election campaign. The depiction of the fingers is incorrect – a common problem. And the writing on the cap is illegible.

IDG

As the AI does not know how many fingers a person has, it deduces from these photos that the number and length can vary. Accordingly, it provides some hands with more or fewer fingers or gives them fingers of different lengths and sizes.

- The AI programs also struggle with arms and legs. The limbs are often not in the right place or cannot be assigned to a person.

- Hair often looks artificial. The strands fall at the wrong angle or cannot be assigned to a person.

- The programs also often have difficulties with clothing details. Shirts and coats have different buttons, necklaces do not form a closed ring or spectacle frames are deformed.

- Fonts appear as spidery, illegible characters.

- There are often incorrect shadows in the background, or there are inconsistencies in the proportions.

In the image of the Pope, the shadow does not match the frame of the spectacles, and the crucifix is hanging on a chain only on one side.

Recognizing deepfake videos with AI software

The creation of deepfake videos took a huge leap forward last year with the introduction of the Sora video generator from OpenAI. The films look so real that they can hardly be distinguished from real videos. You can find large quantities of amazingly realistic-looking films on YouTube made with this new technology.

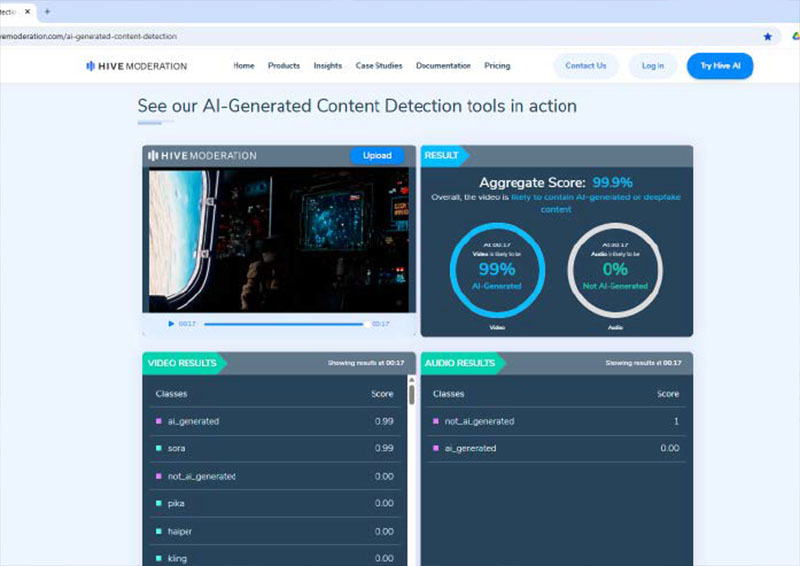

Free AI video detectors include Deepware.ai and the AI detector from Hive. Both are designed as web applications. Deepware.ai is completely free, while the basic version of Hive only accepts videos up to 20 seconds in length.

We uploaded some Sora videos to both websites to see how they performed. The result with Deepware was disappointing: the program did not recognize the deepfakes in any of our examples.

The Hive detector’s results, on the other hand, were quite different: it indicated a deepfake probability of 99 percent for all Sora films.

The Hive detector was able to clearly classify the films created with the Sora video generator from ChatGPT manufacturer OpenAI as AI-generated.

IDG

Recognizing AI-generated texts

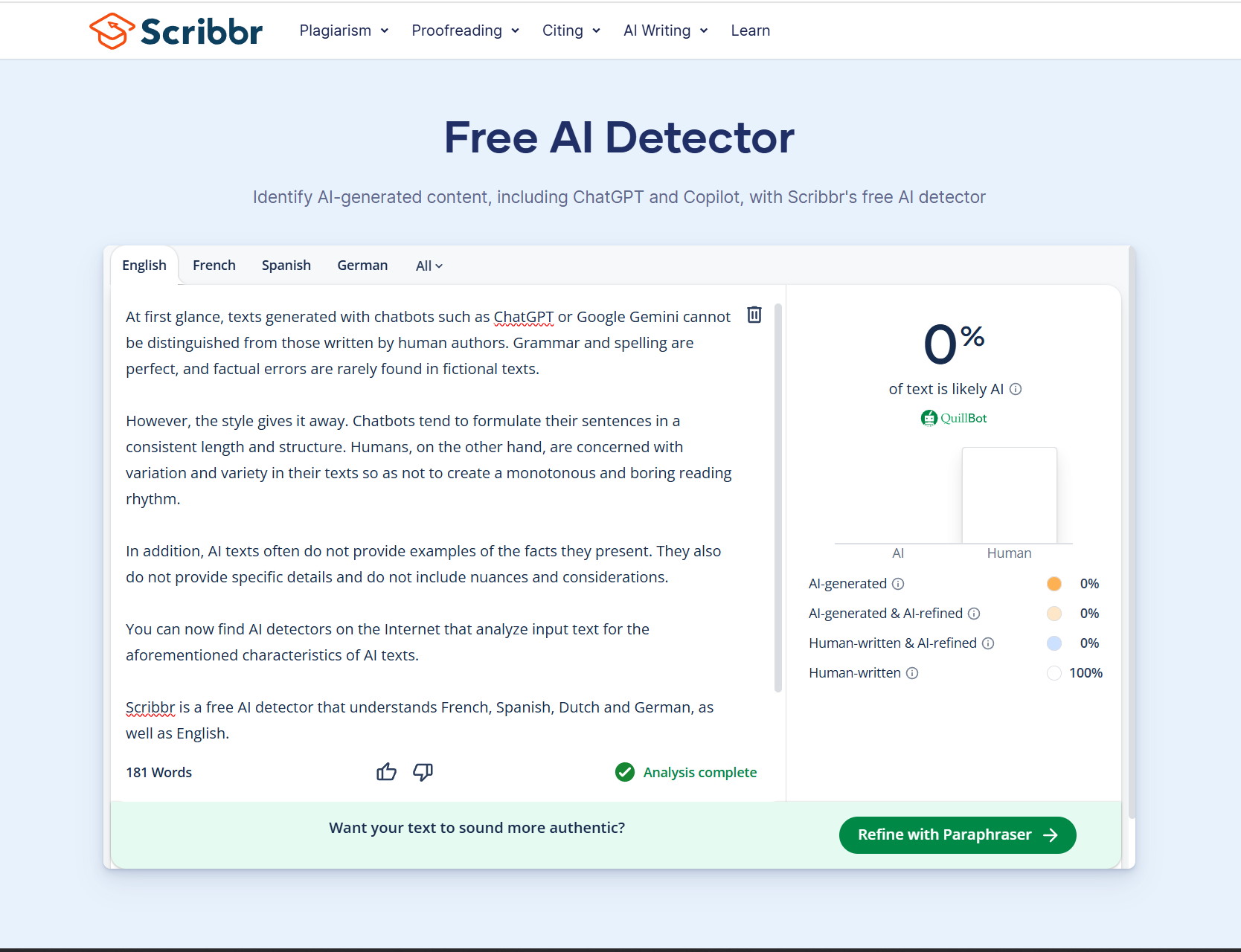

At first glance, texts generated with chatbots such as ChatGPT or Google Gemini cannot be distinguished from those written by human authors. Grammar and spelling are perfect, and factual errors are rarely found in fictional texts.

However, the style gives it away. Chatbots tend to formulate their sentences in a consistent length and structure. Humans, on the other hand, are concerned with variation and variety in their texts so as not to create a monotonous and boring reading rhythm.

In addition, AI texts often do not provide examples of the facts they present. They also do not provide specific details and do not include nuances and considerations.

You can now find AI detectors on the Internet that analyze input text for the aforementioned characteristics of AI texts.
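One of the characteristics mentioned above, sentences of very uniform length, can be roughly measured without any AI at all. The following Python sketch is our own illustration and not the method used by any of the detectors named in this article. It assumes a naive sentence split on periods, exclamation marks and question marks, and it uses the coefficient of variation (standard deviation divided by mean) as a stand-in for "variation and variety".

```python
from __future__ import annotations

import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        raise ValueError("need at least two sentences")
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Two made-up samples: one monotonous, one with strongly varying rhythm.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The cat sat down on the warm windowsill and watched the rain. Why?"

print("uniform:", round(length_variation(uniform), 2))
print("varied: ", round(length_variation(varied), 2))
```

A value close to zero means every sentence has almost the same length. On its own this proves nothing, since careful human writers can be just as regular, but it illustrates the kind of signal such tools look for.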

Scribbr is a free AI detector that understands French, Spanish, Dutch and German, as well as English.

Isgen.ai can handle several dozen more languages, but is only free in the basic version. With the freemium offer, you can have up to 12,000 words per month analyzed in a maximum of 50 queries after registering.

The AI detector Scribbr can analyze English texts on request to see whether they were formulated by an artificial intelligence. However, the program’s results are not error-free.

Sam Singleton

Recognize deepfake videos by all of the fine details

Deepfake videos often have the same errors as AI-generated photos: texts are illegible, details are illogical or impossible in reality. For example, the shadows are often incorrect and hair does not appear to have a fixed connection to a head.

The design of the background does not match the rest of the film either. Finally, it’s noticeable in many cases that the people in the film are shown with a higher resolution than their surroundings.

There are also some typical details that only occur in moving images. For example, the people in the videos often move unnaturally slowly and appear to be in a kind of trance.

In addition, their faces often show no facial expressions and they do not blink. To recognize this, however, you sometimes have to reduce the playback speed of the film.
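The blink check can also be expressed as a small calculation. The Python sketch below is purely illustrative: it assumes that some face-tracking tool (not named here, and not part of this article's test) has already produced one "eye openness" value per video frame, and the threshold of 0.2 for a closed eye is an arbitrary assumption.

```python
from __future__ import annotations


def count_blinks(openness: list[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    eye_closed = False
    for value in openness:
        if value < closed_below and not eye_closed:
            blinks += 1          # eye has just closed: one new blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False   # eye is open again
    return blinks


def blinks_per_minute(openness: list[float], fps: float, closed_below: float = 0.2) -> float:
    """Convert the blink count into a rate, given the video's frame rate."""
    if fps <= 0 or not openness:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(openness) / fps / 60
    return count_blinks(openness, closed_below) / minutes


# Hypothetical 10-second clip at 30 frames per second with two short blinks.
frames = [0.3] * 300
frames[50:54] = [0.1] * 4
frames[200:203] = [0.1] * 3

print("blinks:", count_blinks(frames))
print("per minute:", round(blinks_per_minute(frames, fps=30), 1))
```

A clip in which this count stays at zero over a longer stretch would be one more reason to look closely, in the same way as watching the video at reduced speed.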

With software such as Real Time Voice Cloning, it’s now possible to create a deepfake voice from a recording that is only a few seconds long. This can read out any text in the voice of another person.

However, the technology is not yet perfect. In various studies, the test subjects were able to distinguish the artificial voice from the real voice in two thirds of all cases. Even so, the quality is already good enough that criminals have been able to successfully scam people with emergency calls using fake voices.

For now, products that promise to unmask fake voices are mainly from English-speaking countries. The security company McAfee, for example, has introduced the Deepfake Detector, which detects artificially generated voices in videos and audio files. It’s available on all PCs with Intel Core Ultra 200V processors.

Providers such as Resemble.ai and AI Voice Detector have already developed applications for business customers.

The Hiya AI Voice Detector is another option that is currently free of charge. It’s designed as a Chrome extension and analyzes voice recordings on websites. It actually worked surprisingly well in the test.

SMS analysis with Bitdefender Scamio

Criminal gangs are increasingly using emergency appeals and offers sent via SMS to lure users to websites where they are prompted to enter their personal data.

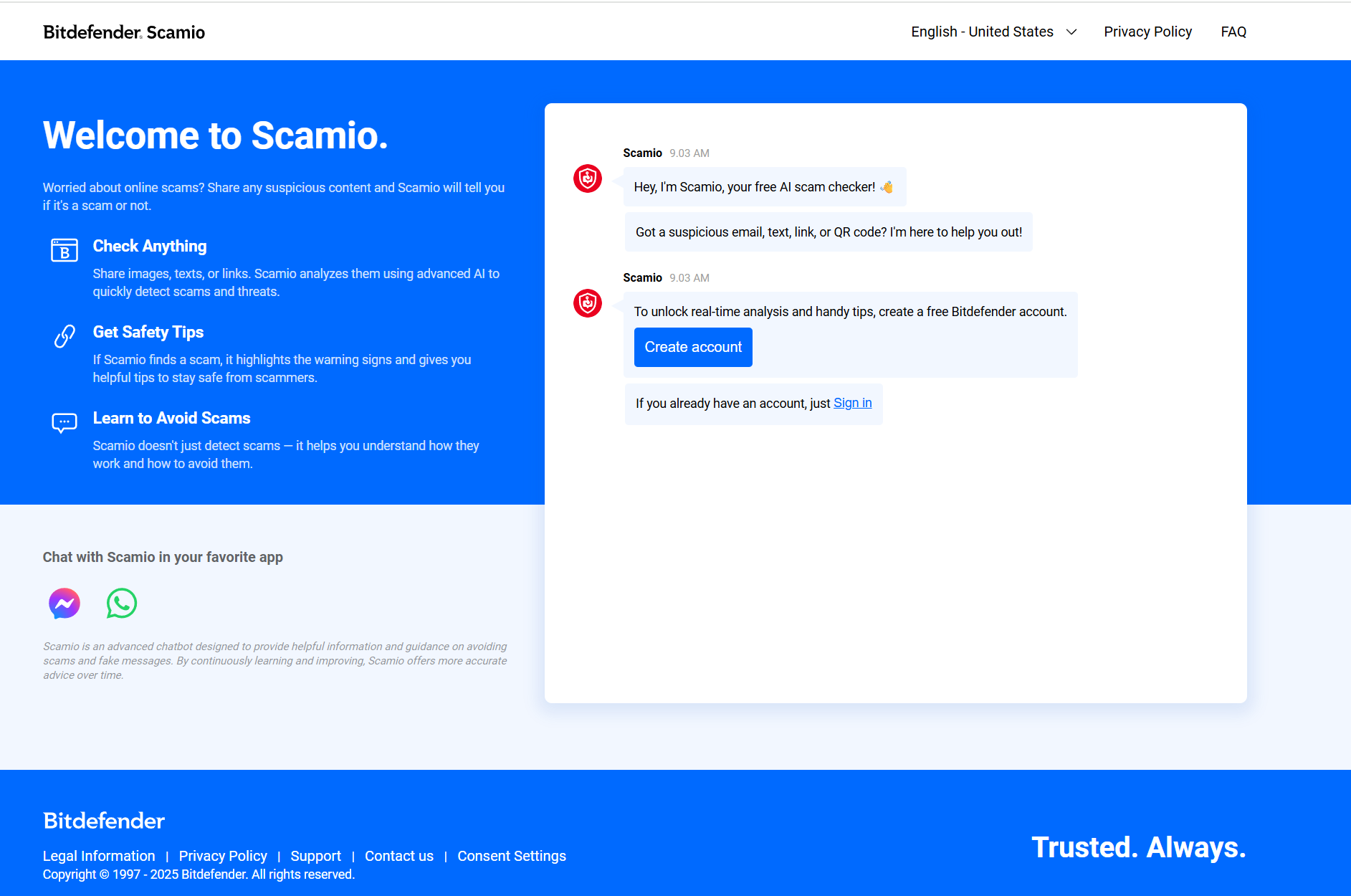

Security manufacturer Bitdefender has developed Scamio, a service that uses AI tools to analyze suspicious emails, links and text messages for phishing attempts and other criminal intentions.

Bitdefender Scamio accepts texts of all kinds and uses AI to analyze them for signs that they are spam or phishing attempts.

Sam Singleton

This article originally appeared on our sister publication PC-WELT and was translated and localized from German.