Early-phase generative artificial intelligence, or “request/response AI,” has not yet lived up to the expectations implied by the hype. We believe agentic AI is the next level of artificial intelligence that, while building on generative AI, will go further to drive tangible business value for enterprises.

Early discussions around agentic AI have focused on consumer applications, where an agent acts as a digital assistant to a human. But we feel that in a consumer setting this is an open-ended and complex problem. Rather, we see more near-term potential for agentic AI in enterprise use cases, where the assignment is easily scoped and there is a clear map to guide agents.

In this Breaking Analysis, we share our thoughts on the emerging trends around agentic AI. We’ll define what it is, how agentic builds on (and is additive to) generative AI, what’s missing to make it real, what the stack components look like and some of the likely players in the space.

Consumer web agents vs. enterprise agents

Before we get into the details, we’d like to clarify that we believe agentic AI has great potential in the enterprise but is a somewhat perilous journey for consumer AI. In particular, it’s our view that building consumer agents, where you no longer go to websites but machines go there for you and perform tasks, is like sailing off the end of the earth: The ship has no destination and ends up a derelict.

Enterprise agents, on the other hand, have a defined destination and a clear route to get there. The ship will reach its destination because it knows where it’s going.

An agent needs to navigate systems to perform work on a user’s behalf. The core of an agent is figuring out how to navigate to get that work done. To do that, it needs a map and tools. Consumer agents exist mostly in the wide-open territory of the World Wide Web, and that’s like Ferdinand Magellan declaring that he’s going to circumnavigate the globe and sailing off toward the west. For all he knew, he might have fallen off the end of the earth. He was trying to navigate the whole world without much of anything in the way of a map, as we depict on the left below.

Enterprise agents, on the other hand, are like running errands in a town where you have a map or already know where things are, where the grocery store is, the gas station, the library, as we show on the right side.

Enterprises have bounded and reasonably well-defined routes and tasks. Over time, consumer agents will become effective, but it’s going to take much more technical work to get there. Agents in the enterprise can do more valuable work much sooner and drive what is currently an elusive ROI.

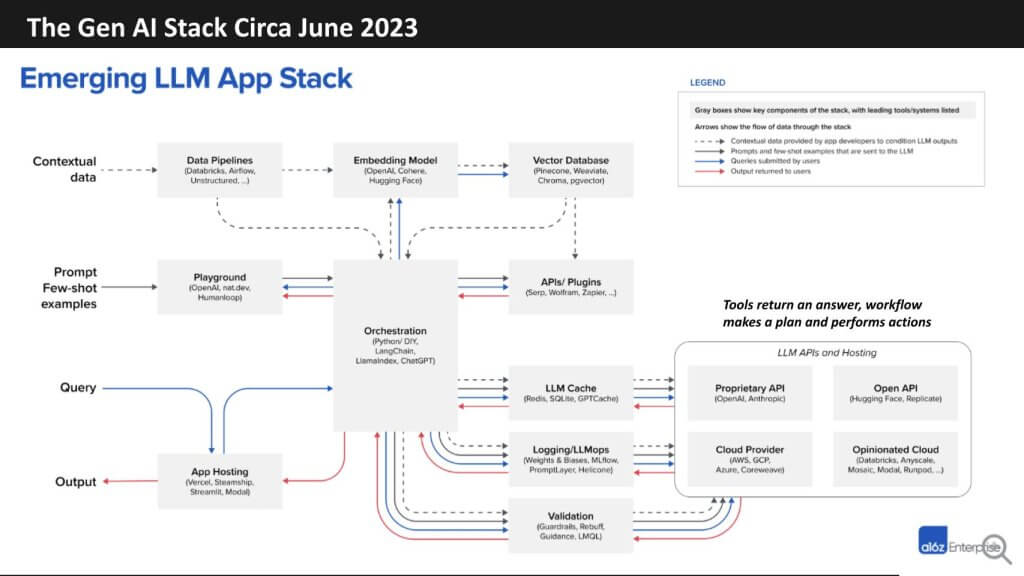

The gen AI stack 18 months ago

Let’s go back in the time machine to just last January. The graphic below was introduced by a16z at the time, describing the emerging gen AI stack. It basically shows what we called up front a request/response model. In other words, a request is initiated via natural language and data is accessed through a retrieval-augmented generation, or RAG, pipeline to return an answer.
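To make the request/response pattern concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular product works: the `DOCUMENTS` corpus, the word-overlap `retrieve` function (a simple stand-in for vector search) and the `llm` callable are all hypothetical.

```python
from collections import Counter

# Hypothetical in-memory corpus standing in for enterprise documents.
DOCUMENTS = {
    "returns": "Customers may return items within 30 days of delivery.",
    "shipping": "Standard shipping takes five business days.",
    "warranty": "Hardware carries a one year limited warranty.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,?!").lower() for word in text.split()]


def retrieve(query: str, k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    query_words = Counter(tokenize(query))
    scored = []
    for doc_id, text in DOCUMENTS.items():
        overlap = sum((query_words & Counter(tokenize(text))).values())
        scored.append((overlap, doc_id))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def build_prompt(query: str, doc_ids: list[str]) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(DOCUMENTS[doc_id] for doc_id in doc_ids)
    return f"Context:\n{context}\n\nQuestion: {query}"


def answer(query: str, llm) -> str:
    """One request in, one response out: retrieve, then generate."""
    return llm(build_prompt(query, retrieve(query)))
```

Passing a stub such as `lambda prompt: prompt.splitlines()[1]` as `llm` simply echoes the retrieved passage. A real model would generate text from the prompt, and nothing in this loop plans or acts beyond returning that single answer.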

The process is fast. It’s quite impressive, really, but the answer is often just OK, and the same or similar queries very often generate different answers. As such, this model has delivered limited return on investment for enterprise customers. Sure, there are some nice use cases, such as code assist, customer service, writing content and the like.

However, other than the price of Nvidia Corp., Broadcom Inc. and some of the other big AI plays, the returns haven’t been there for mainstream enterprise customers. We see the next incarnation of AI building on the previous picture with some notable additions that we’ll address in a moment.

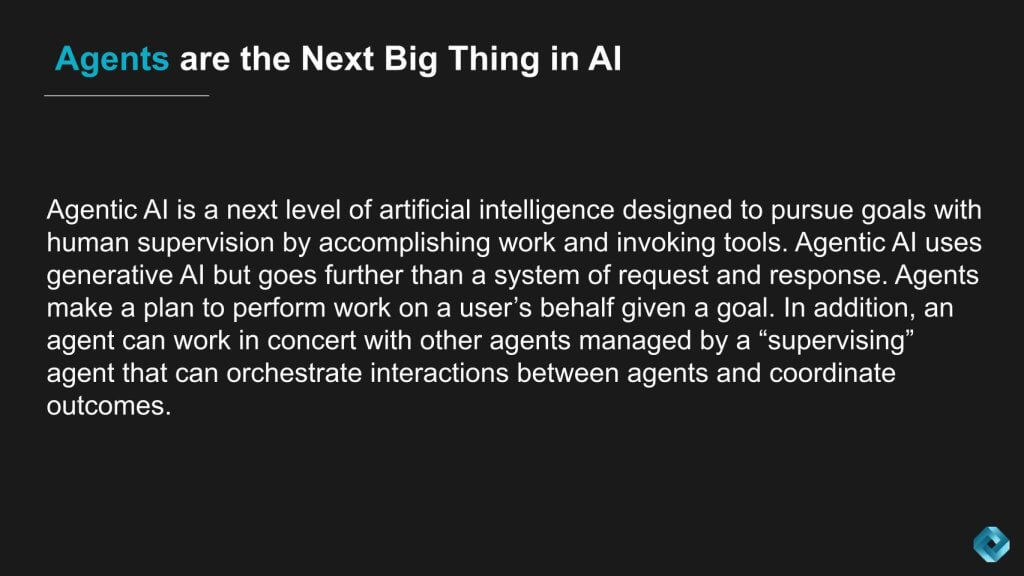

Agentic AI definition

Before we get into what’s new, let’s define agentic AI as we see it. Agentic AI is the next level of artificial intelligence designed to pursue goals with human supervision. The agent accomplishes work and invokes tools to do so.

Agentic AI uses generative AI but goes further than a system of request and response. Agents in this model make a plan to perform work on a user’s behalf, given a specific goal. In addition, an agent can work in concert with other agents managed by a supervising agent that can orchestrate interactions between agents and coordinate outcomes.
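A supervising agent coordinating other agents can be sketched in Python as follows. This is a toy under loud assumptions: the `Agent` and `Supervisor` classes, the fixed plan for the hypothetical `fulfill_order` goal and the two worker functions are invented for illustration. In a real system we would expect a model to generate the plan rather than look it up.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    # Reads the shared context and returns the new facts it worked out.
    work: Callable[[dict], dict]


@dataclass
class Supervisor:
    agents: dict[str, Agent]
    history: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[str]:
        # Hypothetical hardcoded plan; a stand-in for model-generated planning.
        plans = {"fulfill_order": ["inventory", "shipping"]}
        return plans[goal]

    def run(self, goal: str, context: dict) -> dict:
        """Invoke each agent in plan order, merging its output into the context."""
        for name in self.plan(goal):
            context = {**context, **self.agents[name].work(context)}
            self.history.append(name)
        return context


def check_inventory(context: dict) -> dict:
    return {"in_stock": context["stock"] >= context["quantity"]}


def schedule_shipping(context: dict) -> dict:
    # Depends on the fact produced by the inventory agent.
    return {"shipment": "scheduled" if context["in_stock"] else "backordered"}


supervisor = Supervisor({
    "inventory": Agent("inventory", check_inventory),
    "shipping": Agent("shipping", schedule_shipping),
})
```

Calling `supervisor.run("fulfill_order", {"stock": 5, "quantity": 3})` runs the inventory agent first and lets the shipping agent build on its result, which is the coordination role described above in miniature.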

Agentic example in supply chain

Building on something we touched on last week, let’s talk about sales and operations planning and the way Amazon.com Inc. does it. We’re talking about Amazon.com, not Amazon Web Services. Amazon forecasts sales for 400 million stock-keeping units weekly, looking five years into the future. The reason it needs to go so far into the future is that it has different agents that do different things depending on the time frame and what type of work they need to coordinate.

For example, a long-term planning agent might figure out how much distribution center capacity it needs to build. Another might configure the layout of each distribution center, whether it already exists or has not yet been built. Another might figure out how much of each SKU to order from each supplier for the next delivery cycle. Another agent figures out how to cross-dock deliveries when they arrive so the inventory gets distributed to the right location. Then, after the customer order is received, another agent has to figure out how workers should pick, pack and ship the items for that order.

The salient point is that these agents need to coordinate their plans in the service of some overarching corporate goal, such as profitability, with the constraint of meeting the delivery time objectives that Amazon sets out. Importantly, the decisions one agent makes about distribution center configurations (for example) have to inform how another agent will be able to pick, pack and ship the order.

In other words, the analysis that each agent does has to inform all the other agents’ analyses. So, it’s not just a problem of figuring out what one agent does, rather it’s about coordinating the work and the plans of many agents and accounting for the interdependencies.
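One way to picture these interdependencies is as a dependency graph between agents. The Python sketch below is a simplification: it assumes dependencies run in one direction only, whereas the point above is that analyses inform each other in many directions. The agent names and the `DEPENDS_ON` edges are our own illustrative guesses, not a description of Amazon’s actual systems.

```python
from graphlib import TopologicalSorter

# Hypothetical map: each agent -> the agents whose plans it builds on.
DEPENDS_ON = {
    "capacity": set(),
    "layout": {"capacity"},
    "ordering": {"capacity"},
    "cross_dock": {"layout", "ordering"},
    "pick_pack_ship": {"layout", "cross_dock"},
}


def planning_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Return an order in which every agent plans after the agents it depends on."""
    return list(TopologicalSorter(depends_on).static_order())


def affected_by(agent: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every agent that must revisit its plan when `agent` changes its plan."""
    affected: set[str] = set()
    frontier = [agent]
    while frontier:
        current = frontier.pop()
        for other, dependencies in depends_on.items():
            if current in dependencies and other not in affected:
                affected.add(other)
                frontier.append(other)
    return affected
```

In this toy graph, `affected_by("layout", DEPENDS_ON)` returns the cross-dock and pick, pack and ship agents, mirroring the example of a distribution center configuration decision rippling through to fulfillment.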

The agents do the work based on objectives set by humans. The resulting plan is presented to the humans for review, then put into action or revised and optimized as needed. It’s the combination of human intuition and machine efficiency that makes this so powerful.

Scaling agentic AI across industries

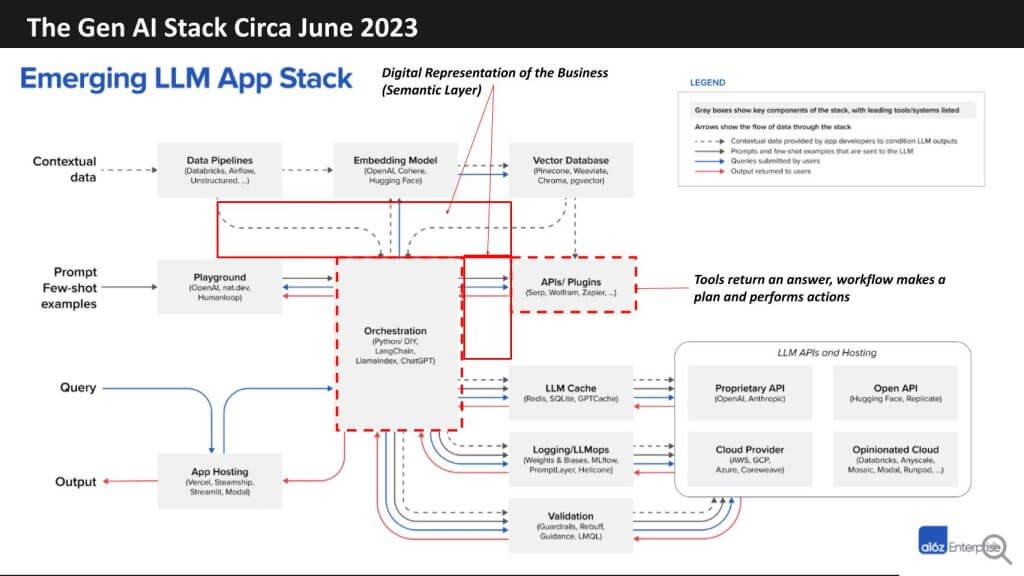

Imagine taking the previous example from a sophisticated, resource-rich firm such as Amazon and creating a capability in software that all enterprises can leverage to create systems of agency. As we said, we see the next wave of AI as agentic AI, building on that previous a16z stack with some additions that we’re showing below.

We’ve taken that picture that a16z developed last January and highlighted the areas where we see change coming. In particular, we start above with the orchestration box in the middle of this diagram. Today, the orchestration is all about using tools, be they large language models or frameworks such as LangChain or high-level languages such as Python, to call models and data. In the future, we see the model doing more of the orchestration by invoking a sequence of actions using multiple workflows that call apps and leverage data inside those apps.

Thinking about tools today, they return an answer to a natural language request. In the future, we see agents doing much more, where the workflows are tapped to make a plan and perform actions. In the empty boxes that are shaped like an L on the diagram, we show the coming together of the digital and physical worlds, something that we’ve talked about extensively on previous Breaking Analysis episodes. This is where the world of people, places and things becomes harmonized in a digital representation of the business, what is sometimes referred to as the “semantic layer.”

Evolving today’s LLM stack – LLMs become LAMs

Let’s talk further about building on the framework from Andreessen Horowitz and how it needs to evolve to support agents. First of all, where we have the dotted lines around the box that says, “APIs and plug-ins,” those move from calling tools to actions that will invoke a legacy operational app or an analytic model, and the action is essentially a workflow building block.

A piece of work that’s on the operational side, or an analytic model, might be, “Tell me what should happen in the business or what has happened, and therefore what should happen next.” Those are essentially up-leveling tools into actions. And in the language of LLMs, these become verbs.

Come back to the orchestrator in the middle: Most of the workflow orchestration done today with LLMs comes from the programmers specifying something in code. In the future, the LLM becomes a large action model, or LAM, and it generates the plan of action or the workflow.
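The shift from programmer-specified workflows to generated ones can be illustrated with a small Python sketch. A loud caveat: the deterministic forward-chaining planner here is only a stand-in for what we expect a LAM to do, and the `Action` class and the three actions are hypothetical. The idea it shows is that once actions declare what they need and what they produce, the sequence can be derived from a goal rather than written by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    needs: frozenset[str]     # facts required before the action can run
    produces: frozenset[str]  # facts available after it has run


# Hypothetical actions wrapping operational apps and an analytic model.
ACTIONS = [
    Action("forecast_demand", frozenset({"sales_history"}), frozenset({"demand_forecast"})),
    Action("check_inventory", frozenset({"sku"}), frozenset({"stock_level"})),
    Action(
        "create_purchase_order",
        frozenset({"demand_forecast", "stock_level"}),
        frozenset({"purchase_order"}),
    ),
]


def generate_plan(goal: str, known: set[str], actions: list[Action] = ACTIONS) -> list[str]:
    """Forward-chain through runnable actions until the goal fact is produced."""
    known = set(known)  # copy so the caller's set is not modified
    plan: list[str] = []
    while goal not in known:
        runnable = [a for a in actions if a.name not in plan and a.needs <= known]
        if not runnable:
            raise ValueError(f"no plan reaches {goal!r}")
        step = runnable[0]
        known |= step.produces
        plan.append(step.name)
    return plan
```

This naive planner may schedule actions that are not strictly required for the goal. It is meant only to show the workflow being produced from declared actions instead of being spelled out in code.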

For that to work, it needs to up-level the raw data the RAG pipeline typically looks at, to create a digital representation of the business. This is the map or knowledge graph that says, “What are the people, places and things in the enterprise and the activities that link them?” That’s what enables the agent to figure out how to navigate to accomplish its goal.
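A minimal Python sketch of such a map, and of an agent navigating it, might look like the following. The entities and activities in `TRIPLES` are invented for illustration, and a production knowledge graph would be far richer than a list of three-part tuples.

```python
from collections import deque

# Hypothetical (thing, activity, thing) triples describing a tiny business.
TRIPLES = [
    ("sku:widget", "supplied_by", "supplier:acme"),
    ("supplier:acme", "ships_via", "carrier:fastfreight"),
    ("carrier:fastfreight", "delivers_to", "dc:reno"),
    ("sku:widget", "stocked_at", "dc:dallas"),
]


def build_graph(triples):
    """Index the triples by subject so outgoing links can be followed."""
    graph = {}
    for subject, activity, obj in triples:
        graph.setdefault(subject, []).append((activity, obj))
    return graph


def find_route(graph, start, goal):
    """Breadth-first search; returns the (activity, entity) hops or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for activity, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [(activity, neighbor)]))
    return None
```

With this toy map, `find_route(build_graph(TRIPLES), "sku:widget", "dc:reno")` walks from an item to its supplier, to the carrier and on to the distribution center. Without the map, the agent would have no way to know those links exist.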

In the case of Amazon, the agent needs to understand what’s in the forecast to know how different inventory items relate to which suppliers, what those suppliers can produce, and how and where logistics can deliver their output. That’s the role of that map. In the future, we see this becoming a horizontal capability that can be applied to any industry through a variety of software components that we’ll talk about next.

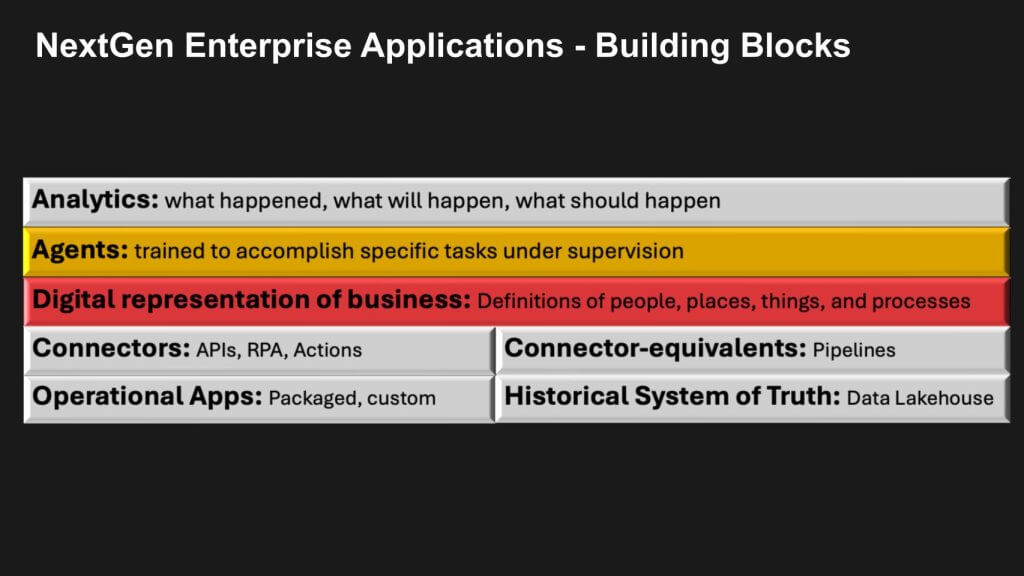

The building blocks of agentic AI

Let’s look into the components for agentic AI and double-click on the missing pieces that we just described. As we said earlier, the orchestration layer changes from calling data to calling apps and using data inside those apps to inform the actions.

The bottom right side of the chart above shows the connectors between raw data and a data product (that is, a semantically meaningful object) and the end result of complex pipelines (that is, the system of truth in a lakehouse). The lower left side is how you elevate operational apps to create actions. Gen AI is useful, because it enables natural language queries and allows us to make sense of application programming interfaces that can create a connector layer on an API and then turn it into an action.

The role of RPA

Think of robotic process automation in this respect as the plumbing. Today it relies on software robots that are wired to a screen layout or an API. With agentic AI, we see an LLM being able to learn to navigate a screen, or an API when one is available, or to learn by observing.

The point is, much of today’s RPA is hardwired with fragile scripts. This is a real problem that customers cite in their complaints about legacy RPA. We envision a more robust automation environment that is much more resilient to change as these hardwired scripts become intelligent agents. Does RPA go away? No. You don’t just rip out the plumbing.
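The fragility problem can be shown in a few lines of Python. Everything here is hypothetical: the two screen layouts, the field labels and the `SYNONYMS` table, which is a crude stand-in for the understanding we would expect an LLM to supply.

```python
# Two versions of the same screen; the second reorders and renames a field.
SCREEN_V1 = [
    {"label": "Customer Name", "value": "Acme"},
    {"label": "Invoice Total", "value": "120.00"},
]
SCREEN_V2 = [
    {"label": "Invoice Amount", "value": "120.00"},
    {"label": "Customer Name", "value": "Acme"},
]


def hardwired_read_total(screen):
    """Legacy-style script: assumes the total is always the second field."""
    return screen[1]["value"]


# Hypothetical synonym table standing in for what a model would infer.
SYNONYMS = {
    "invoice total": {"invoice total", "invoice amount", "amount due"},
}


def resilient_read(screen, concept):
    """Find a field by meaning rather than by position on the screen."""
    wanted = SYNONYMS[concept]
    for field in screen:
        if field["label"].lower() in wanted:
            return field["value"]
    raise LookupError(f"no field matching {concept!r}")
```

On the first layout both functions return the invoice total. On the second, `hardwired_read_total` silently returns the customer name, while `resilient_read` still finds the right field.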

As well, gen AI can help make building pipelines easier and less complex. The power of a digital representation of the business is that it enables building pipelines essentially on demand.

Deeper dive into the agentic AI stack

We love to geek out on Breaking Analysis. We get excited about these marketecture diagrams. But the real purpose of having these low-level building blocks is that we can no longer buy the applications that run the enterprise off the shelf. Twenty-five years ago, you bought SAP for enterprise resource planning and Siebel for customer relationship management.

Then for emerging businesses, it was NetSuite ERP and Salesforce CRM. But those were for the cookie-cutter business processes. Now enterprises need to build systems that embody exactly how they want to run their businesses. These are custom, but they need easy-to-use building blocks.

This starts as the foundation level with the application and data estate that companies already have. As we described earlier, that needs to be up-leveled and harmonized into a common language, like nouns and verbs. In this case, nouns are the data objects and verbs are the actions that we were talking about in terms of the connectors. That becomes the so-called semantic layer or digital representation of the business.

To be clear, that’s why we’ve shaded that layer in red. It’s not all there today in the schematic, and that is the most valuable piece of real estate in enterprise software for the next 10 years. That is what is being built in various forms and is a key ingredient to make the Amazon.com example more applicable across enterprises.

We believe this is the foundation on which agentic AI will be built. This layer dictates how much is in place, what type of tools you can use, and therefore what type of applications are possible.

Today, on the data side, we start building code by hand. We build code that turns raw data using pipelines into, let’s say, business intelligence metric definitions. These are the final data products, and we might use Fivetran and dbt and a Spark pipeline to complete the task and feed dashboards to the business.

But when the governance catalog we’ve talked about in the past, especially last week, fully maps all the data into semantically meaningful objects, as Informatica’s in fact does today, then you can build new objects or data products on demand by automatically generating the pipelines. And that gets you to the bookings, billings and backlog type metrics, or supplier on-time delivery performance.
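As a rough illustration of generating a pipeline from semantic definitions, the Python sketch below turns a metric definition into SQL and runs it against a SQLite connection. The `METRICS` dictionary, the `orders` table and its columns are assumptions made up for this example. It does not represent how Informatica, dbt or any other product mentioned here works.

```python
import sqlite3

# Hypothetical semantic definitions: each metric is declared once, by name.
METRICS = {
    "bookings": {
        "table": "orders",
        "expression": "SUM(amount)",
        "filter": "status = 'booked'",
    },
    "backlog": {
        "table": "orders",
        "expression": "SUM(amount)",
        "filter": "status = 'open'",
    },
}


def generate_sql(metric, group_by=None):
    """Build the query for a metric from its definition.

    Definitions and group_by are interpolated directly, so they must come
    from a trusted catalog, never from end-user input.
    """
    definition = METRICS[metric]
    select = f"{definition['expression']} AS {metric}"
    if group_by:
        select = f"{group_by}, {select}"
    sql = f"SELECT {select} FROM {definition['table']} WHERE {definition['filter']}"
    if group_by:
        sql += f" GROUP BY {group_by}"
    return sql


def run_metric(conn, metric, group_by=None):
    """Generate the query on demand and execute it."""
    return conn.execute(generate_sql(metric, group_by)).fetchall()
```

The point of the sketch is that asking for a new cut of a metric, say backlog by region, requires no new hand-built pipeline, only a call such as `run_metric(conn, "backlog", "region")`.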

We see products like the AtScale and dbt metrics layer and Looker’s LookML, where you define these by hand today. That’s on the data side. On the application side, you will use LLMs to up-level raw application APIs or screens into actions, and this is the opportunity for the RPA vendors.

These are the raw building blocks. If you go up a level, you start to be able to build a digital representation of the entire business. A firm such as Palantir Technologies Inc. is well ahead. Celonis Inc. is mining the logs of all application activity, to stitch together a process map of the entire company. Salesforce Inc.’s Data Cloud does this for Customer 360, and the customer experience connector maps all the processes for nurturing a customer from lead to conversion. And as we talked about in the past, RelationalAI Inc. and EnterpriseWeb LLC are creating the new foundation for application definitions.

This combines both the application logic and the database in a knowledge graph so that you can build an end-to-end definition. The point is, we have some of the pieces, but we don’t have all the pieces, so we can’t put together the full map yet. But some have put pieces in place, and that’s what will make building the agents more productive.

The vision of next-gen applications

Let’s come back to our vision, the conceptual view of the world and what the endgame is, as shown above. We envision a digital assembly line for knowledge workers that can be configured based on the attributes and understanding of the business. Think of agentic AI as assembly lines. They’re purpose-built for knowledge workers, and we imagine turning the enterprise into a digital platform that organizes the work for everyone in the company.

To do this, we need to be able to construct something where the digital platforms that we’re building will create assembly lines on demand for specific projects. And the work of building these digital factories is ongoing. For example, the management systems are constantly evolving to become ever more sophisticated.

The goal is that when there’s work to be done, you can compose a process end-to-end very quickly, and it’s extremely precise.

The role of process mining and orchestration

The clip below is from a conversation that George Gilbert had earlier this year with Vijay Pandiarajan of MuleSoft (Salesforce) that provides some useful details on this concept.

One of the really interesting things is the efficiency in which work gets done, whether it’s with people or whether it’s with agents. I really think about them as processes with people, systems and bots. And really, we’ve got all of those functioning here. One way to look at the overall effectiveness of a particular process, we gather the analytical information about the execution of these things, the auditability, the trace logs, all of that. We have all of that information. We have the information that’s in the Customer 360 about what the customer has done as well, and the data graphs that are inside Data Cloud. Process mining becomes a really interesting way for us then to look at how are these things transpiring, and what is the most efficient way in which these elements can be brought together.

We haven’t said much about that top layer, what we’re calling orchestration. But really, a lot of these end-to-end experiences start with defining what an orchestration should look like, and then that’s when these people, systems, and bots are actually working together. We now have the analytical information coming out of that system. And then process mining lets us go back and see how closely were we aligned to what we had initially set out, right? What is happening with our overall orchestration? Are we actually hitting the goals and the targets that we had?

Vijay Pandiarajan, MuleSoft (Salesforce)
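The idea of using process mining to check execution against the intended orchestration can be sketched in Python. This is a toy under stated assumptions: the `INTENDED` lead-to-order sequence and the `EVENTS` trace log are hypothetical, and real process mining tools do far more than compare sequences.

```python
from collections import Counter

# Hypothetical orchestration as originally designed.
INTENDED = ["lead_created", "qualified", "quote_sent", "order_booked"]

# Hypothetical trace log of (case_id, activity), in time order within each case.
EVENTS = [
    ("c1", "lead_created"), ("c1", "qualified"), ("c1", "quote_sent"), ("c1", "order_booked"),
    ("c2", "lead_created"), ("c2", "quote_sent"), ("c2", "order_booked"),
    ("c3", "lead_created"), ("c3", "qualified"), ("c3", "quote_sent"), ("c3", "order_booked"),
]


def traces(events):
    """Group the log into one ordered activity list per case."""
    by_case = {}
    for case_id, activity in events:
        by_case.setdefault(case_id, []).append(activity)
    return by_case


def variants(events):
    """Count how many cases followed each distinct path."""
    return Counter(tuple(trace) for trace in traces(events).values())


def conformance(events, intended):
    """Share of cases whose path exactly matches the intended orchestration."""
    by_case = traces(events)
    matching = sum(1 for trace in by_case.values() if trace == intended)
    return matching / len(by_case)


def skipped_steps(events, intended):
    """For each non-conforming case, list the intended steps that never occurred."""
    return {
        case_id: [step for step in intended if step not in trace]
        for case_id, trace in traces(events).items()
        if trace != intended
    }
```

Here two of three cases follow the design, and `skipped_steps` shows that the remaining case went straight from lead to quote without qualification. Note that this only detects missing steps. Reordered or repeated steps would need a fuller comparison.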

Organizations as digital factories

Building on the Amazon.com example from earlier, and considering Vijay’s commentary, let’s examine more deeply how agents can support teams to build management systems.

What we heard from Vijay was that the analytics informed how work was done and were used to figure out how can we do that better. This reminds us of how over a century ago, factories were designed around time-and-motion studies to analyze how work was done to figure out how it could be done more efficiently.

This metaphor of building the digital factory is that platforms are the assembly line for knowledge work. Firms used to build management systems (for example, technology), mostly around people and processes to make organizations more productive. In the future, we predict these management systems will be built around agents and software that learn from their people and the operations, and they’ll use the data, as Vijay was talking about, from observing this activity to constantly improve.

Specifically, building these systems will involve continually improving the models about how the parts of the business work, should work and how they can work better. Importantly, all these agents’ prescriptions will be driven by top-level corporate goals, whether profitability, market share, growth of the ecosystem that the company orchestrates, and the like.

The point is that management know-how that used to be in the heads of the management teams starts to get embodied in this system of software components that includes agents and processes that are well-defined. In the future, we envision engineering a system or this digital factory. That is the point of the metaphor.

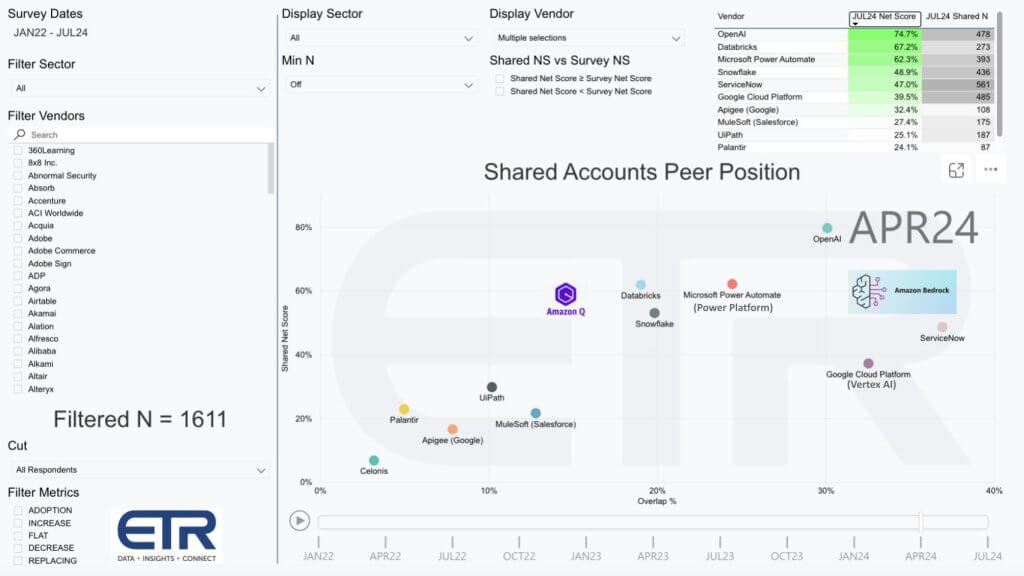

Evaluating some players contributing to the agentic AI trend

Let’s take a look at some of the firms that we see as key players in this agentic AI race, and bring in some of the Enterprise Technology Research data. Below we show data from the April ETR survey. And we’ve had to take some liberties with the categories and companies as there is no agentic AI segment in the ETR taxonomy. So we’re generalizing here.

On the vertical axis is net score or spend velocity on a specific platform. The horizontal axis is overlap or presence within the dataset of more than 1,600 information technology decision-makers. We have a number of representative firms that we think can lead and facilitate agentic AI.

We’ve got OpenAI in the upper right as the key LLM player – they’re off the charts in terms of account penetration. We’ve got UiPath Inc., Celonis, and ServiceNow Inc. in the automation space, and analytics and data platform companies such as Palantir, Snowflake Inc. and Databricks Inc.

We show integration and API platforms such as Salesforce’s MuleSoft and Google’s Apigee. We show Microsoft Corp.’s Power Automate, which is its RPA tool, but we show that as a proxy for the company’s Power Platform, the entire suite. With Google, we’ve superimposed Vertex AI, which is its AI agent builder that it announced at Google Cloud Next in April. And we’ve added Amazon Bedrock, the company’s Model Garden and Amazon Q, its up-the-stack application platform.

Fitting the agentic AI pieces together

What we’ve put together above seems like many tangentially related companies, but in fact, these are all critical players in collecting the building blocks, such as Apigee, which used to be for managing APIs. Those APIs are what gets up-leveled into actions that an agent would know how to make sense out of.

Palantir and Celonis are two different ways of building that digital representation of the entire business. UiPath now has the ability to use gen AI to accelerate how you build connectors that become actions, whether to screens or APIs, and to make them more robust. MuleSoft the same way. Moreover, MuleSoft, like several companies, has low-code tools, which we’ll tie back to Microsoft’s Power Platform, that help citizen developers build workflow agents without being superhuman and having to know how to navigate the open web.

Amazon Q and Amazon Bedrock will eventually be ways for, respectively, citizen developers and pro-code or maybe corporate developers to build agents as well, but Amazon will need a better map of that digital representation of the business. And though Databricks and Snowflake seem to be on top of the world right now, because data is the foundation of all intelligent applications, both of these firms need to somehow (either directly or through their ecosystems) build that representation of the people, places and things in the business. This is important because, as we talked about last week, open data formats such as Iceberg mean that no one company owns the data anymore.

The value is shifting to the tools that process data, govern it and turn it into people, places and things. Then the agents and applications do work on top of data. The reason this is important was summed up at Databricks’ Analyst Day when Chief Executive Ali Ghodsi was discussing Microsoft’s Power Platform, implying it will just have these nice graphical user interface tools that people won’t really need anymore, because people won’t be interacting with apps. The agents will be, so they don’t need a GUI.

But what’s important is that the Power Platform tools, for example, are so far the most advanced we’ve seen for enabling citizen developers to define these agents. Microsoft is still missing some pieces on that digital representation of what the people, places and things are, but it’s coming at it from a user simplicity point of view.

The point is, when you look at the market this way, you can see not only who’s missing what, but how they’re all trying to converge on the same thing but taking different paths. That’s what’s important — that they’re all pursuing the same massive AI opportunity, but they’re coming from different starting points.

Making agentic AI a reality

Let’s wrap up with some of the areas that we see as gaps that need to be filled in order for our agentic AI scenario to play out. As we said up front, we see agentic AI really having an impact in the enterprise, and we see today’s LLMs evolving from models that can retrieve data via a natural language query to large action models, or LAMs, that can orchestrate a workflow.

To really take advantage of agentic AI, we have to connect to legacy apps, and we have to harmonize that data in those applications. And the example we use above is to ensure that things such as customers, bookings, billings and backlog all have the same meaning when applied across the enterprise.

Sounds simple. It isn’t. Being able to understand and take action in near-real time is the future of business, in our view. And tool chains to build and train agents in an ongoing fashion become increasingly important.
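To give a flavor of the harmonization problem, here is a small Python sketch in which two legacy applications describe the same customer and bookings figure in different shapes and units. The record layouts, field names and the `MAPPINGS` table are all hypothetical. Real harmonization also has to reconcile definitions, timing and currency, which this toy ignores.

```python
# Hypothetical records for the same customer from two legacy applications.
CRM_RECORD = {"acct_name": "Acme", "booked_amt": "1200.50"}
ERP_RECORD = {"CUSTOMER": "Acme", "BOOKINGS_CENTS": 120050}

# One mapping per source: common field name -> how to extract it.
MAPPINGS = {
    "crm": {
        "customer": lambda record: record["acct_name"],
        "bookings": lambda record: float(record["booked_amt"]),
    },
    "erp": {
        "customer": lambda record: record["CUSTOMER"],
        # The ERP stores cents; convert to the common unit.
        "bookings": lambda record: record["BOOKINGS_CENTS"] / 100,
    },
}


def harmonize(source, record):
    """Translate a source-specific record into the shared vocabulary."""
    return {name: extract(record) for name, extract in MAPPINGS[source].items()}
```

After harmonization both records reduce to the same object, so an agent can reason about “bookings” without knowing which system a number came from.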

Agents everywhere

We’re going to see agents show up pretty much anywhere gen AI has shown up in enterprise tools. We’re going to see a lot of splashy announcements related to consumer agents. But we think it will be like the consumer web 25 years ago: It burst on the scene with a ton of energy, but we were missing the infrastructure for the consumer web to have a business model, for instance, to deliver goods and services. It literally took decades to get there and Amazon eventually solved the problem with its vision, execution ethos and massive resources.

In the enterprise, on the other hand, we can put agents any place where there’s a tool with a user interface, because at the simplest level an agent can accomplish a sequence of steps on behalf of a user.

In other words, a user expresses intent. The agent does a sequence of activities in a tool, but we can also build workflows. These are simple building blocks where you define the actions and the data an agent needs, both to perform those actions and to figure out which actions to perform. It’s a much more scoped, bounded and narrow problem than solving for consumer use cases.

Going back to the beginning, instead of setting off to circumnavigate the globe, it’s just, “Find my way to the gas station or the grocery store.” It’s a much more tractable problem, and we can produce a lot of tangible value in a short time frame.

What do you think about agentic AI? Is it the next buzzword or the next wave of enterprise value?

Let us know.
