Every content owner needs to ensure it has the correct metadata attached to its video, audio and photo files.

Over the last few months, vendors have begun to leverage generative AI to help broadcasters, streamers and production companies index their assets.

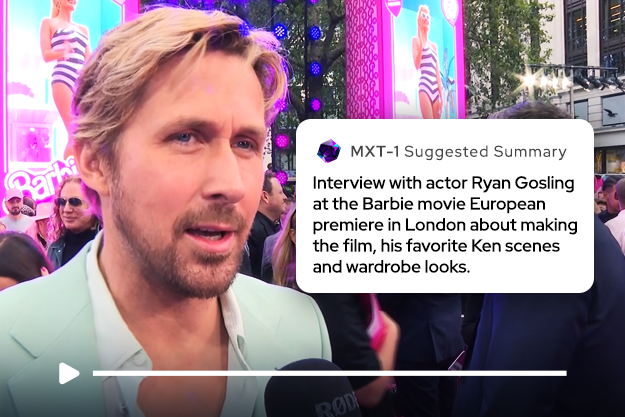

Newsbridge introduced MXT-1 at NAB Show. It is a generative AI indexing technology that produces human-like descriptions of video content, can index more than 500 hours of video per minute, and is aimed particularly at those working with media and sports content.

TVBEurope caught up with Newsbridge CTO Frederic Petitpoint to find out more about the company’s use of generative AI in MXT-1.

What makes MXT-1 unique?

There are a few things that make MXT-1 unique. Its multimodal indexing approach detects faces, text, logos, landmarks, objects, actions and shot types, and produces transcriptions. In addition, it can summarise videos in their entirety as well as by scene, so users know exactly what’s in a piece of footage without needing to spend hours rolling through it.

MXT-1 comes out of beta in September and is being fully deployed, with a refreshed user experience, across all Newsbridge solutions, including Just Index, Media Hub, Live Asset Manager and Media Marketplace.

How was it developed?

MXT-1 was developed in-house by Newsbridge’s AI engineering and research team. We were already exploring the use of natural language models to generate human-like descriptions of video content. Then some of our customers, including 24/7 Arabic news service Asharq News, asked us to provide a solution that would enhance capabilities such as multilingual media indexing and summarisation. Asharq deals with a massive volume of content, indexing around 1,500 hours every month. Complete and accurate content descriptions in both Arabic and English are key for its journalists and producers to quickly find the footage they need to craft stories.

Our customers’ needs acted as a catalyst for us to really push the boundaries of our AI’s abilities and led us to where we are with our technology today.

Why is it important for the broadcast industry?

Traditional, off-the-shelf AI technology is too “general” for broadcast media indexing, and its cost is quite prohibitive, which prevents many organisations from fully automating their archive and live indexing operations. MXT-1 was specifically trained on media, entertainment, and sports content, making it the perfect solution for these use cases.

Our technology is also important for the broadcast industry due to its multilingual capability. MXT-1 produces transcriptions, scene descriptions, and media summaries in multiple languages. In videos where more than one language is spoken, all segments are translated into the language of choice. For international news organisations, and for the globalisation of content, this is crucial for optimising workflows.

In addition, broadcasters are increasingly looking to generate new sources of revenue. Having an AI-powered media archive that’s deeply searchable, and in multiple languages, only enhances the discovery and resale potential of the content. An archive with 10,000 hours of content has an average annual revenue potential of $10 million for the rights holder. The key to unlocking that revenue is knowing what’s inside your media collection, and providing an easy search experience for buyers to find what they’re looking for.

MXT-1 also reduces energy consumption. How does it do that?

We’ve developed our technology in a way that consumes seven times less energy than mono- or uni-modal AI systems. Without getting too techy, we achieve this lower-emissions processing of video content through micro serverless containers, running as much as possible on CPU instead of always using GPU for AI inference.

We know that video represents 82% of internet traffic, and the volume of data stored in data centres is experiencing hyper-growth of 40% per year. Likewise, environmental responsibility and sustainability are increasingly becoming part of regulation and customer requirements globally. Now more than ever it’s important for companies like us to limit our impact as much as possible, and make lower emissions a big focus in our R&D.

Why did you decide to use generative AI rather than machine learning?

The two are related — generative AI is a specific application of machine learning.

In Newsbridge’s MXT-1 pipeline, we use generative AI through one of our fine-tuned large language models (LLMs) to generate human-like descriptions (i.e., transforming vectors to text so that humans can understand). We are also using machine learning processes such as transcription, landmark detection, and detecting face vectors to feed our LLM.
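The flow described above, in which upstream detectors feed a language model that writes the description, can be illustrated with a minimal sketch. This is not Newsbridge's implementation: the `ShotSignals` fields, the `build_prompt` format and the `echo_llm` stand-in are all hypothetical, and the sketch assumes the face and landmark detections have already been resolved to readable labels rather than raw vectors.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ShotSignals:
    """Detector outputs for one shot (hypothetical field names)."""
    transcript: str = ""
    faces: List[str] = field(default_factory=list)
    landmarks: List[str] = field(default_factory=list)


def build_prompt(signals: ShotSignals) -> str:
    """Flatten the detector outputs into a text prompt for a language model."""
    lines = ["Describe this shot in one sentence using the facts below."]
    if signals.faces:
        lines.append("People: " + ", ".join(signals.faces))
    if signals.landmarks:
        lines.append("Landmarks: " + ", ".join(signals.landmarks))
    if signals.transcript:
        lines.append("Speech: " + signals.transcript)
    return "\n".join(lines)


def describe_shot(signals: ShotSignals, llm: Callable[[str], str]) -> str:
    """Send the assembled prompt to any text-in, text-out model."""
    return llm(build_prompt(signals))


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model: drops the instruction line and joins the facts."""
    facts = prompt.split("\n")[1:]
    return "; ".join(facts)


signals = ShotSignals(
    transcript="Welcome to the final.",
    faces=["Presenter A"],
    landmarks=["Wembley Stadium"],
)
description = describe_shot(signals, echo_llm)
print(description)
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider; a real system would replace `echo_llm` with a call to a fine-tuned model.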

There’s a lot of discussion around the use of generative AI and what that means for human employees – what are your thoughts on that?

We’re certainly experiencing an AI revolution, and let’s be real, generative AI will absolutely transform jobs. We’ve already seen some companies announcing layoffs across editorial teams, claiming generative AI could automate a big chunk of their work. It really depends on the type of editorial work we are talking about — ChatGPT is very good at rephrasing, for example, so I do see GenAI as a tool.

Sometimes I use Midjourney to bring my ideas to life in presentations and pitch decks, and anyone who has done something similar will know that “prompt engineering” is becoming a real skill, and “prompt engineer” probably even a new job in the future!

We must remember that AI models are trained with what humans have done and published on the internet in the past, not with what humans are planning to do in the future.

I don’t believe that AI will completely take over and eliminate media jobs. Bias, inconsistencies, and inaccuracies can creep in very quickly — we’ve all seen the stories about AI hallucinations. There will always be a need for human oversight and human connection, especially when using AI in broadcast news settings.

For media indexing specifically, deeper learning leads to more accurate results, and ongoing training is needed for AI to precisely identify the key people, objects, locations, context and actions that matter to ever-evolving organisations. I believe that the role of the media archivist in the future will resemble that of a content guardian. Their daily work will reflect the work of no-code data scientists. Media archivists will be able to explore video as if it were data, using simple search queries.

How do you see generative AI developing for the media industry?

MXT-1 uses LLMs to describe every shot, just like a human would. But in the future, we want to push the boundaries of video understanding and unlock the possibilities for various types of creative storytelling.

One of our customers is already using MXT-1 to generate SEO and social media descriptions. Our AI suggests engaging copy for YouTube and OTT elements including titles, descriptions, keywords, and even automatic video chaptering for optimal audience engagement.

We’re also starting to deploy fine-tuning in our summarisation models. This enables the AI to produce text that is closely aligned with a customer’s tone of voice and reflects the specific acronyms and other shorthand used within the organisation.

Can we expect Newsbridge to include it in more products in the future?

MXT-1 is our core technology, and it already powers all of our products, so yes! We continuously design and code to make it as seamless as possible for our users. Currently, we release two to three improvements every month. Generative AI technology is always evolving, and we’re excited to keep evolving our products’ capabilities along with it.