Artificial intelligence continues to lead all enterprise information technology sectors by a wide margin in terms of spending momentum. But that momentum has not led to an across-the-board boost in productivity or meaningful revenue gains for firms.

Specifically, the adoption of generative AI is increasing steadily, but the use cases are not yet self-funding. As such, the outlook for IT spending, while slowly improving in the second half of 2024, remains constrained.

In this Breaking Analysis, we take a midyear checkpoint on AI adoption and its relationship to IT spending, with a closer look at how IT decision makers are deploying gen AI in production, some old and new blockers, and what we think needs to happen to generate greater AI returns for enterprise customers.

AI momentum soars above all sectors

This chart shows the spending profiles for the various sectors tracked in Enterprise Technology Research’s quarterly Technology Spending Intentions Survey, or TSIS. Net Score, or spending velocity, is shown on the vertical axis, and Pervasion, or penetration into the data set for the sector, is shown on the horizontal axis. The N in the survey is more than 1,700 IT decision makers, or ITDMs. The red dotted line at 40% indicates a highly elevated Net Score.
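For readers unfamiliar with how a spending-velocity figure like Net Score is derived, here is a minimal sketch of the arithmetic. It assumes the commonly cited ETR definition (share of respondents adopting for the first time plus those increasing spend, minus those decreasing spend and those replacing, with flat responses treated as neutral); the `SpendingResponses` structure, the `net_score` function and all percentages are hypothetical illustrations, not ETR’s data or code.

```python
from dataclasses import dataclass


@dataclass
class SpendingResponses:
    """Shares of respondents (0-100) citing a sector, by spending intention.

    Hypothetical structure for illustration only; not ETR's data schema.
    """
    adopting: float    # adding the sector for the first time
    increasing: float  # spending more than the prior period
    flat: float        # spending about the same (neutral in the score)
    decreasing: float  # spending less than the prior period
    replacing: float   # replacing or retiring the sector


def net_score(r: SpendingResponses) -> float:
    """Assumed definition: (% adopting + % increasing) - (% decreasing + % replacing)."""
    total = r.adopting + r.increasing + r.flat + r.decreasing + r.replacing
    if abs(total - 100.0) > 1e-6:
        raise ValueError(f"shares must sum to 100, got {total}")
    return (r.adopting + r.increasing) - (r.decreasing + r.replacing)


# Hypothetical sector: 10% adopting, 45% increasing, 35% flat,
# 7% decreasing, 3% replacing.
example = SpendingResponses(adopting=10, increasing=45, flat=35, decreasing=7, replacing=3)
score = net_score(example)
print(f"Net Score: {score:.0f}%")
# 40% is the "highly elevated" line described in the text.
print("Highly elevated" if score >= 40 else "Below the 40% line")
```

Note that a sector can post a high Net Score with modest Pervasion, which is why the two axes are read together.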

You can see the steady climb for machine learning and AI since its momentum bottomed at 40% eight quarters ago, in October 2022. Prior to the latest ETR survey, we generally saw across-the-board momentum compression for almost all other sectors. However, in the latest survey, we’re seeing accelerated momentum for many sectors, including analytics, cloud, containers, data platforms, networking, robotic process automation and servers.

IT spending outlook ticks up

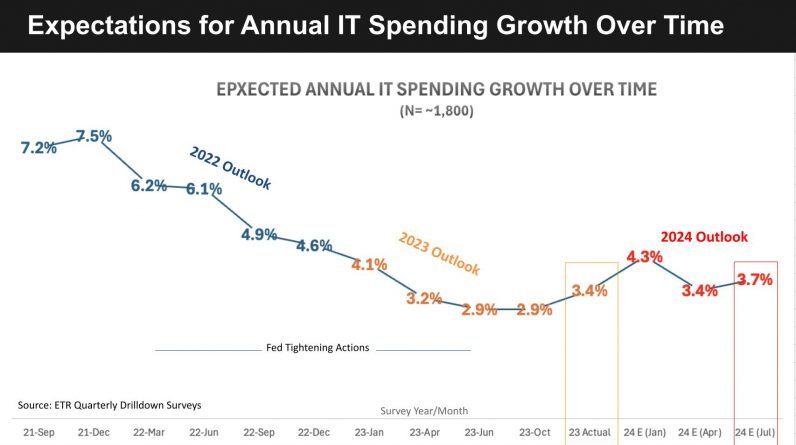

This across-the-board trend is supported by the macro spending outlook. In this graph we’re showing the expectations for annual IT spending growth over time.

We’ve reported before how, coming out of the pandemic, IT spending growth expectations were inversely correlated with interest rates. That trend continued through the early fall of 2023, but we finished last year stronger than expected, at 3.4% growth, and came into 2024 expecting 4.3% growth for this year. That expectation moderated to 3.4% in the second quarter, but in the latest survey we’re seeing some renewed strength, with a slight uptick to 3.7%. The macro remains uncertain, however, with IT spending growth expectations only slightly above current global gross domestic product growth forecasts of 2.5% to 3%.

Gen AI continues to be the belle of the ball

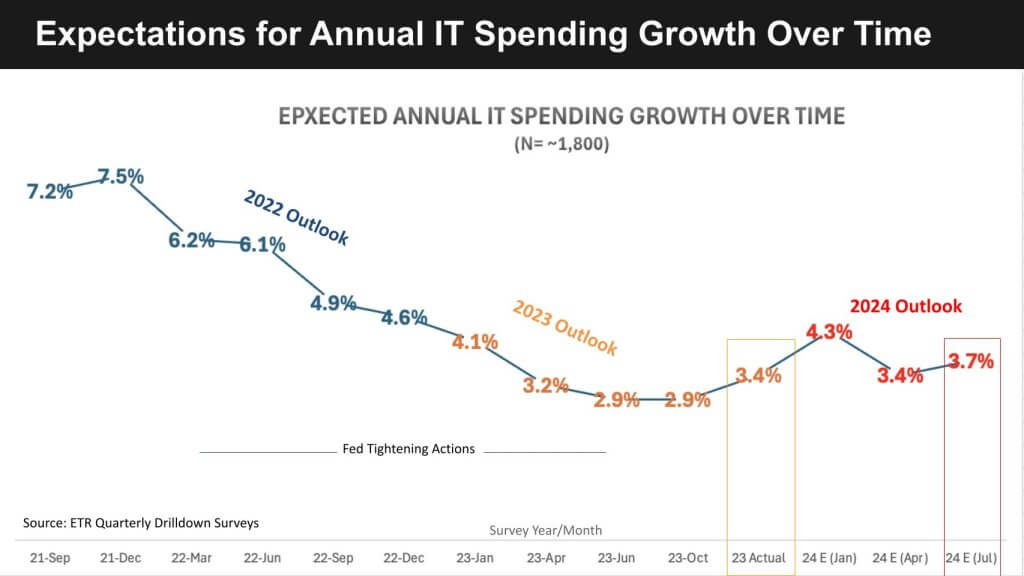

We’ve reported extensively that AI has been stealing budget from other sectors at more than 40% of customer accounts. And as this data shows, gen AI evaluations and adoption continue at a steady pace.

Across nearly 1,800 ITDMs, the percentage of organizations not evaluating gen AI has declined from 52% in April of 2023 to 16% today. And the percentage of customers that have selected at least one use case has doubled from around 40% to 80% over that same timeframe.

You may be surprised by that 16% figure, the share not evaluating gen AI. When we dig into that data, we find a cohort of customers taking a wait-and-see attitude given the fast pace of large language model innovation and concerns over privacy. This is especially acute in the health care sector.

Enterprise use cases remain ‘chatty’

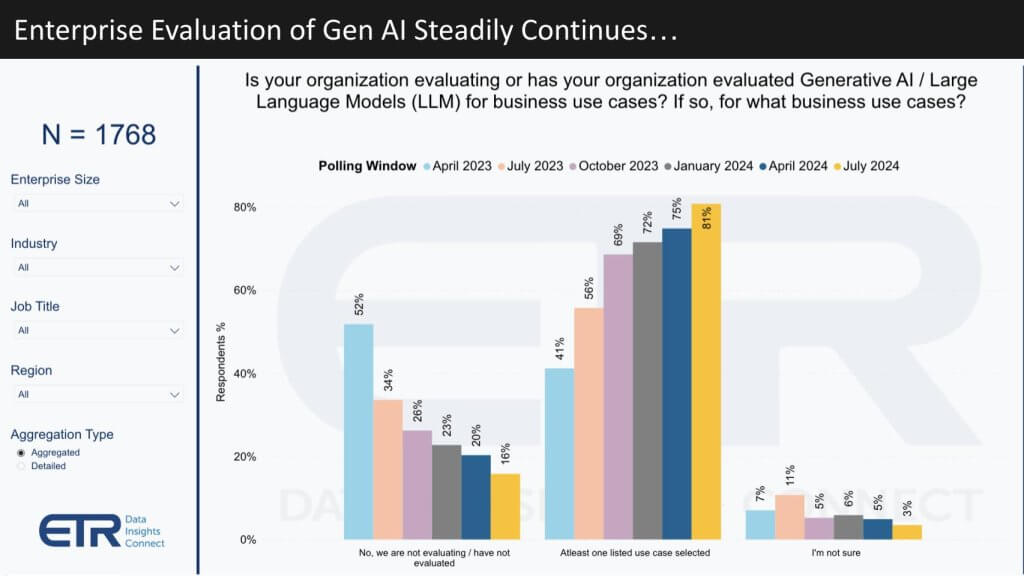

Despite the high interest in gen AI, when we dig deeper into the use cases that are going into production, we see they’re very much what you would expect from ChatGPT and other popular LLMs.

Of the 1,400-plus respondents who indicated they’ve evaluated at least one use case, 25% said they were not yet in production. When asked which use cases are in production, respondents cited code generation, customer support, text summarization and content writing as the top use cases. Of note, relative to previous quarters, these use cases are flat to down.

New to the list, however, are meeting summarization, search and help desk use cases.

The point is that though adoption continues, the use cases don’t appear to be game-changing in the sense that they are throwing off so much value that they have become self-funding. They’re nice but very much the kinds of use cases you would get from an off-the-shelf LLM.

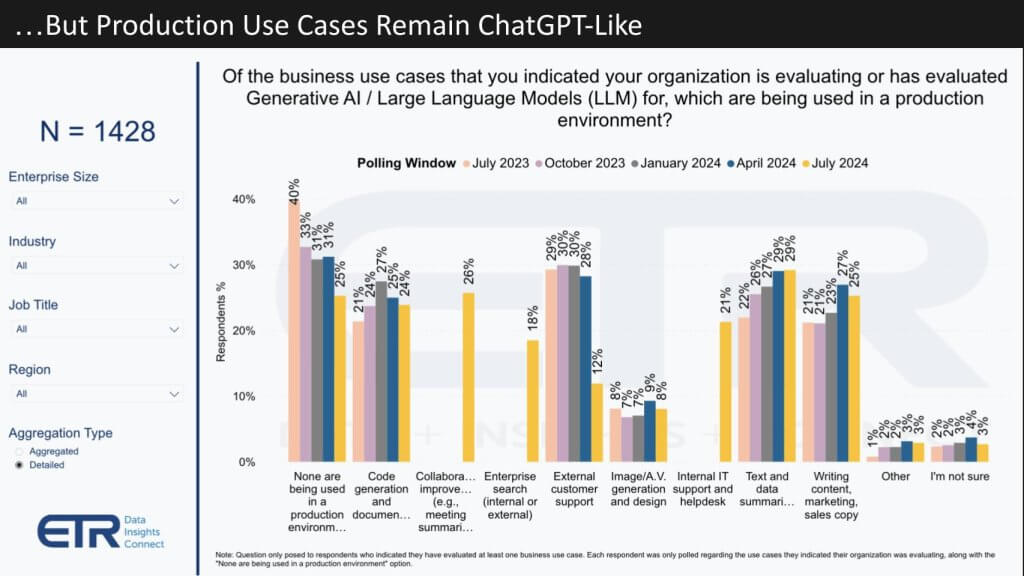

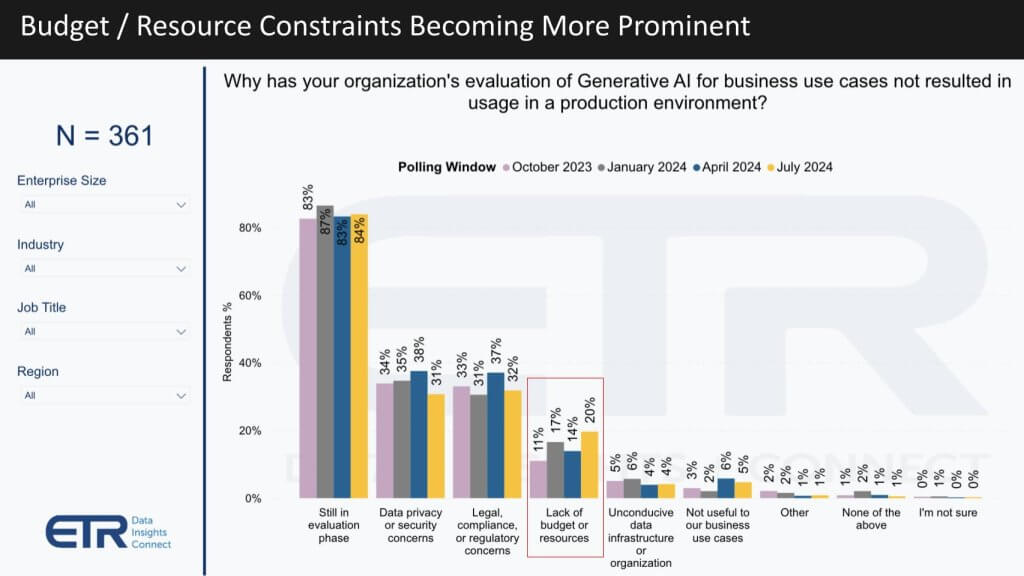

Budget is creeping up as a blocker to LLM adoption

To that point, the main barriers to bringing gen AI into production remain privacy and legal concerns. But as shown here, budget and resource constraints are now cited by 20% of the respondents that don’t have generative AI in production yet.

It’s also interesting to note that although concerns over data privacy and compliance remain the most prominent, they appear to have peaked in the data and are slightly down this quarter, suggesting organizations may be getting a handle on them.

But coming back to the budget constraints, this is another indicator that gen AI return on investment is not off the charts. The percentage of customers reporting production use cases for retrieval-augmented generation, or RAG, is in the single digits, another sign of possible resource constraints. At this point in time, AI ROI has not reached the point where it is so obviously self-funding that it fuels other sectors rather than detracting from them.

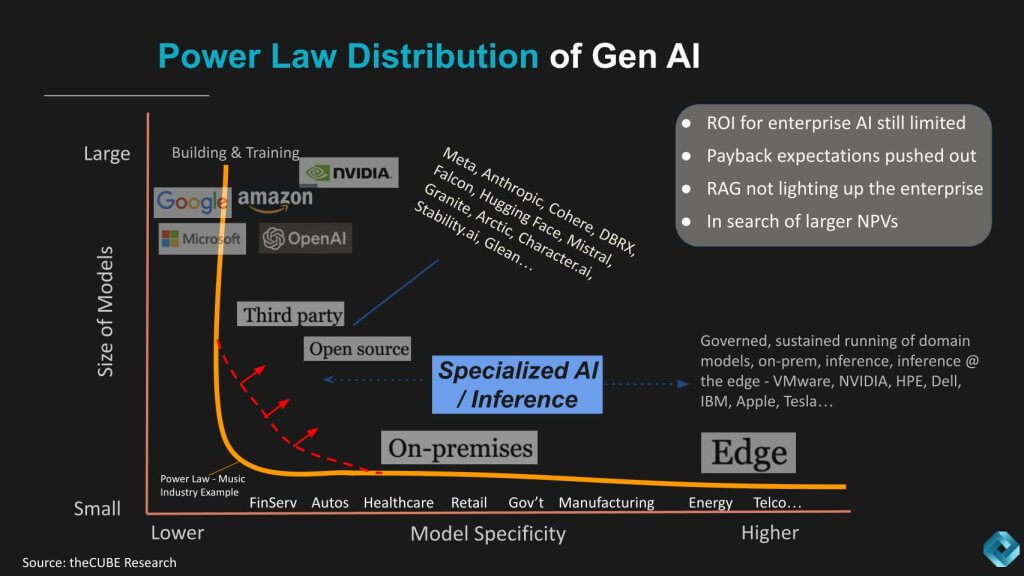

Enterprise ROI will come from domain-specific use cases

We’ve used this notion of the Gen AI Power Law from theCUBE Research many times, as shown again here:

Briefly, the point of this model is that although there’s lots of action today with very large, expensive-to-build language models, for most enterprises the real value will come from applying smaller language models, or SLMs, to their specific businesses to drive unique value within an industry. Examples would be novel retail experiences, real-time supply chain adjustments, hyper-automation in manufacturing, and dramatically compressed drug discovery.

But these types of high-value projects take time, resources and lots of trial and error. As such, the ROI for enterprises in production today remains limited. Moreover, we’re seeing a higher percentage of customers push ROI payback expectations out beyond 12 months, which is prudent. Customer conversations confirm that the idea of applying smaller language models to specific domains has merit. But successful projects with much larger net present values will take much more time, perhaps 18 to 24 months or more.

Dialing in on 2H 2024

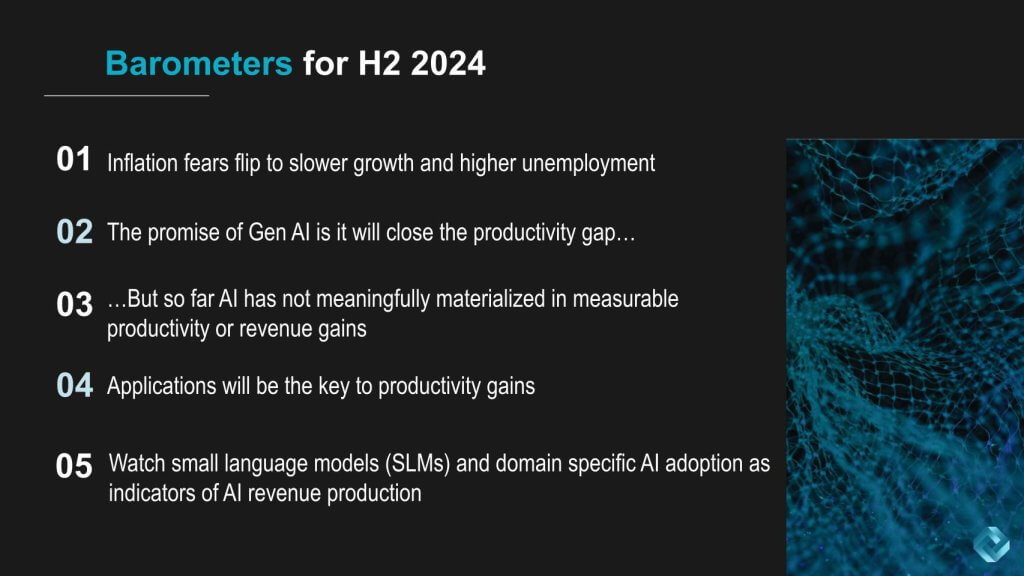

What are some barometers we can watch as indicators of progress for the second half of this year?

The narrative around inflation fears has flipped. Economic growth is slowing and unemployment is perhaps ticking up somewhat. Expectations for rate cuts in September are back on the table, which is certainly driving positive sentiment in stock markets. And that could be a positive for IT spending. But this is an election year filled with uncertainty, and that could bring dislocations in spending patterns.

The promise of gen AI is that it will close the productivity gap… But so far AI has not materialized in meaningful, measurable productivity or revenue gains.

Those productivity gains will most likely come from applications, specifically existing apps with embedded AI from the likes of Microsoft, Salesforce, Oracle, Workday and ServiceNow.

Watch small language models and domain-specific AI adoption. This will be where we’re likely to find new revenue production with novel experiences, advances in robotics, highly advanced analytics applied to discovery of new drugs or new sources of energy, supply chain and logistics breakthroughs, and better forecasting.

What are you seeing in terms of LLM adoption? How is it affecting other budgets and what are your expectations for payback size and timeframe?

Let us know.