Running an LLM (large language model) on your Windows PC just got significantly easier thanks to Microsoft’s Foundry Local AI tool. It’s completely free to use and offers a wide variety of AI models to choose from.

What is Foundry Local AI

Microsoft recently announced Foundry Local, which is mainly designed for developers. The free tool lets you run LLMs on your PC locally, no Internet needed. You’re not going to get the same results as you would with the popular cloud-based AI tools, such as ChatGPT, Gemini, or Copilot, but it’s still a fun way to experiment with AI models.

Because it’s new and technically only in public preview, it’s fairly basic, so don’t expect anything mind-blowing. Over time, Foundry Local will likely improve and gain more features.


Foundry Local Prerequisites

Before installing anything, make sure your PC can handle Foundry Local AI models. Ideally, Microsoft wants you to have a higher-end Copilot+ PC, but it’s not necessary.

You’ll need:

- A 64-bit version of Windows 10 or 11, Windows Server 2025, or macOS

- 3GB of hard drive space, though 15GB is recommended, especially if you use multiple models

- 8GB of RAM, though 16GB is recommended for best results

- Though not required, Microsoft recommends an NVIDIA GPU (2000 series or newer), a Qualcomm Snapdragon X Elite (8GB or more of memory), an AMD GPU (6000 series or newer), or Apple silicon
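If you’re on a Mac, a short shell script can compare your machine with the minimums above. The one below is a rough sketch, not a Microsoft tool. Its thresholds are simply the figures from this list, and `rate`, `ram_gb`, and `disk_gb` are hypothetical helper names. It checks free space on the volume that holds your home folder, which may not be where Foundry Local stores models. On Windows, Settings > System > About shows your installed RAM and whether you have a 64-bit system.

```bash
#!/usr/bin/env bash
# Rough pre-flight check against the minimums listed above (macOS).
# Thresholds are this article's figures, not values read from Foundry Local.
MIN_RAM_GB=8;  REC_RAM_GB=16
MIN_DISK_GB=3; REC_DISK_GB=15

# rate VALUE MIN REC: classify a whole-GB value against the two thresholds.
rate() {
  if [ "$1" -lt "$2" ]; then
    echo "below minimum"
  elif [ "$1" -lt "$3" ]; then
    echo "meets minimum"
  else
    echo "meets recommendation"
  fi
}

# Total RAM in whole GB, rounded down. macOS reports bytes via sysctl;
# the /proc/meminfo branch (values in kB) is a fallback for other Unix-like systems.
ram_gb() {
  if [ "$(uname -s)" = "Darwin" ]; then
    echo $(( $(sysctl -n hw.memsize) / 1024 / 1024 / 1024 ))
  else
    awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo
  fi
}

# Free space in whole GB on the volume holding your home folder.
disk_gb() {
  df -Pk "${HOME:-/}" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

case "$(uname -m)" in
  x86_64|arm64|aarch64) echo "Architecture: $(uname -m) (64-bit)" ;;
  *) echo "Architecture: $(uname -m) (a 64-bit OS is required)" ;;
esac
ram="$(ram_gb)"; disk="$(disk_gb)"
echo "RAM:  ${ram} GB ($(rate "$ram" "$MIN_RAM_GB" "$REC_RAM_GB"))"
echo "Disk: ${disk} GB free ($(rate "$disk" "$MIN_DISK_GB" "$REC_DISK_GB"))"
```

Treat the result as a quick sanity check. The GPU recommendation isn’t covered, and rounding down means a machine sitting right at a threshold may be reported one step lower.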

You’ll only need Internet access during installation and when downloading a new AI model. After that, feel free to disconnect. Also, make sure you have admin privileges to install Foundry Local.

How to Install Foundry Local AI

Unlike many apps, you don’t need to go to a website or the Microsoft Store to install Foundry Local. Instead, you’ll use winget, which lets you install software from the command line. Don’t worry, you don’t need to be a developer or a command-line expert to do any of this.

If you prefer a more traditional installation, download it from GitHub.

For Windows, open a terminal window. Press Win + X and click Terminal (admin). Enter the following command:

winget install Microsoft.FoundryLocal

Agree to the terms and wait for the installation to complete. It may take a few minutes.

For macOS, open a terminal window and enter the following:

brew tap microsoft/foundrylocal

brew install foundrylocal
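Before moving on, it’s worth confirming that the `foundry` command is actually available. The sketch below wraps the two Homebrew commands above in a function that skips the install if the formula is already present, then checks that the CLI is on your PATH. The function names `install_foundry_macos` and `check_foundry` are made up for this example, and the script assumes Homebrew itself is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the Homebrew steps above (macOS).

install_foundry_macos() {
  if ! command -v brew >/dev/null 2>&1; then
    echo "Homebrew not found; install it first from https://brew.sh" >&2
    return 1
  fi
  brew tap microsoft/foundrylocal || return 1
  # `brew list <formula>` fails when the formula isn't installed yet.
  brew list foundrylocal >/dev/null 2>&1 || brew install foundrylocal
}

# Succeeds (exit 0) if a `foundry` executable is on PATH.
check_foundry() {
  local loc
  if loc="$(command -v foundry)"; then
    echo "foundry found at $loc"
  else
    echo "foundry not found; open a new terminal or re-run the install" >&2
    return 1
  fi
}
```

After pasting the functions into a terminal session, run `install_foundry_macos && check_foundry`.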


Installing Your First AI Model

For your first model, Microsoft recommends a lightweight one: Phi-3.5-mini. You don’t have to start with it, but if you’re short on space, it’s a good way to try things out without sacrificing precious storage.

Open a terminal window and enter the following command:

foundry model run phi-3.5-mini

The download may take several minutes or more, depending on the model you choose. For me, this particular model took only two minutes. The best part is that Foundry Local AI installs the version of the model best suited to your hardware, so you’re not left figuring out exactly what to install.

If you want to try out other models, check out the full list by using the following command:

foundry model list

Each model in the list shows how much storage it needs and its purpose. For now, every model is just for chat completion.
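If the list gets long, a small filter makes it easier to spot a particular family of models. The sketch below pipes `foundry model list` through a case-insensitive `grep`. `find_models` is a hypothetical helper name. Because the sketch makes no assumption about the list’s column layout, it matches whole lines rather than parsing columns.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show only the lines of `foundry model list` that
# contain the given text, ignoring case (e.g. "phi").
find_models() {
  if [ -z "$1" ]; then
    echo "usage: find_models <text>" >&2
    return 2
  fi
  # -F treats the text literally, so dots in names like "3.5" aren't regex wildcards.
  foundry model list | grep -iF -- "$1"
}
```

For example, `find_models phi` prints only the catalog lines that mention Phi.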

Interacting with Local AI Models

There’s no handy graphical interface for interacting with the AI models you install. Instead, you’ll do everything from the command line, but it’s not much different from chatting with any other AI chatbot. Simply enter your text when you see the prompt “Interactive mode, please enter your text.”

Each AI model has its own limitations. For instance, I asked Phi-3.5-mini “what is foundry local.” I did this specifically to highlight that this model’s knowledge only goes up to early 2023. The response mentions this limitation but attempts to answer the question as best it can. Spoiler alert: it didn’t answer the question correctly, but that’s what I expected.

For best results, stick with simple questions that don’t require much research or knowledge of current events and breaking news.

If you want to switch between models you’ve already installed, use the following command:

foundry model run modelname

Just replace “modelname” with your preferred model.

If you’re in interactive mode (chatting mode), you’ll need to close the terminal window and start a new session before switching. Currently, there isn’t an exit command, which is definitely a feature that’s needed.
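Because a mistyped name only becomes obvious after Foundry Local starts working, you could wrap the switch command in a small check. In the sketch below, `run_model` is a hypothetical helper. It refuses an empty name and confirms that the text appears somewhere in `foundry model list` before calling `foundry model run`. It is only a plain text match, so a partial name like `phi` would pass the check, and Foundry Local itself still decides whether the name is valid.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around `foundry model run`: catches a missing or
# mistyped model name before Foundry Local starts a download or chat session.
run_model() {
  local name="$1"
  if [ -z "$name" ]; then
    echo "usage: run_model <model-name>" >&2
    return 2
  fi
  # Plain, case-insensitive text match against the catalog output.
  if ! foundry model list | grep -qiF -- "$name"; then
    echo "No model matching '$name' in 'foundry model list'" >&2
    return 1
  fi
  foundry model run "$name"
}
```

Usage is the same as the original command, for example `run_model phi-3.5-mini`.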

Foundry Local AI Commands You Need to Know

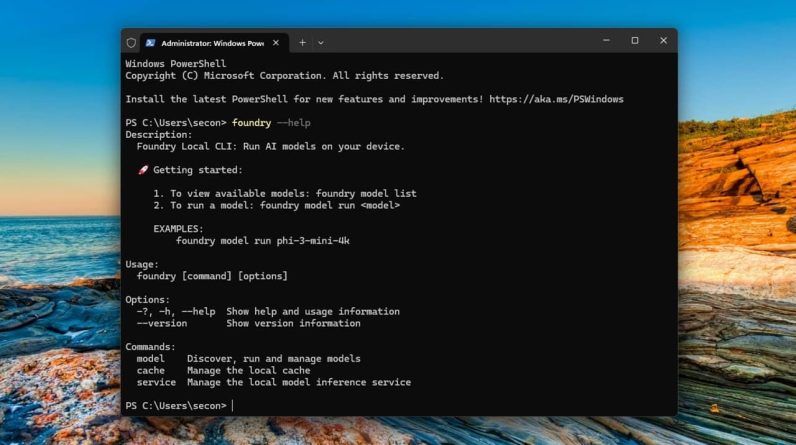

Microsoft publishes a full command reference, but you really only need to know a handful of commands to use Foundry Local. The help commands below show every available command, both overall and within each of the three main categories: model, service, and cache.

See all general Foundry Local commands with:

foundry --help

For model commands, use:

foundry model --help

For service commands, use:

foundry service --help

For cache commands, use:

foundry cache --help

As long as you remember these, you don’t really need the full list. But keep in mind that Microsoft will likely add new commands as it builds out the service. For now, consider it more of a beta test than a full-fledged tool.
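Since the command set is still changing, it can help to keep a snapshot of the current help text. The sketch below runs the four help commands listed above and appends their output to one text file. `save_foundry_help` and the default file name `foundry-help.txt` are hypothetical choices for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: save the output of the four help commands above to a
# single text file so you can read it later without re-running each one.
save_foundry_help() {
  local out="${1:-foundry-help.txt}"
  : > "$out" || return 1          # create or empty the file
  local topic
  for topic in "" model service cache; do
    # An empty topic produces the top-level `foundry --help`.
    printf '===== foundry %s--help =====\n' "${topic:+$topic }" >> "$out"
    if [ -n "$topic" ]; then
      foundry "$topic" --help >> "$out" 2>&1
    else
      foundry --help >> "$out" 2>&1
    fi
  done
  echo "Saved to $out"
}
```

Running `save_foundry_help` again after an update overwrites the file, so you can compare it with an older copy to see what changed.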

If you need more than Foundry Local can provide, stick with ChatGPT or one of its cloud-based alternatives.