Several months ago, OpenAI stunned the world with an incredible text-to-video AI tool called Sora. The whole concept is very simple: tell the AI what video to make, and Sora will generate it for you. OpenAI still hasn’t released it to the general public because it’s currently in development. I expected to see Sora released by early October, but that hasn’t happened. OpenAI’s DevDay came and went, and Sora is still unavailable to users.

Meanwhile, Meta announced its own Sora alternative. It’s called Movie Gen, and it’s the third iteration of Meta’s generative AI products for image and video editing. Movie Gen can generate video and audio from a single text prompt, just like Sora. It also lets you edit existing videos with text prompts.

Movie Gen isn’t available to users yet either, as Meta continues to develop it. But one day, it might be added to the suite of Meta AI features available in social apps, forever changing the way we use Instagram, WhatsApp, and Facebook.

Meta explained in a blog post that it optimized its Movie Gen model for both text-to-image and text-to-video modes.

It’s a 30-billion parameter transformer model that can create 16-second videos at 16 frames per second. “These models can reason about object motion, subject-object interactions, and camera motion, and they can learn plausible motions for a wide variety of concepts—making them state-of-the-art models in their category,” Meta said.

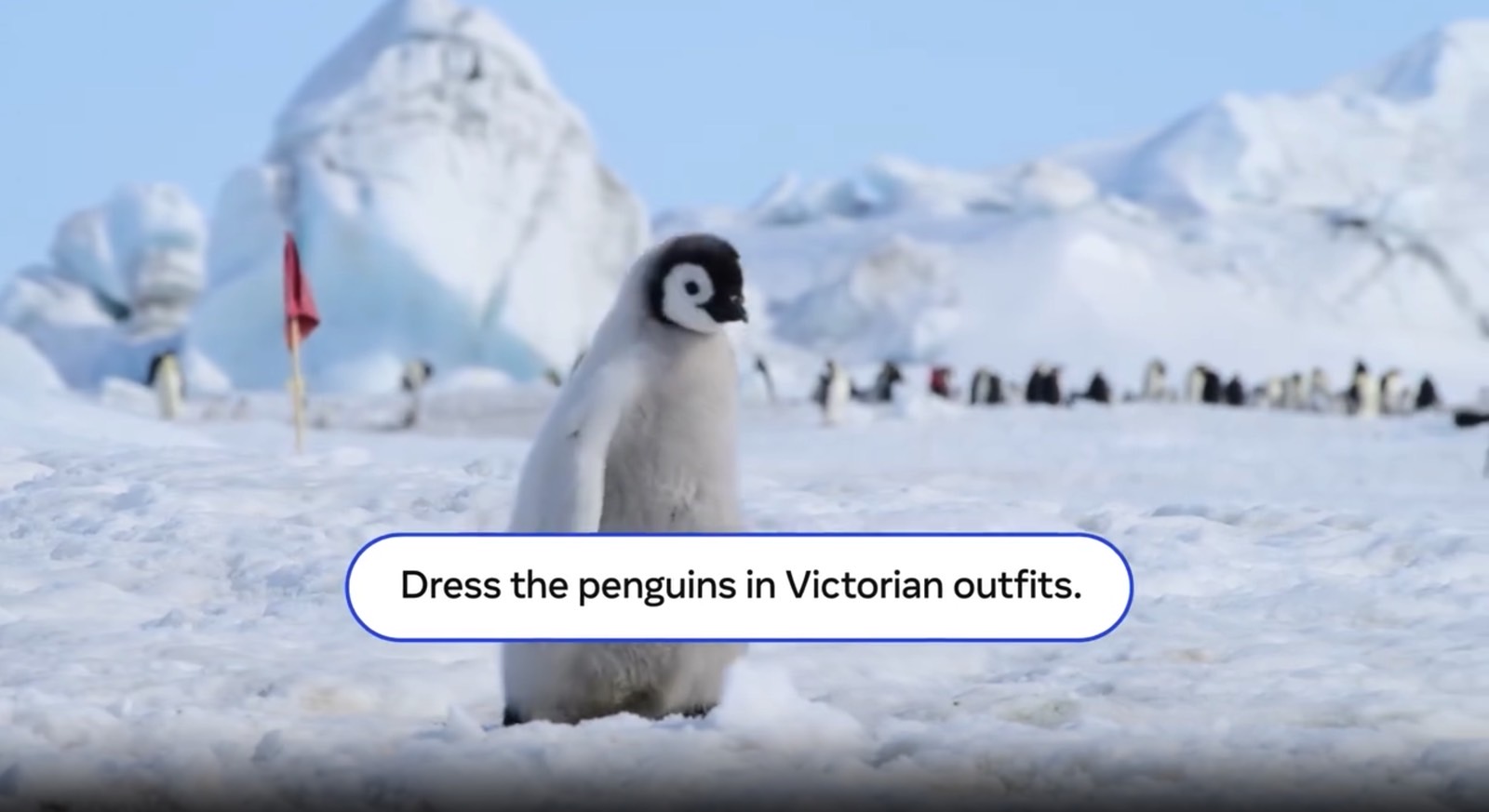

A Movie Gen prompt to create an AI video. Image source: Meta

Meta also demonstrated Movie Gen’s ability to create personalized videos. You can simply upload a person’s image and combine it with a text prompt to create an AI video “that contains the reference person and rich visual details informed by the text prompt.” Meta said its “model achieves state-of-the-art results when it comes to creating personalized videos that preserve human identity and motion.”

This is where I’ll tell you that such features could be abused to create fake videos that might go viral, spreading misinformation. It’s probably one reason we don’t have Movie Gen out in the wild at this time, though Meta doesn’t mention abuse in the blog post.

Movie Gen can create videos using the photo of a real person and a text prompt. Image source: Meta

Moving on, Movie Gen can also be used to edit genuine videos. You’d submit your own clip and then instruct the AI to perform edits. Movie Gen features “advanced image editing, performing localized edits like adding, removing, or replacing elements, and global changes such as background or style modifications.” The model will preserve the original content and target only the pixels it needs to change.

Again, this opens the door for abuse in the real world. Some people will use it to create fun clips for entertainment, while others might want to distort the truth with AI edits to real videos.

Movie Gen uses a real video and a text prompt to edit the clip. Image source: Meta

The last Movie Gen feature that Meta detailed is audio generation. Meta trained a 13-billion parameter audio generation model that takes a video of up to 45 seconds, along with a text prompt, and creates ambient sound, sound effects, and instrumental background music. The generated audio is synced to the video.

Meta also published a research paper on Movie Gen. According to Meta, its models outperformed competitors, including OpenAI’s Sora, in human evaluations.

As for when Movie Gen will be available, Meta says it’ll work with filmmakers and creators to integrate their feedback. Eventually, Movie Gen will be available in Meta’s social apps. The screenshots above come from Meta’s AI-generated and AI-edited clips. You can check out all the Movie Gen examples in Meta’s blog post.