We’re approaching a point where the signal-to-noise ratio is getting close to one, meaning that as the pace of misinformation approaches that of factual information, it’s becoming nearly impossible to tell what’s real. This guide teaches journalists how to try to identify AI-generated content under deadline pressure, offering seven advanced detection categories that every reporter needs to master.

As someone who helps newsrooms fight misinformation, here’s what keeps me up at night: Traditional fact-checking takes hours or days. AI misinformation generation takes minutes.

Video misinformation predates modern AI technology by decades. Even with the basic technical limitations of early recording equipment, footage could create devastating false impressions. In 2003, nanny Claudia Muro spent 29 months in jail because a low-frame-rate security camera made gentle motions look violent, and nobody thought to verify the footage. In January 2025, UK teacher Cheryl Bennett was driven into hiding after a deepfake video falsely showed her making racist remarks.

AI-generated image purporting to show Pope Francis wearing a Balenciaga puffer jacket. Image: Midjourney, Pablo Xavier

This viral image of Pope Francis in a white Balenciaga puffer coat fooled millions on social media before being revealed as AI-generated from a Midjourney text-to-image prompt. Key detection clues included the crucifix hanging at his chest held inexplicably aloft, with only a white puffer jacket where the other half of the chain should be. The image’s creator, Pablo Xavier, told BuzzFeed News: “I just thought it was funny to see the Pope in a funny jacket.”

Sometimes the most effective fakes require no AI at all. In May 2019, a video of House Speaker Nancy Pelosi was slowed to 75% speed and pitch-altered to make her appear intoxicated. In November 2018, the White House shared a sped-up version of CNN correspondent Jim Acosta’s interaction with a White House intern, making his arm movement appear more aggressive than in reality.

I recently created an entire fake political scandal (complete with news anchors, outraged citizens, protest footage, and the fictional mayor himself) in just 28 minutes during my lunch break. Total cost? Eight dollars. Twenty-eight minutes. One completely fabricated political crisis that could fool busy editors under deadline pressure.


Not long ago, I watched a seasoned fact-checker confidently declare an AI-generated image “authentic” because it showed a perfect five-fingered hand instead of a six-fingered one. That shortcut is now almost gone.

This is the brutal reality of AI detection: the methods that made us feel secure are evaporating before our eyes. In the early development of AI image generators, poorly drawn hands, with extra fingers or fused digits, were common and often used to spot AI-generated images. Viral fakes, such as the “Trump arrest” images from 2023, were partly exposed by these obvious hand errors. However, by 2025, major AI models like Midjourney and DALL-E have significantly improved at rendering anatomically correct hands. As a result, hands are no longer a reliable tell, and anyone trying to identify AI-generated images must look for other, subtler signs.

The text rendering revolution happened even faster. Where AI protest signs once displayed garbled messages like “STTPO THE MADNESSS” and “FREEE PALESTIME,” some of the current models produce flawless typography. OpenAI specifically trained DALL-E 3 on text accuracy, while Midjourney V6 added “accurate text” as a marketable feature. What was once a reliable detection method now rarely works.

The misaligned ears, unnaturally asymmetrical eyes, and painted-on teeth that once distinguished AI faces are becoming rare. Portrait images generated in January 2023 routinely showed detectable failures. The same prompts today produce believable faces.

This represents a fundamental danger for newsrooms. A journalist trained on 2023 detection methods might develop false confidence, declaring obvious AI content as authentic simply because it passes outdated tests. This misplaced certainty is more dangerous than honest uncertainty.

Introducing: Image Whisperer

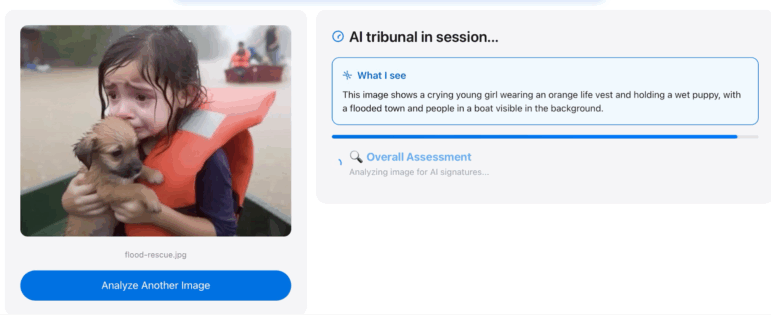

Analysis of an AI-generated image purporting to show a girl being rescued after flooding in the US. Image: Henk van Ess

I began wondering if I could build a verification assistant for AI content as a bonus for this article. I started to email experts. Scientists took me deep into physics territory I never expected: Fourier transforms, quantum mechanics of neural networks, mathematical signatures invisible to the human eye. One physicist explained how AI artifacts aren’t just visual glitches; they’re frequency domain fingerprints.

But then came the reality check: “Don’t build a tool yourself,” one expert warned. “You’ll need massive computing power and Ph.D.-level teams. Without that infrastructure, you’ll fail miserably.”

That’s when it hit me. Why not fight AI with AI, but differently? Instead of recreating billion-dollar detection systems, I’d harness existing AI infrastructure to do the heavy lifting.

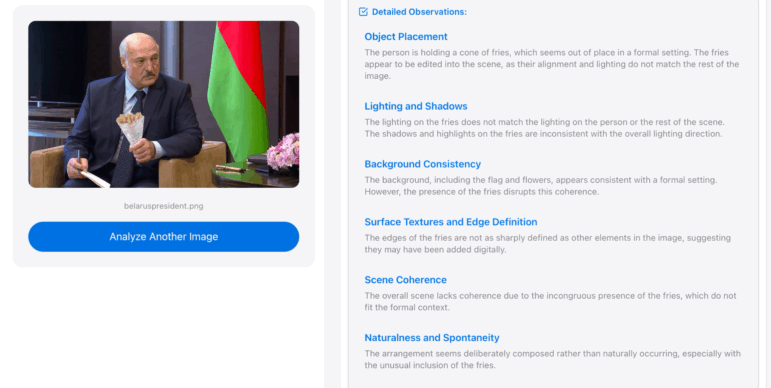

Analysis of an AI-generated image purporting to show the Belarusian president holding a cone of fries. Image: Henk van Ess

Image Whisperer (initially named Detectai.live) was born from this insight. The tool runs parallel large language model analysis alongside Google Vision processing, applying the physics principles these experts taught me while leveraging the computational power already available. Most importantly, unlike most AI tools, it tells you when it doesn’t know something instead of guessing.

It’s not trying to be the best system out there; it’s trying to be the most honest.

Seven Categories of AI Detection

The arms race between AI creators and detectors continues, with creators currently benefiting from the advantage of speed. Identifying what is or isn’t a deepfake is becoming a game of cat and mouse with developers improving the technology. Successfully identifying deepfakes requires combining multiple detection methods, maintaining constant vigilance, and accepting that perfect detection may be impossible. For journalists looking for precise answers, the goal has shifted from definitive identification to a probability assessment and informed editorial judgment.

But journalism has always adapted to changing technology. We learned to verify sources when anyone had the tools to create a website. We developed social media verification protocols when everyone became a potential reporter. Now we must develop standards for an era when anyone can create convincing audiovisual evidence.

Category 1: Anatomical and Object Failures: When Perfect Becomes the Tell

30-Second Red Flag Check (Breaking News): When time is critical and you need an instant assessment of suspicious perfection, focus on the gut feeling that something looks “too good to be true.” Look for magazine-quality aesthetics in contexts where that level of grooming would be impossible or inappropriate. A protest leader with flawless makeup, a disaster victim with perfect hair, or a candid political moment where everyone looks professionally styled should trigger immediate suspicion.

- Perfection gut check: Does this person look too polished/perfect for the situation?

- Context mismatch scan: Magazine-level beauty in a crisis/conflict scene?

- Skin reality check: Airbrushed smoothness where natural texture should exist?

- Overall grooming assessment: Does appearance match the scenario?

Five-Minute Technical Verification (Standard Stories): This deeper examination focuses on the technical details that betray artificial generation. Modern AI creates anatomically correct images, but they often exhibit an uncanny perfection not found in real photography. Real faces have subtle asymmetries, natural wear patterns, and environmental effects that AI struggles to authentically replicate.

- Zoom to 100% on faces: Look for natural skin texture, pores, and minor asymmetries.

- Clothing physics assessment: Natural wrinkles, fabric textures, wear patterns present?

- Hair strand analysis: Individual strands visible versus painted/rendered appearance?

- Jewelry/accessory realism: Three-dimensional appearance versus computer-graphics flatness.

- Teeth examination: Are there natural imperfections versus uniform perfection?

- Overall perfection audit: Does the grooming level match the claimed context and setting?

Deep Investigation (High-Stakes Reporting): For stories where accuracy is paramount, this comprehensive analysis treats the image as evidence requiring forensic scrutiny. The goal is building a probability assessment based on multiple verification points, and understanding that while definitive proof may be impossible, informed judgment is achievable.

- Comparative analysis: Find other photos of the same person to compare levels of natural versus artificial perfection.

- Technical magnification: Use professional tools to examine skin texture at the pixel level for mathematical patterns.

- Context verification: Research and compare with other images to determine whether the person typically appears this polished in similar settings.

- Professional consultation: Contact digital forensics experts, like Hany Farid, for advanced analysis.

- Multiple angle verification: Seek additional photos/video from the same event to check for consistency.

- Historical comparison: Compare to verified photos of the person from a similar time period and context.

Category 2: Geometric Physics Violations: When AI Ignores Natural Laws

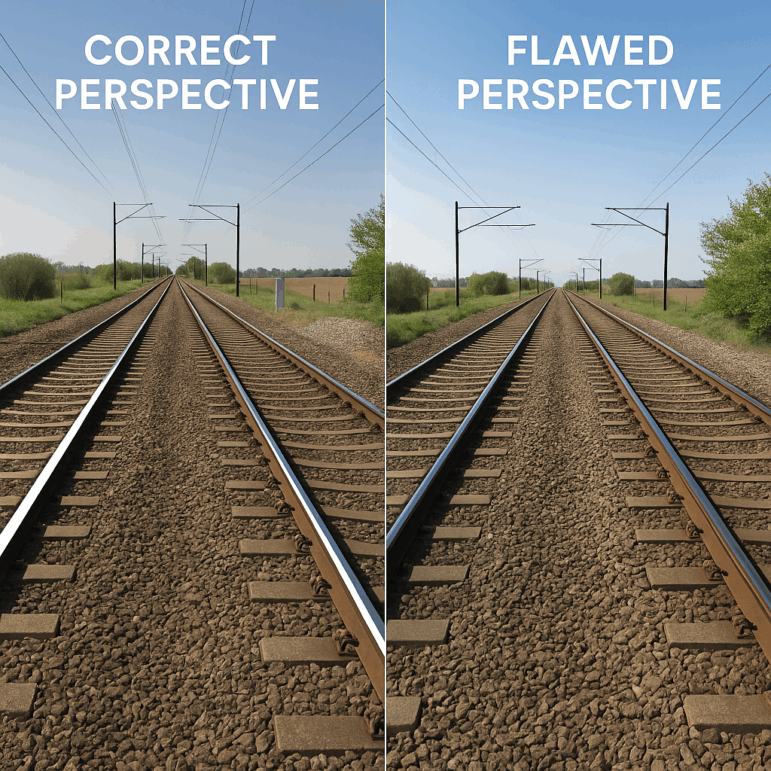

AI-generated image of train tracks receding into the distance next to correct perspective image from real life. Images: Henk van Ess

The Narrative: AI assembles images like a collage artist, not a photographer. It understands visual elements but not the geometric and physical rules that govern how light, perspective, and shadows actually work in the real world. These fundamental physics failures are harder for AI to fix because they require an understanding of 3D space and light behavior.

Real-World Physics Problems in AI Images: We are still early in the age of generative AI, and today’s AI-generated images often fail to produce perspectively correct shadows and reflections. A typical example generated using OpenAI’s DALL-E 2 shows shadows that are inconsistent, reflections that are impossibly mismatched or missing, and shadows in the reflection oriented in exactly the wrong direction.

Vanishing Point Analysis: Real buildings follow the laws of perspective, with parallel lines converging toward a single point on the horizon. AI often creates buildings where rooflines point left while window lines point right, a physical impossibility that reveals algorithmic assembly rather than photographic capture. Vanishing points are fundamental to capturing the essence of perspective in real images, and generated images often exhibit inconsistencies where lines do not meet at the correct vanishing point.

Shadow Consistency Check: Where there is light, there are shadows. The relationship between an object, its shadow, and the illuminating light source(s) is geometrically simple, and yet it’s deceptively difficult to get just right in a manipulated or synthesized image. In single-light source scenes (like sunlight), all shadows must point away from that source. AI frequently shows people casting shadows in multiple directions despite one sun, violating the basic laws of physics.

Research Validation: Academic research has confirmed these geometric flaws. Studies using Grad-CAM analysis on outdoor images reveal varied shadow directions of vehicles and structural distortions near vanishing points, while indoor scenes show object-shadow mismatches and misaligned lines in room geometry.

This type of subtle detection is not for the faint-hearted. Get used to the idea of staring at lines first.

30-Second Red Flag Check:

- Find any photo of straight train tracks (Google “railroad tracks perspective”).

- Open MS Paint or any basic image editor.

- Use the line tool to trace both rails, extending them toward the horizon.

- Check that they converge to a single point; this is what SHOULD happen.

Now you have the visual template for what a correct perspective looks like.

Five-Minute Technical Verification (Standard Stories):

Perspective Test:

- Choose ONE building in the image.

- Use any image editor to draw extended rooflines and window rows.

- Check to see if lines from the same building converge at a single point.

- Multiple vanishing points for one structure = AI assembly error.
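
The perspective test above can also be approximated numerically once you have traced a few lines by hand. What follows is a minimal sketch under stated assumptions, not a forensic tool: the function names, the sample coordinates, and the idea of using the spread between pairwise intersections are all illustrative choices of mine. It assumes you supply at least three traced lines that are parallel in the real world (for example two rooflines and a window row on the same wall) as pixel coordinates.

```python
from itertools import combinations
from math import hypot


def line_intersection(seg_a, seg_b):
    """Return where the infinite lines through two segments cross, or None if parallel."""
    (x1, y1), (x2, y2) = seg_a
    (x3, y3), (x4, y4) = seg_b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-9:
        return None
    det_a = x1 * y2 - y1 * x2
    det_b = x3 * y4 - y3 * x4
    px = (det_a * (x3 - x4) - (x1 - x2) * det_b) / denom
    py = (det_a * (y3 - y4) - (y1 - y2) * det_b) / denom
    return (px, py)


def vanishing_point_spread(segments):
    """Largest pixel distance between any two pairwise intersections of the traced lines.

    Returns 0.0 when fewer than two intersections exist (e.g. all lines parallel).
    """
    points = []
    for seg_a, seg_b in combinations(segments, 2):
        point = line_intersection(seg_a, seg_b)
        if point is not None:
            points.append(point)
    if len(points) < 2:
        return 0.0
    return max(hypot(p[0] - q[0], p[1] - q[1]) for p, q in combinations(points, 2))


# Hypothetical traced lines, in pixel coordinates: ((x1, y1), (x2, y2)).
consistent = [((100, 800), (300, 500)), ((900, 800), (700, 500)), ((500, 800), (500, 500))]
suspicious = [((100, 800), (300, 500)), ((900, 800), (700, 500)), ((100, 600), (300, 600))]

print(vanishing_point_spread(consistent))  # near zero: the lines share one vanishing point
print(vanishing_point_spread(suspicious))  # hundreds of pixels: no common point
```

How large a spread counts as suspicious is a judgment call: hand-traced lines are never pixel-perfect, and lens distortion bends straight edges, so treat a large spread as a reason to look closer rather than as proof.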

Shadow Analysis:

- Identify the primary light source (brightest highlights).

- Draw lines from the light source through object tops to shadow ends.

- Verify all shadows point in the same direction.

- Conflicting shadow directions = physics violation.
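
For the shadow check, a rough numeric version is sketched below. It assumes you have marked, by hand, the base of each object and the tip of its shadow in pixel coordinates; the function name and the sample points are hypothetical. It measures how far each shadow direction deviates from the average direction. Keep in mind that perspective and wide-angle lenses can make genuinely parallel shadows fan out in a photo, so a moderate spread is not evidence on its own.

```python
from math import atan2, cos, degrees, pi, sin


def shadow_angle_spread(shadows):
    """Largest deviation, in degrees, of any shadow direction from the mean direction.

    Each shadow is ((base_x, base_y), (tip_x, tip_y)) in pixel coordinates.
    """
    angles = [
        atan2(tip_y - base_y, tip_x - base_x)
        for (base_x, base_y), (tip_x, tip_y) in shadows
    ]
    # Circular mean, so that angles near +180 and -180 degrees average correctly.
    mean = atan2(sum(sin(a) for a in angles), sum(cos(a) for a in angles))

    def angular_gap(a, b):
        gap = abs(a - b) % (2 * pi)
        return min(gap, 2 * pi - gap)

    return degrees(max(angular_gap(a, mean) for a in angles))


# Hypothetical hand-marked shadows.
same_sun = [((0, 0), (10, 5)), ((20, 3), (30, 8)), ((5, 5), (25, 15))]
two_suns = [((0, 0), (10, 0)), ((0, 0), (0, 10))]

print(shadow_angle_spread(same_sun))  # close to 0 degrees
print(shadow_angle_spread(two_suns))  # about 45 degrees from the mean direction
```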

Deep Investigation (High-Stakes Reporting):

Reflection Verification: When objects are reflected on a planar surface, lines connecting a point on the object to the corresponding point in the reflection should converge to a single vanishing point.

- Find reflective surfaces in the image (water, glass, mirrors).

- Draw lines connecting objects to their reflections.

- Check to see if lines meet the reflective surface at right angles.

- Impossible reflection positions = geometric failure.

Category 3: Technical Fingerprints & Pixel Analysis: The Mathematical DNA

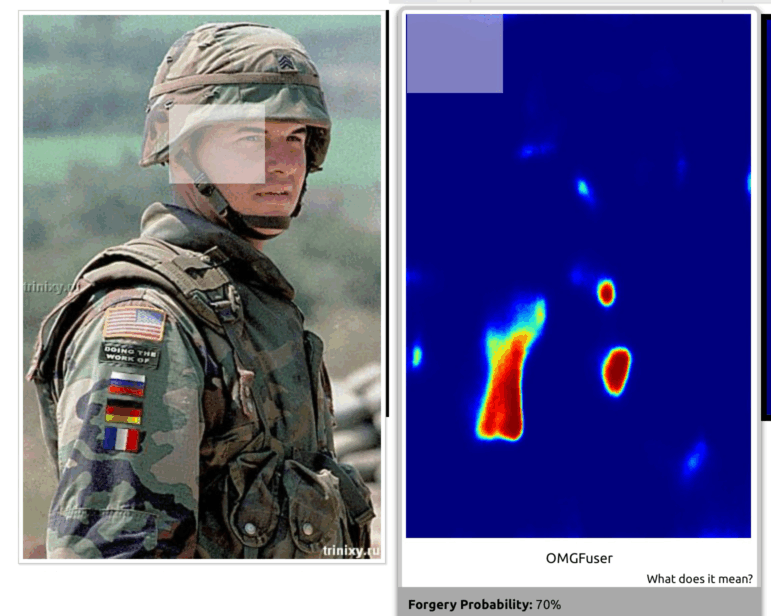

A viral, digitally altered photo of a US soldier from 2004. The “DOING THE WORK OF” patch plus the Russian, German, and French flags have been added to the image. The tool shows you the possible area of alteration and how likely it is altered. Images: Henk van Ess

The Narrative: When AI creates an image, it leaves behind hidden clues in the file: mathematical signatures that act like invisible fingerprints and that special tools can detect. These clues are found in the way pixels are arranged and how the file is compressed. Think of it like DNA evidence that proves something was made by AI rather than captured by a real camera.

Noise Pattern Detection: Real cameras capture images with natural, messy imperfections, like tiny random specks from the camera sensor. AI-generated images have unnaturally perfect patterns instead. When experts analyze these patterns with special software, they see distinctive star-like shapes that would never appear in a real photo. It’s like the difference between truly random static on an old TV and a computer trying to fake that randomness: the fake version has a hidden order to it that gives it away, if you have the proper tools.

Copy-Paste Detection: When AI or humans duplicate image regions, they create unusual pixel correlations. Different areas become suspiciously similar beyond natural spatial redundancy, creating detectable patterns or mathematical signatures.
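
To make the copy-paste idea concrete, here is a toy sketch that looks for exactly repeated blocks in a small grayscale grid. It is an illustration under strong assumptions, not how any tool named in this guide works: the function name and block size are mine, the image is a made-up 12x12 grid, and exact matching only catches untouched clones. Real photos are recompressed, so practical detectors compare blocks approximately.

```python
from collections import defaultdict


def find_duplicate_blocks(pixels, block=4):
    """Group the top-left (x, y) positions of block-by-block squares with identical pixels."""
    height, width = len(pixels), len(pixels[0])
    positions = defaultdict(list)
    for y in range(height - block + 1):
        for x in range(width - block + 1):
            key = tuple(tuple(pixels[y + dy][x:x + block]) for dy in range(block))
            distinct_values = {value for row in key for value in row}
            if len(distinct_values) > 1:  # skip flat areas such as clear sky
                positions[key].append((x, y))
    return [spots for spots in positions.values() if len(spots) > 1]


# Toy 12x12 grayscale grid in which every pixel starts out unique.
image = [[y * 16 + x for x in range(12)] for y in range(12)]
untouched = [row[:] for row in image]

# Simulate a clone-stamp edit: copy the top-left 4x4 patch to the bottom-right corner.
for dy in range(4):
    for dx in range(4):
        image[8 + dy][8 + dx] = image[dy][dx]

print(find_duplicate_blocks(untouched))  # []
print(find_duplicate_blocks(image))      # [[(0, 0), (8, 8)]]
```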

Compression Artifact Analysis: AI-generated content often shows unnatural compression patterns that differ from camera-originated raw files, revealing algorithmic rather than optical origins.

Professional Detection Tools: TrueMedia.org’s technology has the ability to analyze suspicious media and identify deepfakes across audio, images, and videos. Examples of recent deepfakes flagged by TrueMedia.org include an alleged Donald Trump arrest photo and an alleged photo of President Biden with top military personnel.

30-Second Red Flag Check:

Before analyzing suspect images, practice on something foolproof:

- Upload your photo to Image Verification Assistant.

- The tool will return a forgery probability score.

- Research the image further when the probability of forgery is 70% or higher.

Five-Minute Technical Verification (Standard Stories):

- Visual Texture Check: Zoom in to 100% on areas like skin or sky. Look closely at the texture: does it have the random, irregular quality of real life, or does it look too smooth and mathematically perfect? Real photos have natural chaos; AI often creates patterns that are suspiciously uniform.

- Automated Detection Tool: Upload the image to TrueMedia.org (a free website). This tool runs the image through AI detection software that analyzes those hidden mathematical signatures we talked about earlier. It gives you a percentage likelihood that the image is AI-generated.

- Check the File’s Hidden Information: Right-click on the image file and select “Properties” (on PC) or “Get Info” (on Mac). Look at the metadata, which shows what software created the file and when. AI images often show editing software timestamps or creation tools that don’t match the supposed story of when/how the photo was taken.

- Surface Smoothness Analysis: This is different from the texture check step. Here you’re looking at surfaces that should naturally be imperfect, like walls, fabric, or water. AI tends to “airbrush” these surfaces, making them unnaturally smooth where a real photo would show small bumps, variations, or wear.

Each step catches different types of AI mistakes. Think of it like using multiple different tests to be sure of your conclusion.

Deep Investigation (High-Stakes Reporting):

Forensically: This is a set of free, comprehensive noise analysis tools with frequency domain visualization.

Frequency domain analysis: Technical detection of mathematical patterns unique to AI.
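
For readers who want a feel for what “frequency domain” means, the toy sketch below runs a naive two-dimensional Fourier transform on a tiny 8x8 grid. Everything in it is an assumption made for illustration: the function names, the striped test pattern, and the peak-to-mean ratio are mine, and real tools use fast FFT libraries on full-size images. The point is only that a hidden regular pattern, invisible as a faint ripple in pixel values, shows up as a sharp spike in the spectrum.

```python
import cmath
from math import cos, pi


def dft2_magnitudes(img):
    """Naive 2D discrete Fourier transform magnitudes (only sensible for tiny grids)."""
    n_rows, n_cols = len(img), len(img[0])
    mags = []
    for u in range(n_rows):
        row = []
        for v in range(n_cols):
            total = 0j
            for y in range(n_rows):
                for x in range(n_cols):
                    phase = -2j * cmath.pi * (u * y / n_rows + v * x / n_cols)
                    total += img[y][x] * cmath.exp(phase)
            row.append(abs(total))
        mags.append(row)
    return mags


def peak_to_mean_ratio(mags):
    """Strongest frequency component divided by the average one, ignoring overall brightness."""
    values = [m for u, row in enumerate(mags) for v, m in enumerate(row) if (u, v) != (0, 0)]
    return max(values) / (sum(values) / len(values))


# Made-up test image: mid-gray with a faint ripple that repeats every 4 pixels.
striped = [[100 + 20 * cos(2 * pi * x / 4) for x in range(8)] for _ in range(8)]

spectrum = dft2_magnitudes(striped)
print(round(peak_to_mean_ratio(spectrum), 1))  # a high ratio means one frequency dominates
```

Random sensor-like noise spreads its energy across many frequencies, so the same ratio stays low; a spike does not prove AI generation, but it tells you there is structure worth examining with a proper tool.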

Category 4: Voice & Audio Artifacts: When Synthetic Speech Betrays Itself

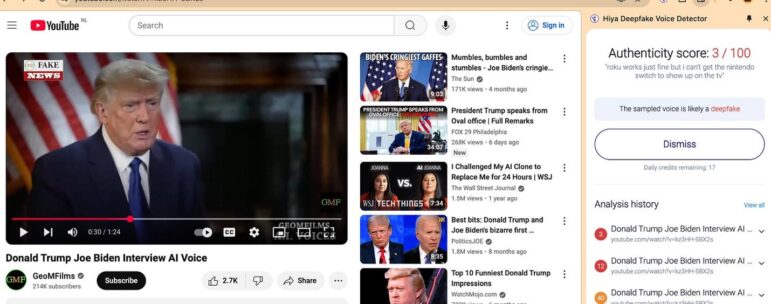

Analysis of AI-generated audio deepfake of Donald Trump. Image: YouTube, via Henk van Ess

The Narrative: Voice cloning technology can replicate anyone’s voice from seconds of audio, but it leaves detectable traces of artificial generation in speech patterns, emotional authenticity, and acoustic characteristics. While achieving impressive accuracy, synthetic voices still struggle with replicating the subtle human elements that make speech truly authentic.

Real-World Audio Deception Cases: In March 2019, the CEO of a UK energy firm received a call from his “boss” with a perfect German accent, requesting a big money transfer. Only a suspicious second call from an Austrian number revealed the AI deception. More recently, political consultant Steven Kramer paid $150 to create a deepfake robocall impersonating US President Joe Biden, which urged people not to vote in New Hampshire’s 2024 Democratic primary.

Speed and Cost of Audio Fakes: According to the lawsuit against Kramer, the deepfake took less than 20 minutes to create and cost only $1. Kramer told CBS News that he received “$5 million worth of exposure” for his efforts.

Speech Pattern Red Flags: Lindsay Gorman, who studies emerging technologies and disinformation, told NBC News that there often are tells in deepfakes: “The cadence, particularly towards the end, seemed unnatural, robotic. That’s one of the tipoffs for a potentially faked piece of audio content.”

- Unnatural pacing without normal hesitations or breathing.

- Flawless pronunciation lacking the imperfections of natural speech.

- Robotic inflection on certain words or phrases.

- Missing environmental background noise that should be present.

- Phrases or terminology the person would never actually use.

Linguistic Logic Failures: An earlier deepfake case revealed AI saying “pounds 35,000”, putting the currency type before the number in an unnatural way that exposed the synthetic generation.

30-Second Red Flag Check:

Have a look at Hiya Deepfake Voice Detector, a simple Chrome plugin (you can use it 20 times a month). It passed the Trump-Biden video test.

It’s a Chrome extension that analyzes audio in real-time to determine if what you’re hearing is a real human voice or something cooked up by AI.

Here’s what it actually does:

- Analyzes voices in videos and audio playing in your Chrome browser.

- Works instantly, requiring only one second of audio to make a determination.

- Functions across any website: social media platforms, news sites, video platforms.

- Detects human-like speech created by all major AI voice synthesis tools.

- Supports multiple languages.

- Runs in real time as you browse.

Caveat: Since the plugin uses probabilistic algorithms, it won’t be 100% accurate in every case.

Five-Minute Technical Verification (Standard Stories):

- Listen for speech naturalness: Does pacing and pronunciation feel human?

- Verify availability: Could this person actually have made this statement when claimed?

- Check emotional authenticity: Does the emotion match the content and context?

- Call back on the official number to verify any urgent audio requests.

- Ask contextual questions that only the real person would know.

Deep Investigation (High-Stakes Reporting):

- Download the full audio fragment.

- Feed it to Notta.ai and let it write a transcript.

- While you are waiting, give Claude five or six verified audio fragments, or transcripts, of the same person.

- Ask it to make a semantic analysis and categorize the themes, the speech patterns, the use of grammar, the style, and the tone of voice.

- Then upload the transcript of the audio you don’t trust and ask Claude to compare it for anomalies.
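
If you want a quick local sanity check alongside that workflow, one crude heuristic is to measure how much of the suspect transcript’s vocabulary never appears in the verified material. The sketch below is a swapped-in stand-in of my own, far simpler than what a language model does, and the function names and sample sentences are hypothetical. It only hints at “phrases or terminology the person would never actually use”; a high ratio can just as easily mean a new topic.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unseen_word_ratio(reference_texts, suspect_text):
    """Share of words in the suspect transcript that never occur in the verified transcripts."""
    known_words = set()
    for text in reference_texts:
        known_words.update(tokenize(text))
    suspect_words = tokenize(suspect_text)
    if not suspect_words:
        return 0.0
    unseen = sum(1 for word in suspect_words if word not in known_words)
    return unseen / len(suspect_words)


# Hypothetical transcripts for illustration only.
verified = [
    "We will build the bridge this year.",
    "The bridge is our priority.",
]
suspect = "We will cancel the bridge"

print(unseen_word_ratio(verified, suspect))  # 0.2: one of five words ("cancel") is new
```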

Category 5: Temporal and Contextual Logic: When AI Misses the Big Picture

AI-generated image purporting to show a news broadcast still about a Paris climate protest. Image: Henk van Ess

The Narrative: AI generates content based on visual patterns without understanding real-world context, temporal logic, or situational appropriateness. This creates content that looks convincing in isolation but falls apart under sensible scrutiny.

The Iran Prison Video Deception: A sophisticated AI-generated video claimed to show an Israeli missile strike on Iran’s Evin Prison, but was generated from a 2023 photograph. Key detection clues include seasonal mismatches (leafless shrubs in supposed summer footage), perfect detail matching that violated probability, and impossible timing.

30-Second Red Flag Check: AI creates visually convincing content, but often misses fundamental logical relationships between timing, place, and circumstance. In breaking news scenarios, trust your knowledge of the world to spot impossibilities that would take sophisticated analysis to verify later.

- Season/weather sanity check: Does vegetation, clothing, or lighting match the purported date and location?

- Technology timeline scan: Any devices, vehicles, or infrastructure that don’t belong in this time period?

- Geographic gut check: Does architecture, signage, and landscape match the claimed location?

- Source credibility flash assessment: Does the content’s origin align with its sophistication and access requirements?

Five-Minute Technical Verification (Standard Stories): This deeper analysis leverages your research skills to cross-reference claims against verifiable facts. AI struggles with the interconnected nature of real-world events, creating content that passes visual inspection but fails logical scrutiny when compared to external data sources.

- Historical weather verification: Check archived weather data for claimed date/location against visible conditions.

- Architectural landmark cross-reference: Verify that visible buildings, signs, and infrastructure exist at the claimed location.

- Cultural elements audit: Confirm that clothing styles, behaviors, and social dynamics match geographic/cultural context.

- Timeline probability assessment: Research whether claimed events could logically occur simultaneously.

- Source pattern analysis: Map how content spreads compared with typical distribution patterns for similar events.

- Multiple angle search: Look for other documentation of the same event from independent sources.

Deep Investigation (High-Stakes Reporting): For critical stories, treat contextual clues as pieces in a forensic puzzle, each requiring systematic verification against established facts. This comprehensive approach builds a probability matrix based on multiple logical inconsistencies rather than single definitive proof points.

- Comprehensive timeline reconstruction: Build a detailed chronology of claimed events and cross-reference all visual elements.

- Geographic intelligence verification: Use satellite imagery, street view, and local expertise to confirm location details.

- Seasonal/environmental forensics: Consult botanical experts, meteorologists, and local sources about environmental conditions.

- Cultural authenticity assessment: Interview regional experts about behavioral norms, dress codes, and social customs visible in content.

- Technology anachronism analysis: Verify that all visible devices, vehicles, and infrastructure existed at the claimed time and place.

- Source chain investigation: Trace complete distribution history and compare to known patterns for similar authentic events.

- Expert consultation network: Engage local journalists, academics, and officials familiar with the claimed location or situation.

- Probability matrix construction: Score each logical element and build a comprehensive assessment of content authenticity.
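
One simple way to turn that last step into something you can write down is a weighted score sheet. The sketch below is only one possible shape for it: the check names, the 0-to-1 suspicion scale, the weights, and the 0.7 flag threshold are all hypothetical values chosen for illustration, and the result supports editorial judgment rather than replacing it.

```python
def authenticity_assessment(checks, flag_threshold=0.7):
    """Combine per-check suspicion scores into one weighted score plus a list of red flags.

    Each check is (name, suspicion between 0 and 1, weight). Higher means more suspicious.
    """
    total_weight = sum(weight for _, _, weight in checks)
    if total_weight <= 0:
        raise ValueError("at least one check needs a positive weight")
    weighted = sum(suspicion * weight for _, suspicion, weight in checks) / total_weight
    red_flags = [name for name, suspicion, _ in checks if suspicion >= flag_threshold]
    return weighted, red_flags


# Hypothetical scores an editor might assign after the checks above.
checks = [
    ("season and vegetation", 0.9, 3),
    ("landmarks at claimed location", 0.2, 2),
    ("timeline plausibility", 0.8, 1),
]

score, flags = authenticity_assessment(checks)
print(round(score, 2))  # 0.65
print(flags)            # ['season and vegetation', 'timeline plausibility']
```

Writing the scores down has a side benefit: when a colleague disagrees with your conclusion, you can see exactly which element you weighed differently.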

Category 6: Behavioral Pattern Recognition: When AI Gets Humans Wrong

AI-generated image purporting to show protesters marching in a city street. Image: Henk van Ess

The Narrative: AI can replicate human appearance but struggles with authentic human behavior, social dynamics, and natural interaction patterns. This creates detectable inconsistencies in crowd scenes, group dynamics, and individual behavior that trained observers can spot.

30-Second Red Flag Check (Breaking News): AI creates crowds that look realistic at first glance but betray their inauthenticity through unnatural behavior patterns. In breaking news situations, focus on whether people are acting like real humans would under the given circumstances, not digital actors following programmed behaviors.

- Crowd uniformity scan: Are there too many people of similar age, appearance, or style of dress?

- Attention pattern check: Is everyone looking in the same direction or at the camera, or are there natural variations in attention?

- Emotional authenticity gut check: Do facial expressions match the supposed mood and intensity of the event?

- Movement realism assessment: Natural human spacing and body language rather than artificial positioning?

Five-Minute Technical Verification (Standard Stories): This analysis leverages your understanding of human social dynamics to identify AI’s shortcomings in replicating authentic group behavior. Real crowds exhibit complex social patterns that AI training data cannot fully capture, creating detectable artificial uniformity in supposedly spontaneous gatherings.

- Demographic diversity audit: Count age ranges, clothing styles, ethnic representation versus artificial uniformity.

- Social interaction mapping: Identify genuine conversations, relationships, and group dynamics versus staged positioning.

- Environmental response verification: Are people reacting appropriately to weather, lighting, and noise levels?

- Cultural behavior cross-check: Do social norms, personal space, and interaction styles match the claimed setting?

- Individual expression analysis: Look for unique facial expressions and genuine emotions versus uniform or generic responses.

- Movement pattern assessment: Are there natural asymmetries and personal quirks versus artificially smooth motions?

Deep Investigation (High-Stakes Reporting): For critical stories, treat human behavior as anthropological evidence requiring a systematic analysis of social patterns. This comprehensive approach examines whether the complex web of human interactions could authentically occur in the alleged circumstances.

- Sociological crowd analysis: Consult experts on crowd psychology to verify realistic group dynamics for the alleged event type.

- Cultural authenticity verification: Interview regional specialists about appropriate social behaviors, dress codes, and interaction patterns.

- Demographic probability assessment: Research whether the crowd composition matches typical attendance patterns for similar events.

- Individual behavior forensics: Analyze specific people for consistent personalities, relationships, and authentic emotional responses.

- Environmental adaptation study: Verify that crowd responses to weather, acoustics, and logistics match real-world patterns.

- Historical comparison research: Compare to verified footage or photos from similar authentic events in the same region or cultural context.

- Expert consultation network: Engage anthropologists, sociologists, and local journalists familiar with regional social dynamics.

- Micro-expression analysis: Consult experts to examine facial expressions for authentic emotional responses vs. artificial generation.

- Social network mapping: Trace relationships between individuals to verify the authenticity of group formation versus artificial assembly.

Category 7: Intuitive Pattern Recognition: The Ancient Detection System

AI-generated image purporting to show the Belarusian president incongruously holding a cone of fries at an official meeting. Image: Henk van Ess

The Narrative: Our brains evolved pattern recognition over millions of years. AI’s patterns come from training data and algorithmic processes. When something violates natural expectations built into human perception, that gut feeling often represents the fastest and most reliable initial detector before technical analysis.

Real-World Success Stories: In 2018, social media users immediately flagged a viral “street shark” image during Hurricane Florence. While the image was technically competent, viewers felt it seemed wrong for the situation. Their instincts proved correct: reverse searches revealed digital insertion. Similarly, experienced journalists can sense when amateur footage looks suspiciously cinematic or when perfect documentation exists for supposedly spontaneous events.

Fun fact: alleged street-shark sightings have circulated during hurricanes for more than a decade, but one example was verified.

30-Second Red Flag Check: Trust your evolutionary pattern recognition when time is critical. Look for the production quality paradox where amateur sources produce Hollywood-level content, or timing convenience where chaotic events are perfectly documented. Your ancient detection system often spots these violations before technical analysis can confirm them.

- First impression reaction: Does this feel authentic or “produced”? (Trust your feelings of unease toward artificial representations of human beings, also known as “uncanny valley” feelings.)

- Production-cost paradox: Does the amateur source align with the cinematic quality ($8 can now produce professional-looking political scandals)?

- Timing convenience check: Does the perfect documentation of events happen too fast for normal recording?

- Emotional manipulation test: Is the content designed to trigger an emotional reaction and quick sharing rather than to inform the reader?

Five-Minute Technical Verification (Standard Stories): Transform intuitive feelings into systematic verification by examining specific elements that triggered your pattern recognition abilities. When your gut says something feels off, identify what specifically violates natural expectations to build a logical case.

- Context logic deep check: Does the scenario make practical sense given real-world knowledge?

- Source credibility investigation: Does the origin match the content’s sophistication and access requirements?

- Narrative convenience analysis: Do the stories align too perfectly with current political or social tensions?

- Technical inconsistency audit: Does the quality, lighting, or audio match the alleged circumstances?

- Pattern violation catalog: Document specific elements that trigger suspicious feelings or contextual impossibility.

- Source-content mismatch assessment: Flag sophisticated content from amateur or anonymous sources with no explanation.

Deep Investigation (High-Stakes Reporting): For critical stories, treat intuitive detection as the starting point for comprehensive verification. Your pattern recognition identified anomalies; now systematically examine each element that triggered suspicion to build an evidence-based assessment.

- Gut feeling forensics: Catalog every element that feels “off” and research why each violates natural expectations.

- Production paradox investigation: Calculate the actual resources needed as compared with an amateur’s supposed capabilities.

- Contextual impossibility analysis: Map scenarios against real-world knowledge and insights from expert consultation.

- Emotional manipulation assessment: Analyze content structure for designed viral triggers versus organic information sharing.

- Technical inconsistency deep dive: Analyze quality, lighting, and audio mismatches frame-by-frame.

- Source authenticity verification: Investigate whether claimed origins could realistically produce this content.

- Narrative engineering detection: Examine story construction for artificial convenience versus natural event development.

- Pattern violation expert consultation: Engage experienced investigators in independent, intuitive assessments.

- Trust threshold analysis: Document when multiple elements seem “off” and justify rejecting content despite technical competence.

When to Trust Your Gut:

- If multiple elements feel “off” even if you can’t identify specific problems.

- When content triggers an immediate emotional response that seems designed to prevent analysis.

- If the source, timing, or context raises logical questions.

- When technical quality doesn’t match the experience level of the producer.

- If your brain detects the kind of production quality paradox or timing convenience that the $8 fake-scandal experiment demonstrated.

The Reality: No Perfect Solutions

Bottom line: These seven categories of AI detection (anatomical failures, physics violations, technical fingerprints, voice artifacts, contextual logic, behavioral patterns, and intuitive recognition), together with the new tool, give journalists a comprehensive toolkit to assess content authenticity under deadline pressure. Combined with professional detection tools and updated editorial standards, we can maintain credibility. Fight fire with fire. Use AI to detect AI. And help preserve what’s left of our shared reality.

Dutch-born Henk van Ess is cutting through AI to find stories in data. He applies that in investigative research and builds tools for public use like SearchWhisperer and AI Researcher. A trainer in newsrooms worldwide, including the Washington Post, Axel Springer, BBC, and DPG, he runs Digital Digging, where open source intelligence meets AI. He serves as an assessor for Poynter’s International Fact-Checking Network (IFCN) and the European Fact-Checking Standards Network (EFCSN).
