AI programs are here, and one of their missions is to take over the writing space.

Large language models like ChatGPT are among the newest AI programs that can generate a variety of texts. After being trained on billions of words from the internet, including websites, articles, and Reddit discussions, these models can now produce human-like text.

Individuals and companies are already using AI text generators to churn out thematically relevant essays, press releases, and even songs! But there are risks.

The Dangers of AI Writing

From misinformation to a watered-down brand voice, using AI to produce content carries several risks. Here are the top five dangers of using AI to generate written content.

1. Misinformation

Generating text using AI is easy. You only need to write a prompt, and the AI text generator will add content it believes could logically follow.

Although ChatGPT and other AI writing tools can write text that looks plausible, their assertions are not necessarily accurate. AI is only as good as the data it’s trained on, meaning it’s susceptible to bias and misinformation.

For instance, Stack Overflow, an online community for developers, temporarily banned users from sharing answers generated by ChatGPT, stating that “the average rate of getting correct answers from ChatGPT is too low.”

Sharing incorrect information can be particularly harmful on topics that could affect someone’s health or finances.

2. Quality Concerns and Possible Plagiarism

AI-based content generators are language models trained on large amounts of text, much of it gathered from the web, which they draw on to generate new content. But now and then, they fall short of producing good-quality content.

AI writing tools don’t actually understand the text they generate; they reproduce and recombine patterns learned from their training data. This can result in content with issues like misplaced statistics and sentences that lack flow and coherence.

Another drawback of using AI to write is the increased likelihood of plagiarism, whether intentional or not. The Guardian reports that one of the reasons schools in New York City banned ChatGPT is the risk of students using it to write assignments and passing them off as their own work.

3. Algorithms Devaluing Your Content

Publishers who use AI to write risk having their site penalized by search engines. According to Google, sites with lots of unhelpful content are less likely to rank well in Search. Google states that its team is constantly working to ensure users see more original, helpful content written by people, for people, in search results.

As previously stated, AI-written text may be incoherent and contain factual inconsistencies, making it unhelpful. This means if your site relied on AI content and took a hit after Google’s helpful content update, it could be because the search engine found the content unhelpful or lacking in accuracy.

4. Lack of Original Ideas, Creativity, and Personalization

Since AI tools use existing data to generate text, they tend to produce content similar to what already exists. This can be a big problem for people or brands that want to stand out from the competition with original content.

Creative content tends to be engaging and more shareable. The more your content is shared, the more you’ll be able to raise awareness and build relationships with people.

Thought-provoking and unique content often includes personal experiences, opinions, and analysis, which are a few areas where AI falls short, at least at present.

5. Reputational Risk and Watered-Down Brand Voice

Reputational risk refers to any threat or danger that can damage an individual’s or company’s good name or standing. This risk can arise if your brand publishes error-ridden AI-written posts that your audience finds distasteful.

People want to feel connected, so brands that feel relatable on a human level tend to be more attractive.

A brand’s voice can make you smile, cry, and even feel empowered. Unfortunately, while many AI text generators allow you to set the tone of voice when generating content, the results are hit or miss.

How to Spot AI-Generated Text

Do you want to know if a human or AI wrote what you’re reading? Being able to spot AI-generated text can help you evaluate its credibility. Here are a few ways to spot AI-generated text.

1. Look for Repetition of Words and Phrases

Even though GPT-3 can generate coherent sentences, AI writing tools frequently appear to have difficulty producing long-form, high-quality content.

Many users report that after generating a few high-quality paragraphs on a specific subject, some AI text generators will either start to repeat themselves or produce irrelevant sentences. This may be because the AI tool keeps adding what it treats as relevant sentences to reach the target word count.

So, if you’re reading an article and the same words and phrases seem to be used repeatedly, it may have been written by an AI writing assistant. Many AI text generators tend to repeat words like “the,” “it,” and “is” more frequently.

Also, if an article seems to follow a specific pattern or formula, it may have been generated by AI. This kind of writing often makes content predictable and mind-numbing.
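If you want a rough number to go with that impression, repetition can be measured. The sketch below is only an illustration, not how any particular detector works: the function name `repeated_ngram_rate` and the choice of three-word phrases are assumptions made for this example. It reports what share of the three-word phrases in a text belong to a phrase that appears more than once.

```python
import re
from collections import Counter


def repeated_ngram_rate(text: str, n: int = 3) -> float:
    """Return the share of n-word phrases that belong to a phrase used more than once."""
    # Lowercase the text and keep only simple word tokens.
    words = re.findall(r"[a-z']+", text.lower())
    # Build every run of n consecutive words.
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of a phrase that shows up at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


sample = (
    "Our product is easy to use. Our product is easy to set up. "
    "Our product is easy to love."
)
print(f"Repeated phrase rate: {repeated_ngram_rate(sample):.2f}")
```

A high score only tells you the text is repetitive. Plenty of human writing repeats itself too, so treat the number as one clue among several.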

2. Search for Unnatural Transitions and Errors

AI tools may be incapable of correctly interpreting the context of a piece of writing. This could lead to content that lacks the coherence and logical flow of human-written content.

AI-generated content is sometimes characterized by grammatical mistakes, punctuation errors, and even paragraphs with a disjointed structure.

Paradoxically, though, flawless grammar can also be a sign of machine-generated text. This is because grammar is rule-based, and AI systems are usually better than people at following rules consistently.

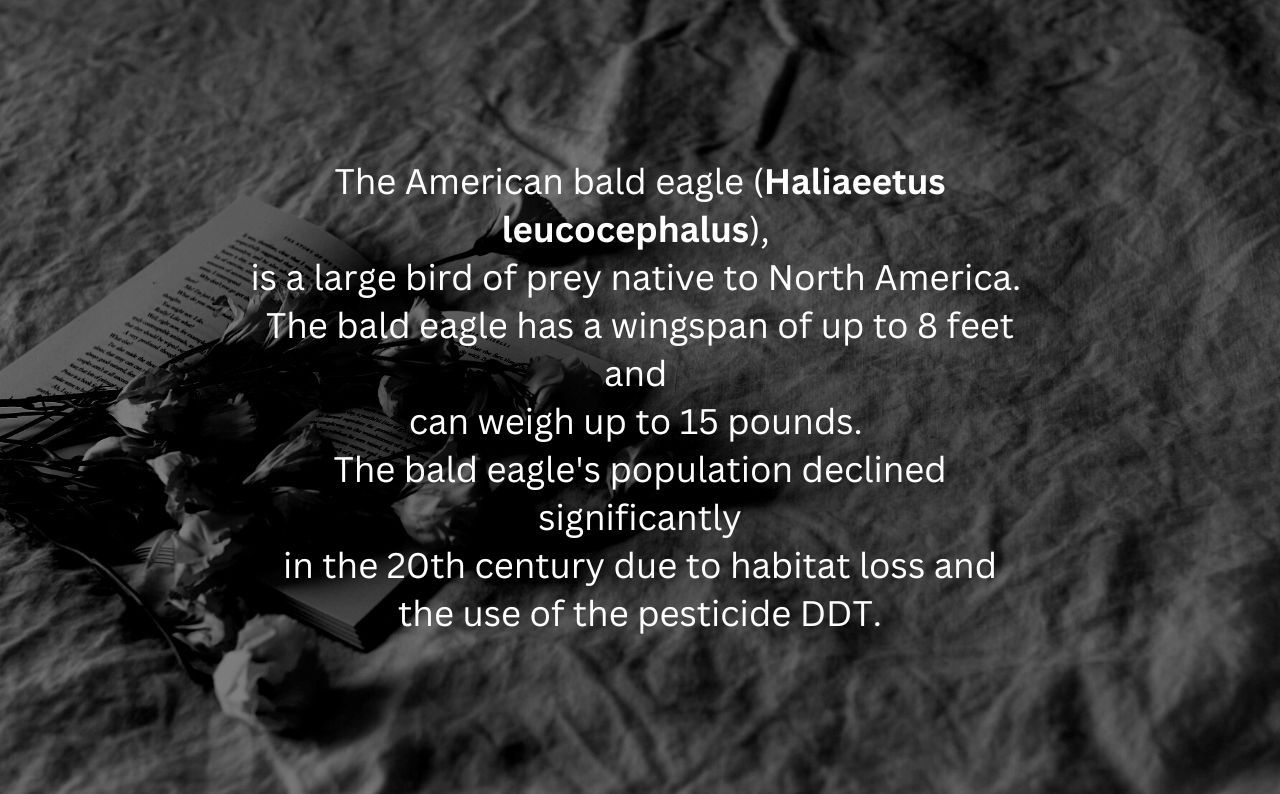

3. Examine Any Fact-Heavy, Dry Texts

An AI writing tool can quickly generate facts and simple sentences but not in-depth commentaries. So if you’re reading an article and notice it’s heavy on facts and light on opinions, it may have been written by a bot.

4. Check for the Absence of Emotions or Personal Experiences

One obvious problem with current AI writing tools is their inability to display human emotions and subjective experiences. These things are hard to teach because there are no logical steps explaining how to feel or perceive the world. If you notice an article has a flat tone or emotions that feel forced, a bot may have written it.

5. Use an AI Content Detector Tool

A common way of determining whether you’re reading AI-generated text is using software that examines text components like readability, sentence length, and word repetition. Popular AI content detector tools include GPT-2 Output Detector, GLTR (Giant Language Model Test Room), and GPTZero.

You can also use a paid or free plagiarism checker to see if the text is original. If it’s duplicate content, it may have been written using AI.

Remember, though, AI content detector tools aren’t perfect and may be ineffective against new language models and small amounts of text.
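To get a feel for the kind of surface features such tools look at, here is a minimal sketch that measures sentence length and word repetition. It is a toy example under stated assumptions and does not reproduce how GPT-2 Output Detector, GLTR, or GPTZero actually work. The function name `surface_features` and the particular measurements (mean sentence length, how much sentence length varies, and the ratio of unique words to total words) are choices made for illustration only.

```python
import re
from statistics import mean, pstdev


def surface_features(text: str) -> dict:
    """Compute a few simple statistics about sentence length and word variety."""
    # Split on sentence-ending punctuation and drop empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "sentences": len(sentences),
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # A low value means the sentences are all about the same length.
        "sentence_length_stdev": pstdev(lengths) if lengths else 0.0,
        # Unique words divided by total words; lower means more repetition.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


article = (
    "AI tools are useful. AI tools are fast. AI tools are cheap. "
    "But sometimes, after a long afternoon of editing, you notice the rhythm never changes."
)
for name, value in surface_features(article).items():
    print(f"{name}: {value:.2f}")
```

None of these numbers proves anything on its own. Very even sentence lengths and a low share of unique words are hints worth a closer look, not a verdict.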

AI-Generated Text: The Risks and How to Spot It

Although AI writing tools, like ChatGPT, can be helpful in specific contexts, it’s important to use them cautiously and consider the potential risks.

The dangers of using AI-generated text include misinformation, plagiarism, search engine penalties, and reputational damage.

Being able to spot AI-generated text can help you evaluate its trustworthiness. While there’s no surefire method for spotting AI-generated text, certain characteristics such as repetition of words, unnatural transitions, errors, and absence of emotions can be indicators.