It was April of 2018, and a day like any other until the first text arrived asking “Have you seen this yet?!” with a link to YouTube. Seconds later, former President Barack Obama was on screen delivering a speech in which he proclaimed President Donald Trump “is a total and complete [expletive].”

Except, it wasn’t actually former President Obama. It was a deepfake video produced by Jordan Peele and BuzzFeed. The whole point of the video was to raise awareness of the AI capabilities available at the time for creating talking heads with voiceovers. In this case, Peele used Barack Obama’s likeness to deliver a message: be cautious, be wary, and stick to trusted news sources.

That was six and a half years ago – or several aeons in AI time.

How can we trust any sources now?

Since then, we’ve made countless strides in AI technology, leaps and bounds beyond the technology Peele used to create his Obama deepfake. GPT-2, which could generate text from a simple prompt, was introduced to the public in 2019.

2021 saw the release of DALL-E, an AI image generation tool capable of fooling even some of the keenest eyes with its photorealistic imagery. 2022 brought further improvements with DALL-E 2, and Midjourney was also released that year. Both take text inputs for subject, situation, action and style to output unique artwork, including photorealistic images.

A picture of my tiger-striped dog jumping off a slide having just caught a frisbee. Just kidding, it’s actually an AI-generated image made with Google’s ImageFX and watermarked with SynthID.

In 2024, generative AI has gone absolutely bananas. Meta’s Make-A-Video allows users to generate five-second-long videos from text descriptions alone, and Meta’s new Movie Gen has taken AI video generation to new heights. OpenAI Sora, Google Veo, Runway ML, HeyGen… With text prompts, we can now generate anything our wildest imaginations can think of. Perhaps even more, since AI-generated videos sometimes run amok with our inputs, leading to some pretty fascinating and psychedelic visuals.

Synthesia and DeepBrain are two more AI video platforms specifically designed to deliver human-like content using AI-generated avatars, much like newscasters delivering the latest news on your favorite local channel. Speaking of which, your entire local channel might soon be AI-generated, like the remarkable Channel One. And there are so many more.

What’s real and what’s fake? Who can tell the difference? Certainly not your aunt on Facebook who keeps sharing those ridiculous images. The concepts of truth, reality and veracity are under attack, with repercussions that reverberate far beyond the screen. So, to give humanity some chance against the incoming tsunami of lies, Google DeepMind has developed a technology to watermark and identify AI-generated media – it’s called SynthID.

SynthID can separate authentic content from AI-generated content by digitally watermarking the AI content in a way that humans can’t perceive but that software specifically looking for the watermark can easily recognize.

This isn’t just for video, but also images, audio, and even text. It does so, says DeepMind, without compromising the integrity of the original content.

Text AI watermarking

Large Language Models (LLMs) like ChatGPT use “tokens” for reading input and generating output. Tokens are basically whole words or phrases, or parts of them. If you’ve ever used an LLM, you’ve likely noticed that it tends to repeat certain words or phrases in its responses. Patterns are common with LLMs.

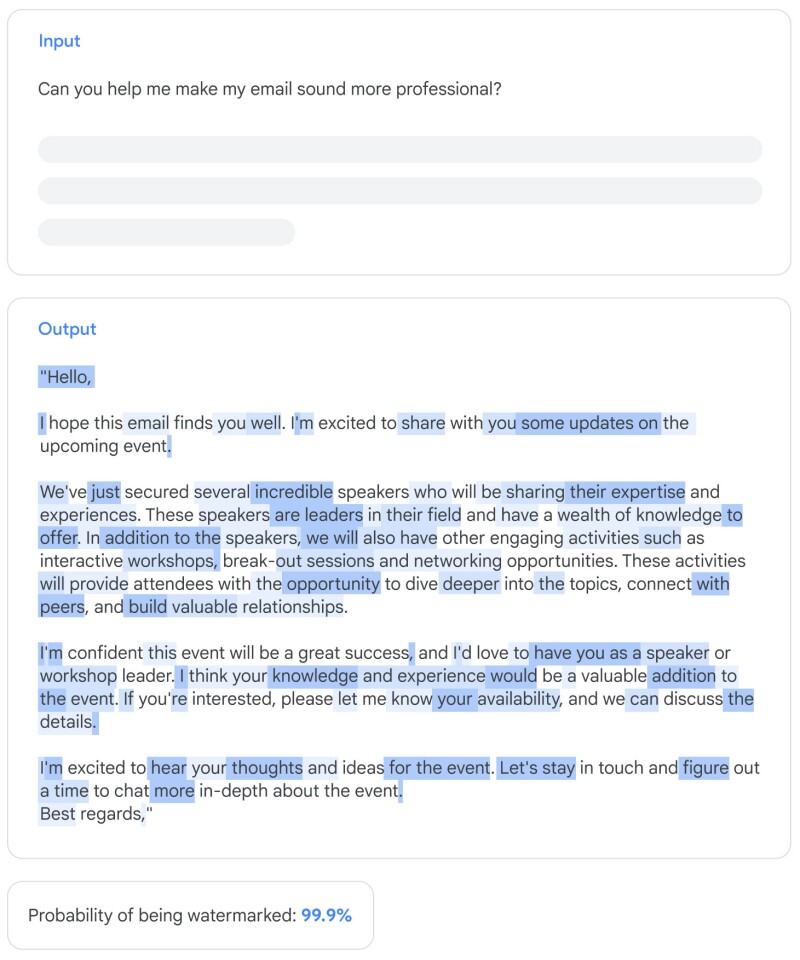

How SynthID watermarks AI-generated text is pretty tricky, but put simply, it subtly manipulates the probabilities of different tokens throughout the text. It might tweak ten probabilities in a sentence, hundreds on a whole page, leaving what DeepMind calls a “statistical signature” in the generated text.

SynthID uses complex token prediction algorithms as a watermark for text files
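To make the idea of nudging token probabilities concrete, here is a minimal Python sketch. It is not DeepMind’s actual algorithm: the secret key, the `BIAS` value, the hash-based `is_favored` rule and the four-word vocabulary are all assumptions made up for illustration. A keyed hash of the previous token and each candidate marks some candidates as favored, and those get a modest boost before the probabilities are renormalized.

```python
import hashlib
import math

SECRET_KEY = b"demo-key"  # hypothetical watermarking key
BIAS = 0.5                # hypothetical boost applied to favored tokens


def is_favored(prev_token, candidate):
    """Keyed hash decides, per context, whether a candidate token is favored."""
    payload = SECRET_KEY + prev_token.encode() + b"|" + candidate.encode()
    return hashlib.sha256(payload).digest()[0] % 2 == 0


def watermark_probs(prev_token, probs):
    """Nudge next-token probabilities toward favored tokens, then renormalize."""
    weights = {
        token: p * (math.exp(BIAS) if is_favored(prev_token, token) else 1.0)
        for token, p in probs.items()
    }
    total = sum(weights.values())
    return {token: w / total for token, w in weights.items()}


# Toy next-token distribution after the word "the" (made-up numbers).
model_probs = {"quick": 0.4, "fast": 0.3, "speedy": 0.2, "rapid": 0.1}
marked = watermark_probs("the", model_probs)

for token, p in model_probs.items():
    print(token, round(p, 3), "->", round(marked[token], 3))
```

Every candidate word stays possible, so the text can still read naturally, but only someone holding the key knows which words were nudged.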

It still comes out perfectly readable to humans, and unless you’ve got pattern recognition skills bordering on the paranormal, you’d have no way to tell.

But a SynthID watermark detector can tell, with greater accuracy as the text gets longer – and since there are no specific character patterns involved, the digital watermark should be fairly robust against a degree of text editing, as well.
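Why longer text helps can be shown with a little arithmetic. The sketch below is a generic statistical test, not DeepMind’s detector, and it rests on an assumption: that a keyed rule marks about half of all possible tokens as “favored”, so ordinary text hits favored tokens roughly 50% of the time. The function name `detection_z_score` and the 60% hit rate are hypothetical.

```python
import math


def detection_z_score(favored_hits, total_tokens, chance_rate=0.5):
    """How many standard deviations the favored-token count sits above chance."""
    expected = total_tokens * chance_rate
    std_dev = math.sqrt(total_tokens * chance_rate * (1 - chance_rate))
    return (favored_hits - expected) / std_dev


# The same 60% hit rate, measured over texts of increasing length.
for favored_hits, total_tokens in ((12, 20), (120, 200), (1200, 2000)):
    z = detection_z_score(favored_hits, total_tokens)
    print(total_tokens, round(z, 2))
# prints:
# 20 0.89
# 200 2.83
# 2000 8.94
```

A 60% hit rate over 20 tokens is easily explained by luck, while the same rate over 2,000 tokens is almost impossible to get by chance. That is the sense in which detection gets more accurate as the text gets longer.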

Audio and video AI watermarking

Multimedia content should be considerably easier, since all sorts of information can be coded into unseen, unheard artifacts in the files. With audio, SynthID creates a spectrogram of the file and drops in a watermark that’s imperceptible by the human ear, before converting it back to waveform.

SynthID’s watermarked spectrogram of an audio file
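The round trip from waveform to frequency view and back can be sketched in a few lines of Python. This is a toy under heavy assumptions, not SynthID’s method: a single Fourier-transformed frame stands in for a full spectrogram, and the key, bin count, strength and detection threshold are all invented for illustration. A secret key picks a handful of frequency bins, a small amount is added to each, and the result is converted back to a waveform. A detector with the same key checks whether those bins line up with the keyed pattern.

```python
import cmath
import math
import random

FRAME_SIZE = 256  # samples in the single analysis frame (assumption)
NUM_BINS = 32     # number of frequency bins that carry the mark (assumption)
STRENGTH = 1.0    # amount added to each chosen bin (assumption)


def dft(samples):
    """Naive discrete Fourier transform: waveform -> frequency bins."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(bins):
    """Inverse transform: frequency bins -> real-valued waveform."""
    n = len(bins)
    return [sum(bins[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def keyed_pattern(key):
    """Derive the secret bin positions and signs from the key."""
    rng = random.Random(key)
    positions = rng.sample(range(1, FRAME_SIZE // 2), NUM_BINS)
    return [(k, rng.choice((-1, 1))) for k in positions]


def embed(samples, key):
    """Add the keyed pattern in the frequency domain, then return a waveform."""
    bins = dft(samples)
    for k, sign in keyed_pattern(key):
        bins[k] += sign * STRENGTH
        bins[FRAME_SIZE - k] += sign * STRENGTH  # mirror bin keeps the waveform real
    return idft(bins)


def score(samples, key):
    """Average agreement between the spectrum and the keyed pattern."""
    bins = dft(samples)
    return sum(sign * bins[k].real for k, sign in keyed_pattern(key)) / NUM_BINS


# One frame of a pure tone with a little background noise (made-up test signal).
noise = random.Random(0)
original = [math.sin(2 * math.pi * 10 * t / FRAME_SIZE) + noise.gauss(0, 0.01)
            for t in range(FRAME_SIZE)]
marked = embed(original, key=42)

is_marked = score(marked, key=42) > 0.5            # threshold is an assumption
is_original_marked = score(original, key=42) > 0.5
largest_change = max(abs(a - b) for a, b in zip(original, marked))
print(is_marked, is_original_marked, round(largest_change, 3))
```

The mark is spread thinly across many frequencies, so each individual sample changes only a little while the keyed detector still sees a clear signal. A real system would also have to shape the mark around what human hearing can pick up, which this sketch does not attempt.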

For photos and video, the watermark is simply embedded into the pixels in a non-destructive way. It is still detectable even if the image or video has been altered with filters or cropping.

The SynthID watermark is imperceptible to the human eye, as shown here

Google has open-sourced the SynthID tech and is encouraging companies building generative AI tools to use it. There’s more at stake here than just people being fooled by AI fakes. The big companies themselves need AI-generated content to be distinguishable from human-generated content for a different reason: so that the AI models of tomorrow are trained on ‘real’ human-generated content rather than AI-generated BS.

If AI models are forced to eat too much of their own excrement, all the ‘hallucinations’ prevalent in today’s early models will become part of the new models’ understanding of ground truth. Google definitely has a vested interest in making sure the next Gemini model is trained on the best possible data.

At the end of the day though, schemes like SynthID are very much opt-in. Companies that opt out, and whose GenAI text, images, videos and audio are that much harder to detect, will have a compelling sales pitch for anyone who really wants to twist the truth or fool people, from election interference types to kids who can’t be bothered writing their own assignments.

Perhaps countries could legislate to make these watermarking technologies mandatory, but then there will certainly be countries that elect not to, and shady operations that build their own AI models to get around any such restrictions.

But it’s a start – and while you or I may still initially be fooled by Taylor Swift videos on TikTok giving away pots and pans, with SynthID technology, we’ll be able to check their authenticity before sending in our $9.99 shipping fee.

Source: Google DeepMind