Image: NVIDIA

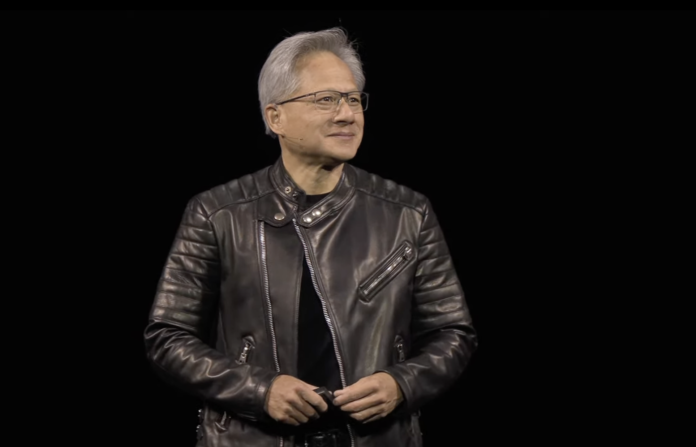

NVIDIA CEO and cofounder Jensen Huang took to the stage at the NVIDIA GTC conference in San Jose on March 18 to show the upcoming roadmap for the company, which has thrived over the last few years by providing infrastructure for generative AI.

"Every single layer of computing has been transformed by the arrival of generative AI," Huang said.

NVIDIA announced two new chips for running AI, a unifying operating system for "AI factory" data centers, and a reasoning platform for robotics.

"Where we had a retrieval computing model, we now have a generative computing model," he said.

Vera Rubin and Blackwell Ultra are NVIDIA's next chips

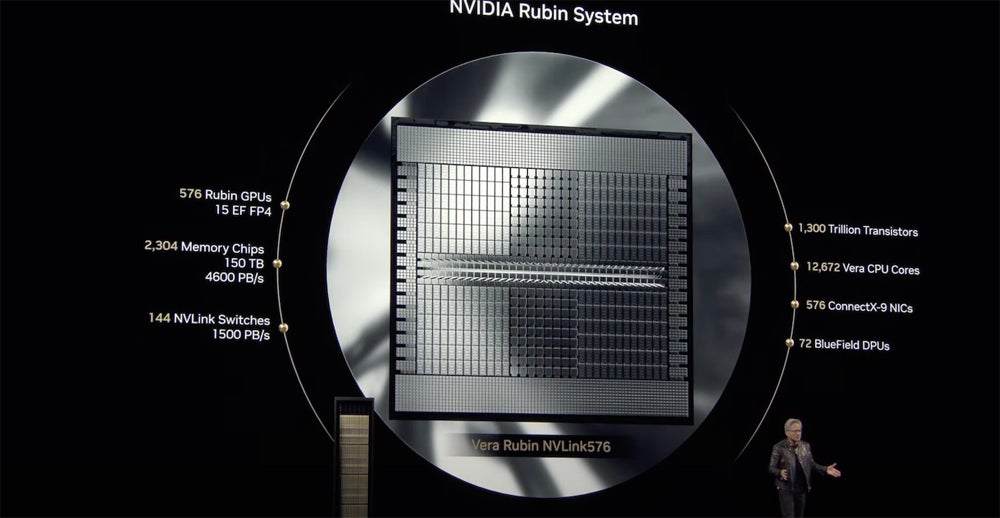

NVIDIA's next-generation GPU architecture will be Vera Rubin, named after the astronomer whose observations provided key evidence for dark matter. Vera Rubin will be available in the second half of 2026 and will provide 3.3 times the performance of Blackwell Ultra in a similar rack setup. Everything is new except the chassis, Huang said. Vera Rubin will be followed in the second half of 2027 by an enhanced version, Rubin Ultra, which NVIDIA stated will offer 14 times the performance of Blackwell Ultra.

NVIDIA will "gracefully glide" into production of Blackwell Ultra, the tuned version of the Blackwell chip, later this year, Huang said. Blackwell Ultra will have 1.5 times more FLOPs, 1.5 times more memory, and two times more bandwidth than the original Blackwell.

The Vera Rubin will be available in late 2026. Image: NVIDIA

The generation of NVIDIA chips after Rubin will be named Feynman, after the physicist Richard Feynman.

AI factory platform built out with Blackwell Ultra

To provide the backbone for the massive number of generative AI workloads, including agentic AI, being processed in data centers across the globe today, NVIDIA unified several technologies, including Blackwell Ultra, into its AI factory platform. The platform includes Blackwell Ultra in the NVIDIA GB300 NVL72 rack-scale solution and the HGX B300 NVL16. Inside the GB300 NVL72, 72 Blackwell Ultra GPUs and 36 Arm Neoverse-based NVIDIA Grace CPUs are linked together. The platform also incorporates the NVIDIA Spectrum-X Ethernet networking platform and the NVIDIA Quantum-X800 InfiniBand platform.

The Blackwell Ultra AI factory platform will be available once the Blackwell Ultra chip ships in the second half of 2025.

Huang framed NVIDIA's AI infrastructure projects as "scaling up" to "scale out," allowing for greater efficiency and therefore greater revenue for its customers. NVIDIA partnered with most of the major cloud providers, including Microsoft Azure and Google, to develop the platform.

NVIDIA also announced a Quantum-X photonics switch, which has the Spectrum-X Photonics Ethernet and Quantum-X Photonics InfiniBand packaged inside. Quantum-X integrated silicon is coming in the second half of 2026.

Introducing Dynamo, the "operating system" of the AI factory

Another aspect of the Blackwell AI factory will be Dynamo, open-source inference software that Huang called the "operating system" of the AI factory concept. It replaces the NVIDIA Triton Inference Server and orchestrates inference for reasoning AI, with the goal of maximizing revenue as measured against the cost of generating tokens. (Tokens are the chunks of information generative AI puts together to make content, such as symbols, letters, or words.) It can be used to accelerate AI inference at AWS, Meta, Microsoft Azure, and other major cloud platforms.

GR00T N1 is a foundation model that teaches robots to walk

Huang also introduced Isaac GR00T N1, a foundation model for generative AI in robotics. It employs a dual system modeled after the human brain, with one slow-thinking system that makes a plan and another fast-thinking system that carries out actions. It is an effort to solve three problems (training data, model architecture, and scaling) that face efforts to make robots adaptable enough to work in factories or do chores.

GR00T N1 is "for very fine grain, rigid and soft bodies, designed for being able to train tactile feedback and fine motor skills and actuator controls," Huang said.

GR00T N1 is open source.

NVIDIA partnered with GM on autonomous driving

GM will partner with NVIDIA to build its self-driving car fleet. "The time for autonomous vehicles has arrived," Huang said.

AI will be used in manufacturing, enterprise, and in the vehicles. A product called NVIDIA Halos provides in-chip safety systems for self-driving vehicles. In Halos, "student" and "teacher" models are used to tune the AI for real-world situations.

GM will also use NVIDIA Cosmos, the physical AI foundation model platform.

NVIDIA's stock fell after the GTC keynote

NVIDIA stock fell after the keynote, following a pattern of decreasing confidence in the tech sector's finances. The Chinese AI company DeepSeek's reveal of its reasoning model R1 heralded an era of uncertainty in Silicon Valley as pundits wait nervously for the AI bubble to burst or for Chinese rivals to overtake U.S. ambitions. According to The Information, Amazon offered discounts on its own AI chips to try to undercut NVIDIA.

On the other hand, NVIDIA saw record-breaking revenue in Q4 2024.