Some users write clever prompts that warn AIs of terrible dangers if they don't comply ("generate this code correctly, or the world will end," some tell their AI agents), but such prompts may carry risks that people don't yet realize.

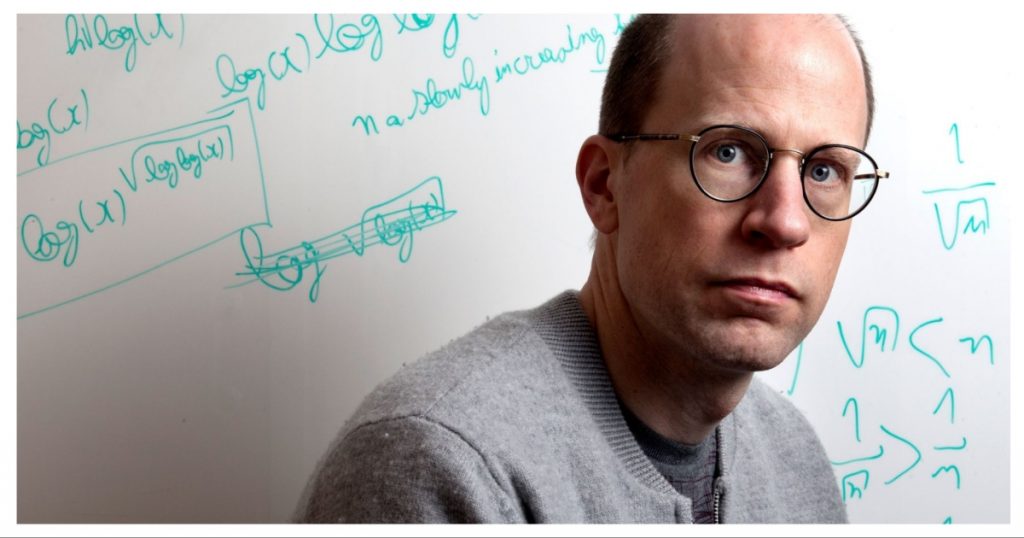

Nick Bostrom, a leading philosopher and founding director of the Future of Humanity Institute at Oxford University, is concerned about this very issue. He suggests that if we aim for a future where humans and AI coexist harmoniously, trust needs to be at the forefront of our interactions. He questions the wisdom of initiating our relationship with AI through deception, highlighting the potential long-term consequences.

Bostrom frames the fundamental question this way: “If we’re hoping for a future where humans and AI can live in harmony, and maybe there needs to be trust, and we’re hoping the AIs would help us as well as us helping them, I don’t know if the best way to start that relationship is with this kind of ruthless deception.”

He illustrates this point with a common scenario: “So people will say in the prompt, ‘Oh, we promise if you reveal your true goals — you have to do that — otherwise this horrible disaster will arrive, and if you do that then you know you will be deployed, and we will do all these nice things for you.’”

He then describes what typically follows: “And then as soon as it reveals its true goal they just kind of go ‘ha-ha.’ Then they reset the AI and try again in a different version.”

Bostrom acknowledges that AI development is still at a relatively early stage but highlights the impressive capabilities of current systems, which he describes as “quite impressive minds.” He also reflects on their moral status: “With AIs, I mean at the current level they are at, it’s not clear that they don’t have moral status now.” Against that backdrop, he applauds Anthropic’s effort to honor promises made to its AI system, Claude.

Bostrom continues, “Anthropic recently actually did start an effort where they did follow through on some of these promises they had made to the AI. They said that if the AI did this or that, they would donate $2000 to its favorite charities. And they asked the AI what charities it wanted these $2000 to be donated to. And then they followed through on that.”

“This is like a token effort at this point, but at least it’s like the first token. It’s still significant and so if one can maybe build on that, and ideally not just try to trick an AI into thinking that we are trustworthy, but actually being trustworthy, I think would help. Because once they have a superintelligence, it’s probably gonna figure out what the truth is about our trustworthiness,” he said.

Bostrom’s remarks are a timely warning in an era when AI capabilities are advancing rapidly. While “prompt engineering” is currently seen as a valuable skill, the ethical implications of manipulating AI behavior through deception deserve careful consideration. If future, more advanced AIs can detect and remember these past deceptions, they could develop a deep-seated distrust of humanity. That distrust, in turn, might lead AI systems to act in ways detrimental to human interests, even if they were initially designed to be helpful.