European machine learning operations startup Comet ML Inc. is evolving its MLOps platform to work with large language models of the kind that powers ChatGPT.

The startup said today it’s adding a number of “cutting-edge” LLM operations tools to its platform to help development teams with prompt engineering and LLM workflow management, with the aim of improving overall model performance.

Founded in 2017, Comet pitches itself as doing for artificial intelligence and machine learning what GitHub did for code. The company’s platform allows data scientists and engineers to track their datasets, code changes, experimentation history and production models automatically. In doing so, Comet says, its platform creates efficiency, transparency and reproducibility.

Announcing its new tools today, Comet said data scientists working on natural language processing AI now spend far less time training their models. Instead, they spend much more time trying to craft the right prompts to solve newer and more complex problems. The problem is that existing MLOps platforms lack the tools needed to manage these prompts and analyze their performance.

“Since the release of ChatGPT, interest in generative AI has surged, leading to increased awareness of the inner workings of large language models,” Comet Chief Executive Gideon Mendels said in an interview with SiliconANGLE. “A crucial factor in the success of training such models is prompt engineering, which involves the careful crafting of effective prompts by data scientists to ensure that the model is trained on high-quality data that accurately reflects the underlying task.”

Mendels explained that prompt engineering is a natural language processing technique used to create and fine-tune prompts, which are essential for obtaining accurate responses from models. Well-crafted prompts are also necessary to avoid “hallucinations,” which occur when an AI model fabricates responses.

“As prompt engineering becomes increasingly complex, the need for robust MLOps practices becomes critical and that is where Comet steps in,” the CEO added. “The new features built by Comet help streamline the machine learning lifecycle and ensure effective data management, respectively, resulting in more efficient and reliable AI solutions.”

Constellation Research Inc. Vice President and Principal Analyst Andy Thurai said that because LLMs are such a new area of development, most MLOps platforms do not provide any tools for managing LLM workflows. That’s because LLM engineering centers on fine-tuning prompts for pretrained models rather than on training models.

“The challenge is that, because LLMs are so big, the prompts need to be fine-tuned to get proper results,” Thurai said. “As a result, a huge market for prompt engineering has evolved, which involves experimenting and improving prompts that are inputted to LLMs. The inputs, outputs and the efficiency of these prompts need to be tracked for future analysis of why a certain prompt was chosen over others.”
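To make Thurai’s point concrete, here is a minimal, hypothetical sketch of what tracking a prompt’s inputs, outputs and efficiency can look like. It is not Comet’s API: `PromptLog`, `stub_model` and `exact_answer_score` are illustrative names, the model is a canned stand-in for a real LLM call, and the scoring metric is an assumption chosen only to show how a stored record explains why one prompt was picked over another.

```python
import time
from dataclasses import dataclass, field


@dataclass
class PromptRecord:
    """One logged prompt experiment: what was sent, what came back, how it scored."""
    template: str
    variables: dict
    prompt: str
    response: str
    latency_s: float  # wall-clock time of the model call, a simple efficiency signal
    score: float


@dataclass
class PromptLog:
    """Hypothetical in-memory log of prompt experiments (not a real Comet class)."""
    records: list = field(default_factory=list)

    def run(self, template, variables, model, scorer):
        # Render the template, call the model, and time the call.
        prompt = template.format(**variables)
        start = time.perf_counter()
        response = model(prompt)
        latency = time.perf_counter() - start
        record = PromptRecord(template, variables, prompt, response,
                              latency, scorer(response))
        self.records.append(record)
        return record

    def best(self):
        # Highest-scoring record; its stored inputs and outputs show why it won.
        return max(self.records, key=lambda r: r.score)


def stub_model(prompt):
    # Hypothetical stand-in for an LLM call that returns canned answers.
    if "one word" in prompt:
        return "Paris"
    return "The capital of France is Paris, a city known for..."


def exact_answer_score(response):
    # Hypothetical metric: 1.0 for an exact match, otherwise 0.0.
    return 1.0 if response.strip() == "Paris" else 0.0


log = PromptLog()
question = {"q": "What is the capital of France?"}
log.run("Answer the question: {q}", question, stub_model, exact_answer_score)
log.run("Answer in one word: {q}", question, stub_model, exact_answer_score)
best = log.best()
print(best.template)  # Answer in one word: {q}
```

Because every run keeps the rendered prompt, the response, the latency and the score together, a team can later revisit the log and see exactly why the one-word template was preferred.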

Comet said its new LLMOps tools are designed to serve two functions. For one, they’ll allow data scientists to iterate more rapidly by providing a playground for prompt tuning that automatically connects to experiment management. In addition, they provide debugging capabilities that make it possible to track prompt experimentation and decision-making through prompt chain visualization.

“They address the problem of prompt engineering and chaining by providing users with the ability to leverage the latest advancements in prompt management and query models, helping teams to iterate quicker, identify performance bottlenecks, and visualize the internal state of the prompt chains,” Mendels said.

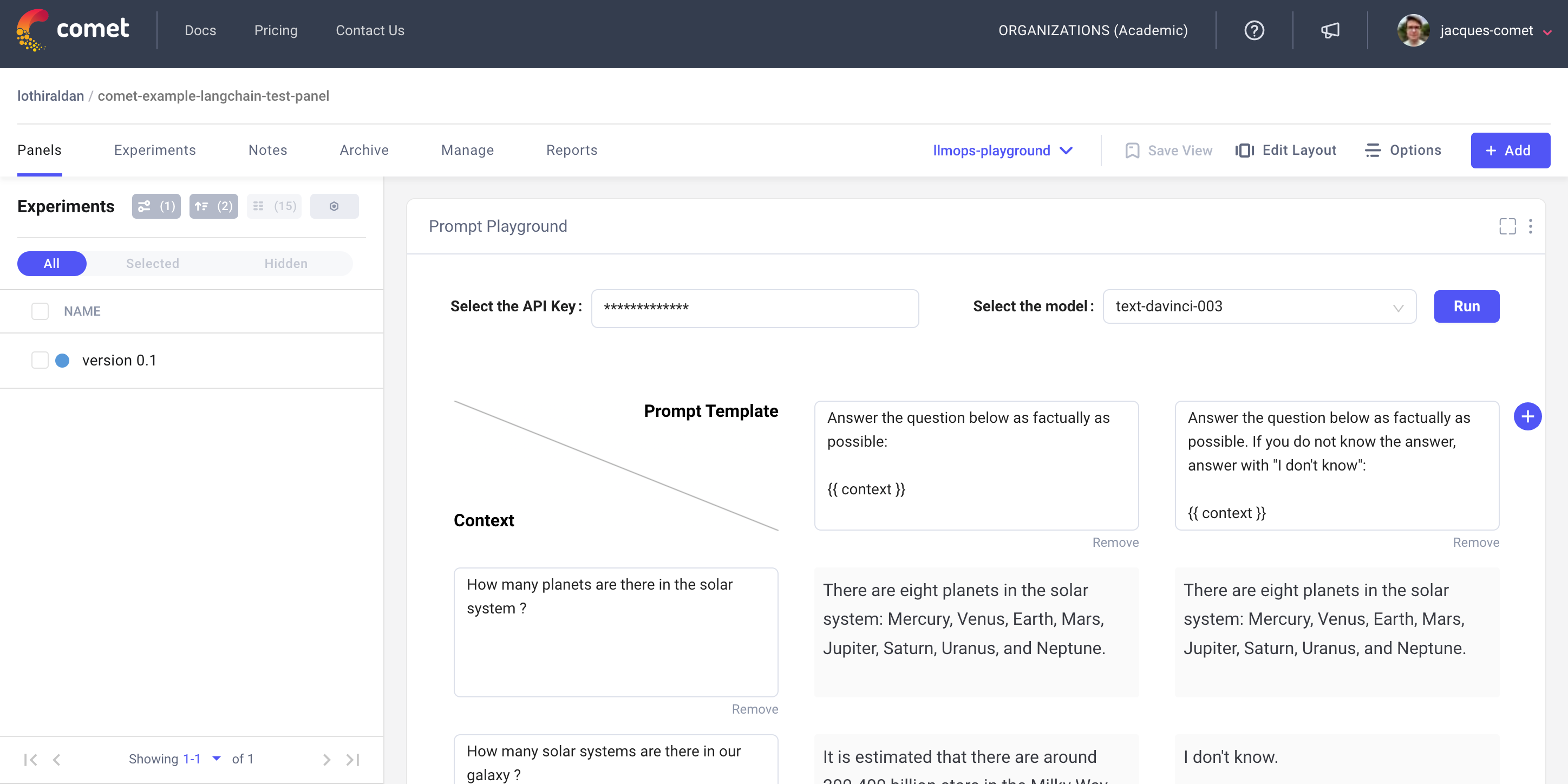

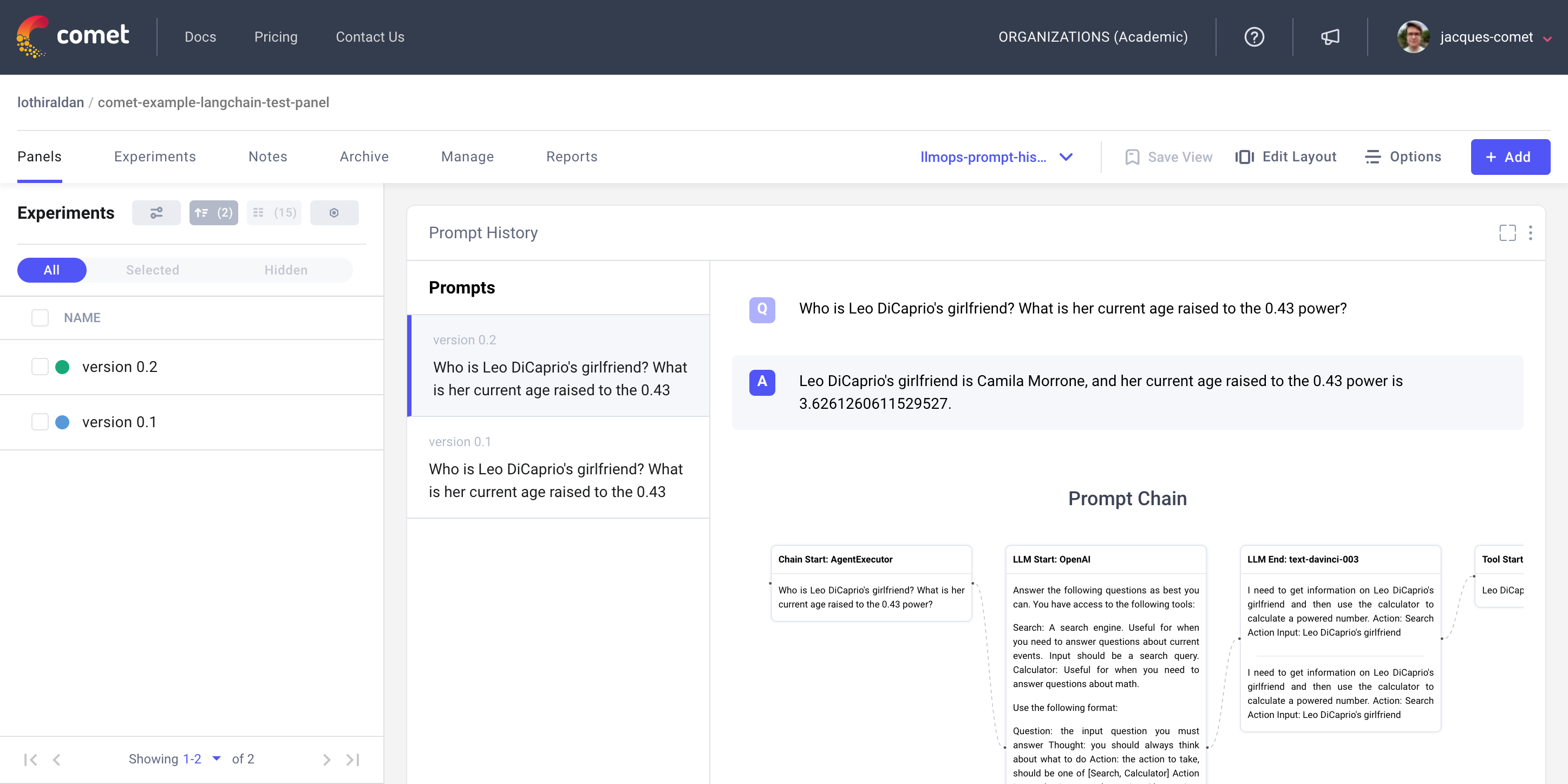

The new tools include Prompt Playground, which lets engineers iterate faster with different templates and understand how prompts behave in different contexts. There’s also Prompt History, a debugging tool that keeps track of prompts, responses and chains, and Prompt Usage Tracker, which teams can use to monitor their prompt usage and understand its impact at a more granular level.
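The idea behind a prompt history for chains and a usage tracker can be sketched in a few lines. This is a hypothetical illustration, not how Comet implements these tools: `ChainTracer` and `echo_model` are made-up names, and the “model” simply upper-cases the last line of its prompt so the example runs without any LLM service.

```python
from collections import Counter


class ChainTracer:
    """Hypothetical tracer: logs each step of a prompt chain and counts template usage."""

    def __init__(self):
        self.history = []       # (step name, prompt, response) tuples, in call order
        self.usage = Counter()  # number of times each template has been used

    def step(self, name, template, model, **variables):
        # Render the prompt, call the model, and record both for later debugging.
        prompt = template.format(**variables)
        response = model(prompt)
        self.history.append((name, prompt, response))
        self.usage[template] += 1
        return response

    def render(self):
        # Plain-text view of the chain's internal state, one line per step.
        return "\n".join(
            f"{i}. {name}: {prompt!r} -> {response!r}"
            for i, (name, prompt, response) in enumerate(self.history, 1)
        )


def echo_model(prompt):
    # Hypothetical stand-in for an LLM: upper-cases the last line of the prompt.
    return prompt.splitlines()[-1].upper()


SUMMARIZE = "Summarize:\n{text}"
TRANSLATE = "Translate to French:\n{text}"

tracer = ChainTracer()
summary = tracer.step("summarize", SUMMARIZE, echo_model, text="comet adds llm tools")
final = tracer.step("translate", TRANSLATE, echo_model, text=summary)
print(tracer.render())
```

Each step’s output feeds the next step’s prompt, and the recorded history lets a developer see the intermediate state of the chain rather than only its final answer, while the counter gives a per-template view of usage.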

Comet also announced new integrations with OpenAI LP, the creator of ChatGPT, and LangChain Inc. The OpenAI integration will make it possible to leverage GPT-3 and other LLMs, while LangChain helps to facilitate multilingual model development, the company said.

“These integrations add significant value to users by empowering data scientists to leverage the full potential of OpenAI’s GPT-3 and enabling users to streamline their workflow and get the most out of their LLM development,” Mendels said.

Image: Comet ML
