From virtual assistants to self-driving cars, tech companies are racing to launch products and enhance the user experience by exploring the capabilities of Artificial Intelligence (AI). This is evident from a Market Research Future Indeed report, which projects that the machine learning jobs market will be worth almost $31 billion by 2024. Machine learning creates algorithms that enable machines to learn and apply intelligence without being explicitly directed, and TensorFlow is an open-source library used for building machine learning models.

This article gives beginners a solid understanding of TensorFlow and explains why many people prefer it over other libraries.

What is Deep Learning?

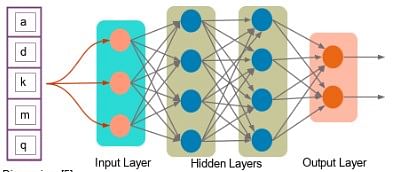

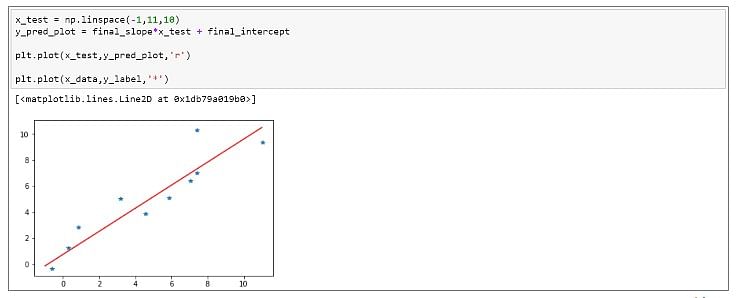

Deep learning is a subset of machine learning that is distinguished by a few specializations. For example, deep learning uses neural networks, which loosely simulate the human brain. Deep learning also involves analyzing large amounts of unstructured data, unlike traditional machine learning, which typically uses structured data. This unstructured data can be fed in the form of images, video, audio, text, etc.

The term ‘deep’ comes from the fact that a neural network can have multiple hidden layers.
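To make the idea of multiple hidden layers concrete, here is a minimal sketch in plain Python (no TensorFlow). The weights, the layer sizes, and the choice of a sigmoid activation are all hypothetical values picked for illustration, not taken from any trained model.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs

# Hypothetical weights: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
layers = [
    ([[0.1, 0.2], [0.3, -0.4], [-0.5, 0.6]], [0.0, 0.1, -0.1]),  # hidden layer 1
    ([[0.2, -0.1, 0.4], [0.7, 0.5, -0.3]], [0.05, -0.05]),       # hidden layer 2
    ([[0.6, -0.2]], [0.0]),                                      # output layer
]

def forward(x):
    """Pass the input through every layer in turn."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation

prediction = forward([1.0, 2.0])
print(len(layers) - 1, "hidden layers, output:", prediction)
```

Adding more entries to `layers` makes the network deeper without changing `forward`.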

Let us look at the popular deep learning libraries in the next section of the TensorFlow tutorial.

Popular Libraries for Deep Learning

Here is a list of libraries available for developing deep learning applications:

- Keras

- TensorFlow

- DL4J (Deeplearning4j)

- Theano

- Torch

What Is TensorFlow?

TensorFlow is an open-source library developed by the Google Brain team and released publicly in 2015. Python is by far the most common language used with TensorFlow. You can import the TensorFlow library into your Python environment and carry out deep learning development.

A TensorFlow program executes in a specific way. You first create nodes, which process the data, and arrange them in the form of a graph. The data is stored in the form of tensors, and the tensor data flows between the nodes.

Why Use TensorFlow?

One of TensorFlow’s best qualities is that it makes code development easy. The readily available APIs save users from writing code that would otherwise be time-consuming to produce, and TensorFlow speeds up the process of training a model. It also reduces the chance of errors in the program, typically by 55 to 85 percent.

The other important aspect is that TensorFlow is highly scalable. You can write your code once and then run it on a CPU, on a GPU, or across a cluster of these systems for training.

Generally, training the model is where a large part of the computation goes. The training process is also repeated multiple times to resolve any issues that arise. This consumes a lot of computing power, which is why you need distributed computing. If you need to process large amounts of data, TensorFlow makes it easy by running the code in a distributed manner.

GPUs, or graphics processing units, have become very popular, and Nvidia is one of the leaders in this space. GPUs are good at performing mathematical computations, such as matrix multiplication, and play a significant role in deep learning. TensorFlow also provides C++ and Python APIs, making development much faster.

Before going further in this TensorFlow tutorial, you should know what a tensor actually is.

What Is a Tensor?

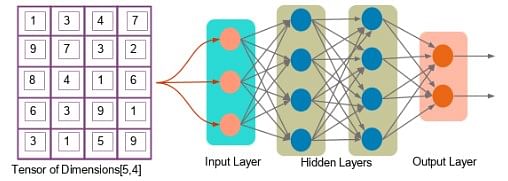

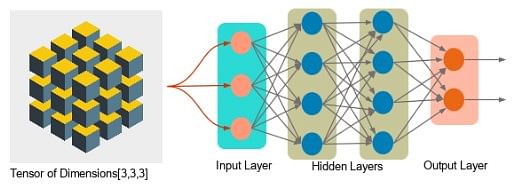

A tensor is a mathematical object represented as arrays of higher dimensions. These arrays of data with different sizes and ranks get fed as input to the neural network. These are the tensors.

You can have arrays or vectors, which are one-dimensional, or matrices, which are two-dimensional. Tensors, however, can have three, four, five, or more dimensions. This helps in keeping the data tightly packed in one place and then performing all the analysis around it.

Let us look at an example of a tensor of [5,4] dimensions (two-dimensional).

Next, you can see a tensor of dimension [3,3,3] (three-dimensional).

Let us discuss Tensor Rank in the next section of the TensorFlow tutorial.

Tensor Rank

Tensor rank is the number of dimensions of the tensor. It starts at zero. A tensor of rank zero is a scalar, which doesn’t have multiple entries in it. It’s a single value.

For example, s = 10 is a tensor of rank 0 or a scalar.

V = [10, 11, 12] is a tensor of rank 1 or a vector.

M = [[1, 2, 3],[4, 5, 6]] is a tensor of rank 2 or a matrix.

T = [[[1],[2],[3]],[[4],[5],[6]],[[7],[8],[9]]] is a tensor of rank 3 or a tensor.
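The rank and shape of these examples can be checked with a small helper. This is a sketch in plain Python that treats nested lists as tensors and assumes they are non-empty and rectangular; the function names `shape` and `rank` are chosen here for illustration and are not TensorFlow calls.

```python
def shape(tensor):
    """Return the size of each dimension of a rectangular nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]  # descend one nesting level
    return dims

def rank(tensor):
    """The rank is the number of dimensions, i.e. the nesting depth."""
    return len(shape(tensor))

s = 10
V = [10, 11, 12]
M = [[1, 2, 3], [4, 5, 6]]
T = [[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]

for name, value in [("s", s), ("V", V), ("M", M), ("T", T)]:
    print(name, "rank:", rank(value), "shape:", shape(value))
```

A scalar has an empty shape, which is why its rank is 0.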

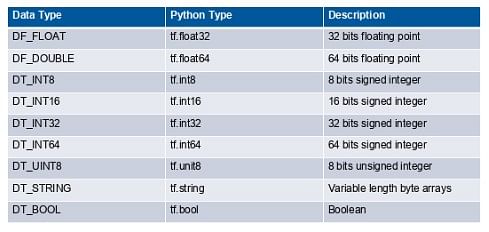

Tensor Data Type

In addition to rank and shape, tensors also have a data type. The following is a list of the data types:

The next section of this TensorFlow tutorial discusses how to build a computational graph.

Building a Computation Graph

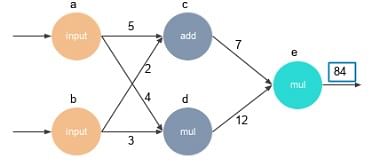

Everything in TensorFlow is based on designing a computational graph. The graph is a network of nodes, with each node performing an operation such as addition, multiplication, or the evaluation of some multivariate equation.

The code is written to build the graph, create a session, and execute that graph. The nodes of a graph represent mathematical operations, and the edges represent tensors. In TensorFlow, a computation is described using a data flow graph.

Everything is an operation. Not only adding two variables but creating a variable is also an operation. Every time you assign a variable, it becomes a node. You can perform mathematical operations, such as addition and multiplication on that node.

You start by building these nodes into a graph and then execute the graph. That is how a TensorFlow program is structured.

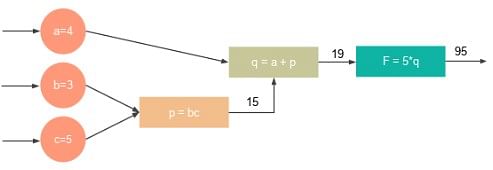

Here’s an example that depicts how a computation graph gets created. Let’s say you want to perform the following calculation: F(a,b,c) = 5(a+bc)

The three variables a, b, and c translate into three nodes within a graph, as shown.

In TensorFlow, assigning these variables is also an operation.

Step 1 is to build the graph by assigning the variables.

Here, the values are:

a = 4

b = 3

c = 5

Step 2 of building the graph is to multiply b and c.

p = b*c

Step 3 is to add ‘a’ to ‘bc.’

q = a + p

Step 4 is to multiply q by 5.

F = 5*q

Finally, you get the result.

Here, we have six nodes. First, you define each node and then create a session to execute the node. This step, in turn, will go back and execute each of the six nodes to get those values.
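The build-then-execute idea can be sketched without TensorFlow. The `Node` class and `run` function below are hypothetical helpers written for this illustration; they only mimic the way a graph is defined first and evaluated later, and are not how TensorFlow is implemented.

```python
import operator

class Node:
    """A graph node: a stored value (no op) or an operation applied to input nodes."""

    def __init__(self, name, op=None, inputs=(), value=None):
        self.name = name
        self.op = op
        self.inputs = inputs
        self.value = value

def run(node):
    """Evaluate a node by first evaluating every node it depends on."""
    if node.op is None:
        return node.value
    return node.op(*(run(parent) for parent in node.inputs))

# Building the graph: nothing is computed yet.
a = Node("a", value=4)
b = Node("b", value=3)
c = Node("c", value=5)
p = Node("p", op=operator.mul, inputs=(b, c))               # p = b * c
q = Node("q", op=operator.add, inputs=(a, p))               # q = a + p
F = Node("F", op=lambda q_value: 5 * q_value, inputs=(q,))  # F = 5 * q

# Executing the graph: running F walks back through all six nodes.
result = run(F)
print(result)  # 95
```

With a = 4, b = 3, and c = 5, the intermediate values are p = 15 and q = 19, so F = 5 * 19 = 95.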

Now that you know how to build a computational graph, let’s learn about the programming elements in the next section of this TensorFlow tutorial.

Programming Elements in TensorFlow

TensorFlow lets you hold data in three different kinds of data elements:

- Constants

- Variables

- Placeholders

Constants

Constants are parameters with values that do not change. We use the tf.constant() command to define a constant.

Example:

import tensorflow as tf

a = tf.constant(2.0, tf.float32)

b = tf.constant(3.0)

print(a, b)

You cannot change the values of constants during computation. Specifying the data type of the constant is optional.

Variables

Variables allow us to add new trainable parameters to the graph. To define a variable, we use the tf.Variable() command, and we initialize the variable before running the graph in a session.

Example:

W = tf.Variable([.3],dtype=tf.float32)

b = tf.Variable([-.3],dtype=tf.float32)

x = tf.placeholder(tf.float32)

linear_model = W*x+b

Placeholders

Placeholders allow us to feed data into a TensorFlow model from outside the model. They permit a value to be assigned later. To define a placeholder, we use the tf.placeholder() command.

Example:

a = tf.placeholder(tf.float32)

b = a*2

with tf.Session() as sess:

    result = sess.run(b, feed_dict={a: 3.0})

    print(result)

A placeholder is somewhat similar to a variable but is primarily used for feeding data from outside. Typically, when you perform a deep learning exercise, you cannot get all the data in one shot and store it in memory. That would become unmanageable. You will generally get data in batches.

Let’s assume you want to train your model, and you have a million images for this training. One way to accomplish this would be to create a variable, load all the images, and analyze the results. However, this might not be the best way, as you might run out of memory or run into performance issues.

The issue is not just storing the images. You need to perform training as well, which is an iterative process. It may need to load the images several times to train the model. It’s not just the storing of a million images, but also the processing, that takes up memory.

Another way of accomplishing this is by using a placeholder, where you read the data in batches. And maybe out of the million images, you get a thousand images at a time, process them and then get the next thousand and so on.

That is the idea behind a placeholder; it is primarily used to feed data to your model. You read data from outside and feed it to the graph through the feed_dict argument of sess.run(), a dictionary that maps each placeholder to the value it should take (in the example, a is mapped to 3.0).

When you’re running the session, you specify how you want to feed the data to your model.
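Batch feeding can be sketched in plain Python. In this illustration, `read_batches` and `run_graph` are hypothetical names: `run_graph` stands in for a call such as `sess.run(b, feed_dict={a: batch})` with b = a*2, and a lazy `range` stands in for a million images that are never all in memory at once.

```python
from itertools import islice

def read_batches(source, batch_size):
    """Yield lists of up to batch_size items without loading the whole source."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def run_graph(feed):
    """Stand-in for running the graph b = a * 2 on one batch of fed values."""
    return [value * 2 for value in feed]

num_batches = 0
processed = 0
for batch in read_batches(range(1_000_000), 1000):
    outputs = run_graph(batch)  # only 1,000 items are held at a time
    processed += len(outputs)
    num_batches += 1

print(num_batches, "batches,", processed, "items processed")
```

Only the current batch lives in memory, which is the same reason a placeholder is filled one batch at a time.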

Session

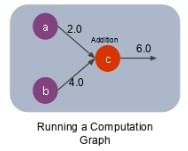

Once you create a graph, you need to execute it by creating a session and calling its run method. The session evaluates the nodes using the TensorFlow runtime.

You can create a session by giving the command as shown:

sess = tf.Session()

Consider an example shown below:

a = tf.constant(5.0)

b = tf.constant(3.0)

c = a*b

# Launch Session

sess = tf.Session()

# Evaluate the Tensor c

print(sess.run(c))

There are three nodes: a, b, and c. We create a session and run the node ‘c’, as this is where the mathematical operation is carried out and the result is obtained.

On running node c, nodes a and b are evaluated first, and then the multiplication is done at node c. We get the result 15.0.

The next section of this TensorFlow tutorial focuses on how you can perform linear regression using TensorFlow.

Linear Regression Using TensorFlow

Let’s see a simple example of linear regression and how it works in TensorFlow. Here, we solve a simple equation [y=m*x+b]. We will calculate the slope(m) and the intercept(b) of the line that best fits our data.

The following are the steps to calculate the values of ‘m’ and ‘b’.

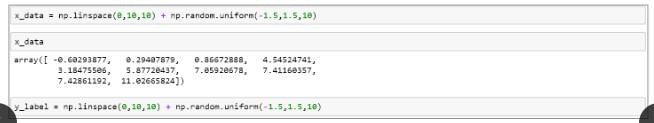

Step 1 – Setting up Artificial Data for Regression

Below is the code to create random test data that follows a roughly linear trend:

Here, we generate ten evenly spaced numbers between 0 and 10 and another ten random values between -1.5 and 1.5. Then, we add these values.
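The data described above can be approximated with the standard library alone. This is a sketch under assumptions: the noise is added to the y values only, the seed is fixed for repeatability, and the names `x_data`, `noise`, and `y_label` are chosen here for illustration.

```python
import random

random.seed(0)  # assumption: fixed seed so the sketch is repeatable

n = 10
# Ten evenly spaced numbers between 0 and 10 (both ends included).
x_data = [10 * i / (n - 1) for i in range(n)]
# Ten random values between -1.5 and 1.5.
noise = [random.uniform(-1.5, 1.5) for _ in range(n)]
# Adding the two gives points scattered around the line y = x.
y_label = [x + e for x, e in zip(x_data, noise)]

for x, y in zip(x_data, y_label):
    print(round(x, 2), round(y, 2))
```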

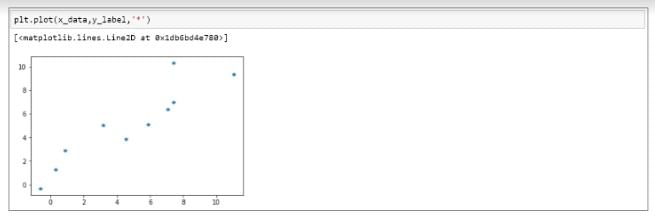

Step 2 – Plot the Data

If we plot the above data, this is how it would look:

Now, we want to find the best fit (equation of a line) for the given data points.

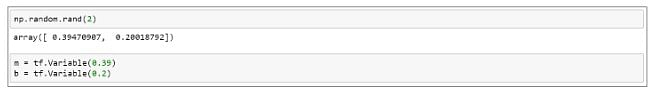

Step 3 – Assign the Variables

Refer to the code shown below:

Here, we have initialized the variables ‘m’ and ‘b’ with random values using a NumPy random function.

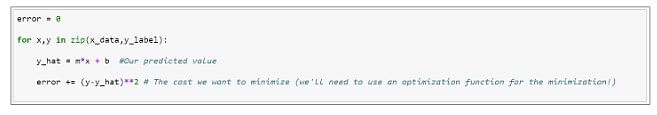

Step 4 – Apply the Cost Function

Next, we define the cost function.

The cost function is the error between the actual value and the calculated value. With the given values of ‘x’ that we feed to this model, it will calculate the predicted value based on the value of ‘m’ and ‘b’ at any given point in time. The error will be the difference between the calculated value and the actual value.
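As a minimal sketch of such a cost, the function below sums the squared differences between predicted and actual values. The sum of squares is an assumption made here (the text only says the error is a difference), and the sample points are made up so that they lie exactly on y = 2x + 1.

```python
def predict(m, b, xs):
    """Predicted values for the line y = m*x + b."""
    return [m * x + b for x in xs]

def cost(m, b, xs, ys):
    """Sum of squared errors between predicted and actual values (assumed form)."""
    return sum((y_hat - y) ** 2 for y_hat, y in zip(predict(m, b, xs), ys))

# Hypothetical data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

print(cost(2.0, 1.0, xs, ys))  # 0.0, the perfect fit
print(cost(1.0, 0.0, xs, ys))  # 30.0, errors of 1, 2, 3 and 4 squared and summed
```

The closer ‘m’ and ‘b’ are to the line that generated the data, the smaller the cost.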

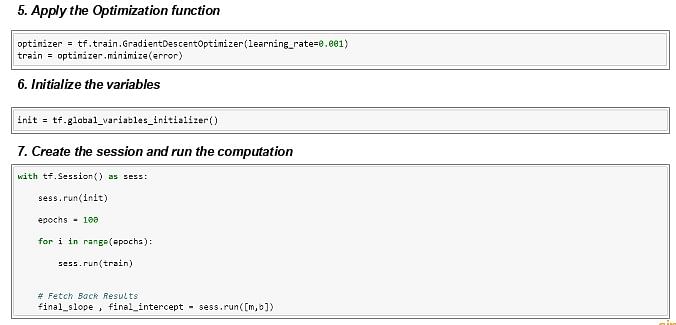

Step 5 – Apply the Optimization Function

For training purposes, you need to use an optimizer.

In this case, it is a gradient descent optimizer, and we need to specify the learning rate.
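For reference, the update that gradient descent applies to ‘m’ and ‘b’ can be written out. This derivation assumes the cost is the sum of squared errors (the exact cost is an assumption) and writes the learning rate as $\alpha$, a symbol introduced here.

```latex
\begin{align}
E(m, b) &= \sum_{i} \left( m x_i + b - y_i \right)^2 \\
\frac{\partial E}{\partial m} &= 2 \sum_{i} \left( m x_i + b - y_i \right) x_i \\
\frac{\partial E}{\partial b} &= 2 \sum_{i} \left( m x_i + b - y_i \right) \\
m &\leftarrow m - \alpha \, \frac{\partial E}{\partial m}, \qquad
b \leftarrow b - \alpha \, \frac{\partial E}{\partial b}
\end{align}
```

A larger $\alpha$ takes bigger steps and can overshoot the minimum, while a smaller one converges more slowly.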

Until now, we have only created nodes, and nothing has been executed. We are just building a graph consisting of nodes.

Step 6 – Initialize the Variables

In TensorFlow, whenever you create variables, you need to create a node for initializing the variables that will execute in the session. In this step, all the variables created earlier get initialized. This command is essential for the lines of code that contain variables. It is unnecessary for constants and placeholders.

Step 7 – Create the Session and Run the Computation

Here, we have created a session named ‘sess.’ Because the session is created in a ‘with’ block, it closes automatically when the block finishes, so you don’t need to close it explicitly.

First, you’ll start with the initial ‘with’ block. The first line of code will initialize the variables. Next, you need to run the training method to get the result. This step, in turn, will execute the rest of the graph.

We train the model for several epochs, or iterations, to arrive at the values of ‘m’ and ‘b.’ When the training finishes, you get the final values of ‘m’ and ‘b.’

We specify the number of epochs so that we know when the training is complete. An epoch is one full pass of the training observations through the model. If you have a thousand observations and pass them through the model a hundred times, that is a hundred epochs. In every epoch, the values of ‘m’ and ‘b’ keep changing so that the error is reduced. At the end of the training session, the values of ‘m’ and ‘b’ will be different from the values they started with.
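The effect of training over epochs can be sketched in plain Python. This is not the code from the tutorial: it assumes a sum-of-squared-errors cost, starts ‘m’ and ‘b’ at zero instead of random values, and uses made-up data on the line y = 2x + 1 with a hand-picked learning rate.

```python
def train(xs, ys, learning_rate, epochs):
    """Fit y = m*x + b with gradient descent on the sum of squared errors."""
    m, b = 0.0, 0.0  # assumption: start from zero rather than random values
    for _ in range(epochs):
        # One epoch: every observation passes through the model once.
        errors = [m * x + b - y for x, y in zip(xs, ys)]
        grad_m = 2 * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 * sum(errors)
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b

# Hypothetical data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

m, b = train(xs, ys, learning_rate=0.01, epochs=2000)
print("slope:", round(m, 3), "intercept:", round(b, 3))
```

Running with fewer epochs leaves ‘m’ and ‘b’ further from 2 and 1, which is why the number of epochs matters.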

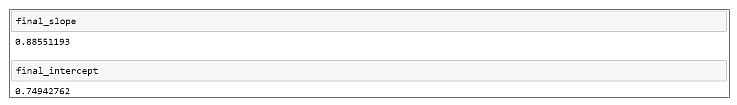

Step 8 – Print the Slope and Intercept

After obtaining the values of ‘m’ and ‘b,’ we can display the details. This step is the end of the training process.

Step 9 – Evaluate the Results

The last step is used to plot the model, i.e., the best-fit line. You can use the plot method to plot the best-fit line.

You can see that the line of best fit is passing in between all the data points. If you consider any specific location and calculate the error, it is minimal. This is how you evaluate the results.

Introducing RNN

Neural networks are of different types, like Convolutional Neural Network(CNN), Artificial Neural Network(ANN), RNN, etc. RNN is one type of neural network, and it stands for Recurrent Neural Network.

Networks like CNN and ANN are feed-forward networks, where the information only goes from left to right or from front to back. With RNN, there is some information traveling backward as well. And therefore it is known as a recurrent neural network.

Each type of neural network has a specific application. For example, convolutional neural networks, or CNNs, are ideal for image processing and object detection in videos and images. RNNs, on the other hand, are an excellent choice for Natural Language Processing (NLP) or speech recognition.

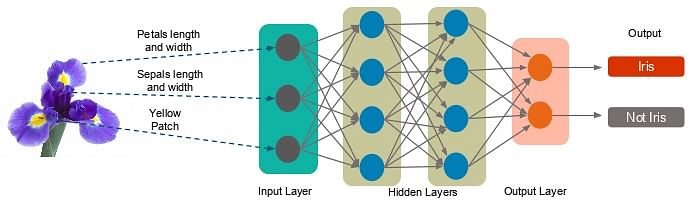

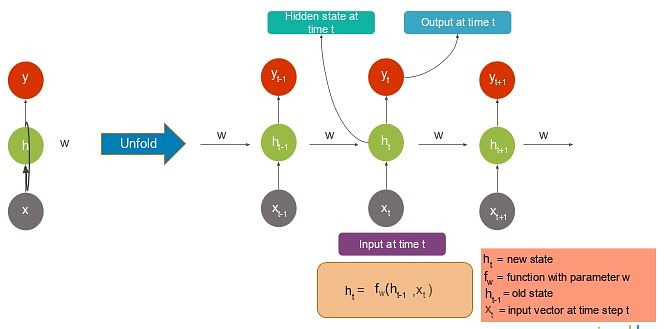

A typical RNN looks like the image shown below:

In a standard neural network, you have multiple neurons in the input layer that accept inputs. The input is processed and passed to the next layer. In RNN, a part of the previous output is fed in, along with the inputs for the given time. This process can be a little confusing.

Let us see an expanded view of one single neuron in an unfolded manner. If we are receiving inputs or data over a period of time, then this is how the neuron will look:

Note that these are not three neurons. This is one neuron, and it is shown in an unfolded way.

At a given time ‘t-1’, an input of $x_{t-1}$ is fed to the neuron, and it generates an output of $y_{t-1}$.

When we move to instant ‘t’, the neuron accepts an input of $x_t$ and an additional input from the previous time frame ‘t-1’. So, $y_{t-1}$ also gets fed in at the hidden state $h_t$, and that results in $y_t$.

Similarly, for time ‘t+1’, an input of $x_{t+1}$ plus the output from the previous time frame, $y_t$, are fed in at the hidden state $h_{t+1}$ to get an output of $y_{t+1}$.
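The unfolded neuron can be sketched as a loop in plain Python. The weights `w_x` and `w_y`, the zero bias, and the tanh activation are assumptions chosen for illustration; the point is only that each output depends on the current input and on the previous output.

```python
import math

def rnn_neuron(inputs, w_x=0.5, w_y=0.8, bias=0.0):
    """Run one recurrent neuron over a sequence and return every output."""
    y_prev = 0.0  # no previous output exists before the first time step
    outputs = []
    for x_t in inputs:
        # Current input plus the previous output, squashed by tanh.
        y_t = math.tanh(w_x * x_t + w_y * y_prev + bias)
        outputs.append(y_t)
        y_prev = y_t  # carried forward to the next time step
    return outputs

outputs = rnn_neuron([1.0, 1.0, 1.0])
print(outputs)
```

Even though the same input value is fed three times, the outputs differ, because each step also sees the output of the step before it.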

This TensorFlow tutorial gives you a detailed description of the types of RNN in the next section.

Types of RNN(Recurrent Neural Network)

There are different types of RNNs, which vary based on the application:

- One-to-one

- One-to-many

- Many-to-one

- Many-to-many

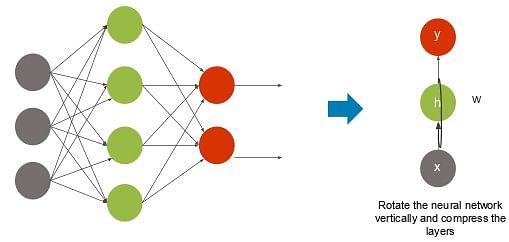

One-to-One

It is known as the Vanilla Neural Network, which is for regular machine learning problems. You can see a one-to-one RNN below:

An example of this network is stock price prediction, where you feed in only one input, the stock price, spread over a period of time. You get an output, which is again the stock price, predicted over the next two, three, or ten days. The number of outputs and inputs is the same.

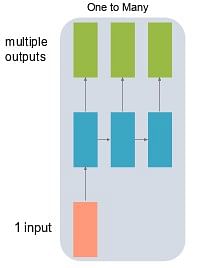

One-to-Many

Here, there is one input and multiple outputs. The network is as shown below:

Example: Let’s say you want to caption an image. The input will be just an image. For output, you’re looking for a phrase. Once you share an image, the network generates a sequence of words for a caption.

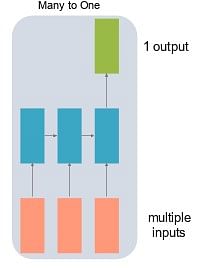

Many-to-One

This kind of network is used to carry out sentiment analysis. The network is as shown below:

Example: You feed in some text and want the output to be the sentiment expressed by the text. It could be positive or negative. The input is many different words, but the output is a single label.

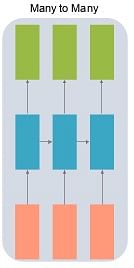

Many-to-Many

It is generally used in machine translation. The network is as shown below:

Example: Let’s say you want to translate some text. You feed in a sentence in one language, and then you want another sentence in another language. In this example, the input and output will have multiple words. RNNs are useful in performing time series analysis.
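The difference between these types comes down to how many inputs are consumed and how many outputs are kept. The sketch below reuses one hypothetical recurrent step with made-up weights; it is a simplification, and the many-to-many version shown here emits one output per input, whereas real translation models usually allow input and output lengths to differ.

```python
import math

def step(x_t, h_prev, w_x=0.5, w_h=0.8):
    """One recurrent step with hypothetical weights."""
    return math.tanh(w_x * x_t + w_h * h_prev)

def one_to_many(x, n_outputs):
    """One input, a sequence of outputs (for example, image to caption)."""
    h, outputs = 0.0, []
    for i in range(n_outputs):
        h = step(x if i == 0 else 0.0, h)  # the input is seen only at the first step
        outputs.append(h)
    return outputs

def many_to_one(xs):
    """A sequence of inputs, one output (for example, text to sentiment)."""
    h = 0.0
    for x_t in xs:
        h = step(x_t, h)
    return h  # only the final state is kept

def many_to_many(xs):
    """A sequence of inputs, a sequence of outputs (one per input here)."""
    h, outputs = 0.0, []
    for x_t in xs:
        h = step(x_t, h)
        outputs.append(h)
    return outputs

sequence = [0.2, -0.4, 0.9]
print(len(one_to_many(1.0, 4)), "outputs from one input")
print(many_to_one(sequence), "single output from three inputs")
print(len(many_to_many(sequence)), "outputs from three inputs")
```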

Use Case Implementation of RNN

Problem statement – We have gathered data related to milk production over several months. By using an RNN, we want to predict milk production per cow in pounds using a time series analysis.

This TensorFlow tutorial is just an introduction to the still-evolving world of AI and data science. Understanding the other concepts of deep learning is not a cakewalk. You need a step-by-step guide to comprehend the basics of machine learning and deep learning.